fread cannot read a .csv file of 300Mb with 200Gb of free RAM and falls with an error

Error: cannot allocate vector of size 5.6 Mb

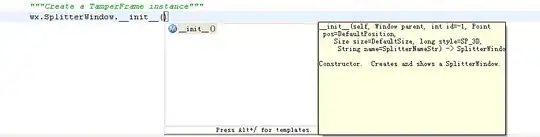

Task manager screenshot:

The file contains 373522 rows and 401 columns of which 1 column (identifier) is character and 400 columns are numeric.

UPD: this issue seems to be not related to lack of RAM but to freed allocation mechanism, because as mentioned above I have 200Gb free RAM and want to reed only 300Mb csv file with numeric columns

UPD2: VERBOSE output added

How do I read the file:

lang-r

data <- fread(

file = fn,

sep = ",",

stringsAsFactors = FALSE,

data.table = FALSE,

nrows = 1

)

col_classes <- c(

"character",

rep("numeric", ncol(data) - 1)

)

data <- fread(

file = fn,

sep = ",",

na.strings = c("NA", "na", "NULL", "null", ""),

stringsAsFactors = FALSE,

colClasses = col_classes,

showProgress = TRUE,

data.table = FALSE

)

lang-r

> file.size(fn)

[1] 331201365

Session info:

lang-r

> sessionInfo()

R version 3.5.2 (2018-12-20)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows Server >= 2012 x64 (build 9200)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.1252 LC_CTYPE=English_United States.1252

[3] LC_MONETARY=English_United States.1252 LC_NUMERIC=C

[5] LC_TIME=English_United States.1252

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] stringr_1.3.1 purrr_0.2.5 dplyr_0.7.8 data.table_1.12.0 crayon_1.3.4

loaded via a namespace (and not attached):

[1] Rcpp_1.0.0 assertthat_0.2.0 R6_2.3.0 magrittr_1.5 pillar_1.3.1 stringi_1.2.4

[7] rlang_0.3.1 rstudioapi_0.9.0 bindrcpp_0.2.2 tools_3.5.2 glue_1.3.0 yaml_2.2.0

[13] compiler_3.5.2 pkgconfig_2.0.2 tidyselect_0.2.5 bindr_0.1.1 tibble_2.0.1

Please let me know if VERBOSE output of freed required.

lang-r

omp_get_max_threads() = 64

omp_get_thread_limit() = 2147483647

DTthreads = 0

RestoreAfterFork = true

Input contains no \n. Taking this to be a filename to open

[01] Check arguments

Using 64 threads (omp_get_max_threads()=64, nth=64)

NAstrings = [<<NA>>, <<na>>, <<NULL>>, <<null>>, <<>>]

None of the NAstrings look like numbers.

show progress = 1

0/1 column will be read as integer

[02] Opening the file

Opening file I:/secret_file_name.csv

File opened, size = 315.9MB (331201365 bytes).

Memory mapped ok

[03] Detect and skip BOM

[04] Arrange mmap to be \0 terminated

\n has been found in the input and different lines can end with different line endings (e.g. mixed \n and \r\n in one file). This is common and ideal.

[05] Skipping initial rows if needed

Positioned on line 1 starting: <<id,column_1>>

[06] Detect separator, quoting rule, and ncolumns

Using supplied sep ','

sep=',' with 100 lines of 301 fields using quote rule 0

Detected 301 columns on line 1. This line is either column names or first data row. Line starts as: <<id,column_1>>

Quote rule picked = 0

fill=false and the most number of columns found is 301

[07] Detect column types, good nrow estimate and whether first row is column names

Number of sampling jump points = 100 because (331201363 bytes from row 1 to eof) / (2 * 163458 jump0size) == 1013

Type codes (jump 000) : A7777777777777777777777557777777557777775577777777755777777755777555777555777777...7777777777 Quote rule 0

Type codes (jump 002) : A7777777777777777777777557777777557777775577777777755777777755777777777557777777...7777777777 Quote rule 0

Type codes (jump 020) : A7777777777777777777777557777777557777775577777777755777777755777777777557777777...7777777777 Quote rule 0

Type codes (jump 027) : A7777777777777777777777777777777557777775577777777755777777777777777777557777777...7777777777 Quote rule 0

Type codes (jump 058) : A7777777777777777777777777777777777777777777777777777777777777777777777557777777...7777777777 Quote rule 0

Type codes (jump 100) : A7777777777777777777777777777777777777777777777777777777777777777777777557777777...7777777777 Quote rule 0

'header' determined to be true due to column 2 containing a string on row 1 and a lower type (float64) in the rest of the 10059 sample rows

=====

Sampled 10059 rows (handled \n inside quoted fields) at 101 jump points

Bytes from first data row on line 2 to the end of last row: 331159811

Line length: mean=903.71 sd=756.62 min=326 max=4068

Estimated number of rows: 331159811 / 903.71 = 366444

Initial alloc = 732888 rows (366444 + 100%) using bytes/max(mean-2*sd,min) clamped between [1.1*estn, 2.0*estn]

=====

[08] Assign column names

[09] Apply user overrides on column types

After 6 type and 0 drop user overrides : A7777777777777777777777777777777777777777777777777777777777777777777777777777777...7777777777

[10] Allocate memory for the datatable

Allocating 301 column slots (301 - 0 dropped) with 732888 rows

Error: cannot allocate vector of size 5.6 Mb