I need some help in order to understand how accuracy is calculated when fitting a model in Keras. This is a sample history of training the model:

Train on 340 samples, validate on 60 samples

Epoch 1/100

340/340 [==============================] - 5s 13ms/step - loss: 0.8081 - acc: 0.7559 - val_loss: 0.1393 - val_acc: 1.0000

Epoch 2/100

340/340 [==============================] - 3s 9ms/step - loss: 0.7815 - acc: 0.7647 - val_loss: 0.1367 - val_acc: 1.0000

Epoch 3/100

340/340 [==============================] - 3s 10ms/step - loss: 0.8042 - acc: 0.7706 - val_loss: 0.1370 - val_acc: 1.0000

...

Epoch 25/100

340/340 [==============================] - 3s 9ms/step - loss: 0.6006 - acc: 0.8029 - val_loss: 0.2418 - val_acc: 0.9333

Epoch 26/100

340/340 [==============================] - 3s 9ms/step - loss: 0.5799 - acc: 0.8235 - val_loss: 0.3004 - val_acc: 0.8833

So, validation accuracy is 1 in the first epochs? How can the validation accuracy be better than the training accuracy?

This are figures that show all values of accuracy and loss:

Then I use sklearn metrics to evaluate final results:

def evaluate(predicted_outcome, expected_outcome):

f1_score = metrics.f1_score(expected_outcome, predicted_outcome, average='weighted')

balanced_accuracy_score = metrics.balanced_accuracy_score(expected_outcome, predicted_outcome)

print('****************************')

print('| MODEL PERFORMANCE REPORT |')

print('****************************')

print('Average F1 score = {:0.2f}.'.format(f1_score))

print('Balanced accuracy score = {:0.2f}.'.format(balanced_accuracy_score))

print('Confusion matrix')

print(metrics.confusion_matrix(expected_outcome, predicted_outcome))

print('Other metrics')

print(metrics.classification_report(expected_outcome, predicted_outcome))

I get this output (as you can see, the results are terrible):

****************************

| MODEL PERFORMANCE REPORT |

****************************

Average F1 score = 0.25.

Balanced accuracy score = 0.32.

Confusion matrix

[[ 7 24 2 40]

[ 11 70 4 269]

[ 0 0 0 48]

[ 0 0 0 6]]

Other metrics

precision recall f1-score support

0 0.39 0.10 0.15 73

1 0.74 0.20 0.31 354

2 0.00 0.00 0.00 48

3 0.02 1.00 0.03 6

micro avg 0.17 0.17 0.17 481

macro avg 0.29 0.32 0.12 481

weighted avg 0.61 0.17 0.25 481

Why the accuracy and loss values of Keras fit functions are so different from the values of sklearn metrics?

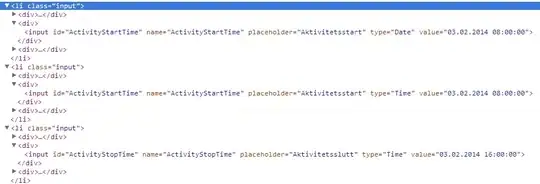

This is my model, in case it helps:

model = Sequential()

model.add(LSTM(

units=100, # the number of hidden states

return_sequences=True,

input_shape=(timestamps,nb_features),

dropout=0.2,

recurrent_dropout=0.2

)

)

model.add(Dropout(0.2))

model.add(Flatten())

model.add(Dense(units=nb_classes,

activation='softmax'))

model.compile(loss="categorical_crossentropy",

metrics = ['accuracy'],

optimizer='adadelta')

Input data dimensions:

400 train sequences

481 test sequences

X_train shape: (400, 20, 17)

X_test shape: (481, 20, 17)

y_train shape: (400, 4)

y_test shape: (481, 4)

This is how I apply sklearn metrics:

testPredict = model.predict(np.array(X_test))

y_test = np.argmax(y_test.values, axis=1)

y_pred = np.argmax(testPredict, axis=1)

evaluate(y_pred, y_test)

It looks that I miss something.