To directly answer the question: no, it's not impossible to get a fast FCP label. There's more to the question, so I'll try to elaborate.

Another way to phrase the "fast" criterion is: "Do at least 90% of user experiences have an FCP of less than 1 second?"

Why 90%? Because it's inclusive of a huge proportion of user experiences. As the PSI docs say:

> Our goal is to make sure that pages work well for the majority of users. By focusing on 90th and 95th percentile values for our metrics, this ensures that pages meet a minimum standard of performance under the most difficult device and network conditions.

Why 1 second? It's a subjective value for how quickly users expect the page to start showing meaningful progress. After 1 second, users may become distracted or even frustrated. Of course the holy grail is to have instant loading, but this is chosen as a realistic benchmark to strive towards.

So at worst, 10% of FCP experiences take 1 second or longer. That kind of guarantee is a high enough bar to be confident that users fairly consistently have a fast experience.
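To make the criterion concrete, here is a minimal Python sketch, not how PSI itself is implemented. The function names (`share_under`, `is_fast`) and the sample histogram are hypothetical; only the `start`/`end`/`density` shape of the bins mirrors the CrUX histogram. It assumes bin boundaries line up with the 1000 ms threshold, so a bin that straddles the threshold would not be counted.

```python
FAST_FCP_MS = 1000     # threshold from the text: FCP under 1 second
REQUIRED_SHARE = 0.9   # at least 90% of user experiences


def share_under(bins, threshold_ms):
    """Sum the density of every bin that ends at or before threshold_ms."""
    return sum(density for _start, end, density in bins if end <= threshold_ms)


def is_fast(bins):
    """True if at least 90% of experiences have an FCP under 1 second."""
    return share_under(bins, FAST_FCP_MS) >= REQUIRED_SHARE


# Hypothetical origin: (start_ms, end_ms, density), densities sum to 1.
example_bins = [
    (0, 500, 0.5),
    (500, 1000, 0.4375),
    (1000, 2000, 0.0625),
]

print(is_fast(example_bins))
```

In this made-up histogram, 93.75% of experiences fall under 1 second, so the origin would clear the bar; shifting more density into the last bin would make it fail.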

That explains why the bar is set where it is. To the question of how realistic it is to achieve, we can actually answer that using the publicly available CrUX data on BigQuery.

    #standardSQL
    SELECT
      p90,
      COUNT(0) AS numOrigins
    FROM (
      SELECT
        origin,
        MIN(start) AS p90
      FROM (
        SELECT
          origin,
          bin.start,
          SUM(bin.density) OVER (PARTITION BY origin ORDER BY bin.start) AS cdf
        FROM
          `chrome-ux-report.all.201901`,
          UNNEST(first_contentful_paint.histogram.bin) AS bin)
      WHERE
        cdf >= 0.9
      GROUP BY
        origin)
    GROUP BY
      p90
    ORDER BY
      p90

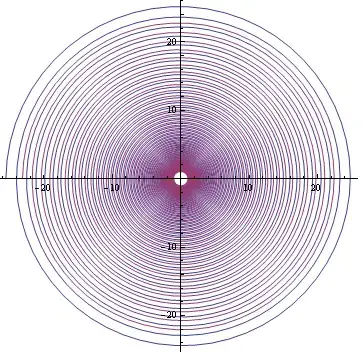

This query counts, for each bin of the FCP histogram, how many origins have their 90th percentile in that bin. If that sounds confusing, here's a visualization:

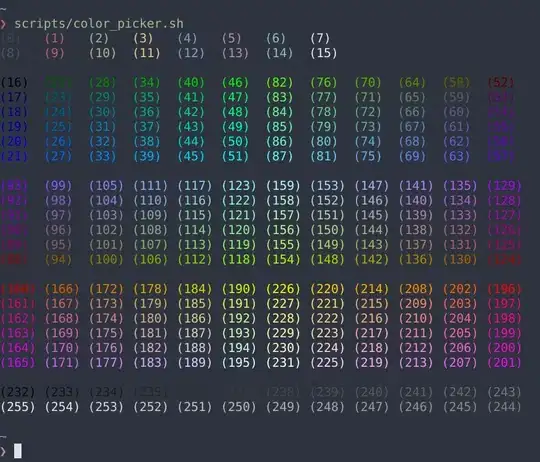

The point where the red cumulative distribution line crosses the 1000 ms mark tells us the percentage of origins that would be labelled as fast. It isn't very many: just 2%, or 110153 origins in the dataset.
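If the query logic is still hard to picture, the following Python sketch mirrors it on three made-up origins. Everything here is illustrative: the origin names, the bin values, and the helper `p90_bin_start` are hypothetical, and each origin's bins are assumed to be already summed into a single histogram whose densities add up to 1.

```python
from collections import Counter
from itertools import accumulate


def p90_bin_start(bins):
    """Return the start of the first bin whose cumulative density reaches 0.9.

    Mirrors MIN(start) ... WHERE cdf >= 0.9 in the query above.
    """
    ordered = sorted(bins)
    cdf = accumulate(density for _start, density in ordered)
    for (start, _density), cumulative in zip(ordered, cdf):
        if cumulative >= 0.9:
            return start
    return None  # densities never reached 0.9 (incomplete histogram)


# Hypothetical origins with (start_ms, density) bins.
origins = {
    "https://a.example": [(0, 0.5), (500, 0.4375), (1000, 0.0625)],
    "https://b.example": [(0, 0.25), (500, 0.25), (1000, 0.5)],
    "https://c.example": [(0, 0.125), (500, 0.125), (1000, 0.25), (1500, 0.5)],
}

p90_by_origin = {origin: p90_bin_start(bins) for origin, bins in origins.items()}

# Outer query: how many origins have their p90 in each bin.
origins_per_p90 = Counter(p90_by_origin.values())

# Share of origins whose p90 bin starts below 1000 ms, i.e. "fast" origins.
fast_count = sum(1 for p90 in p90_by_origin.values() if p90 is not None and p90 < 1000)
fast_share = fast_count / len(origins)

print(p90_by_origin)
print(fast_share)
```

Only the first toy origin has its 90th percentile in a bin that starts below 1000 ms, which is the same reading the cumulative line gives for the real dataset.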

Anecdotally, browsing through the list of "fast FCP" origins, many of them have .jp and .kr TLDs. It's reasonable to assume they are localized Japanese and Korean websites whose users are almost entirely from those countries. And these are countries with fast internet speeds. So naturally it'd be easier to serve a fast website 90+% of the time when your users have consistently fast connection speeds.

Another thing we can do to get a sense of origin popularity is join it with the Alexa Top 1 Million Domains list:

    #standardSQL
    SELECT
      Alexa_rank,
      Alexa_domain,
      COUNT(0) AS numOrigins,
      ARRAY_AGG(origin LIMIT 3) AS originSample
    FROM (
      SELECT
        origin,
        MIN(start) AS p90
      FROM (
        SELECT
          origin,
          bin.start,
          SUM(bin.density) OVER (PARTITION BY origin ORDER BY bin.start) AS cdf
        FROM
          `chrome-ux-report.all.201901`,
          UNNEST(first_contentful_paint.histogram.bin) AS bin)
      WHERE
        cdf >= 0.9
      GROUP BY
        origin)
    JOIN
      `httparchive.urls.20170315`
    ON
      NET.REG_DOMAIN(origin) = Alexa_domain
    WHERE
      p90 < 1000
    GROUP BY
      Alexa_rank,
      Alexa_domain
    ORDER BY
      Alexa_rank

There are 35985 origins whose domains are in the top 1M. You can run the query for yourself to see the full results.

You can see that there are ~100 origins on the top 20 domains that qualify as fast for FCP. Cherry-picking some interesting examples further down the list:

Big caveat that these origins are not necessarily top ranked, just their domains. Without having origin ranking data this is the best approximation I can do.

Lesser caveat that BigQuery and PSI are slightly different datasets and PSI segments by desktop/mobile while my analysis combines them together. So this research is not a perfect representation of what to expect on PSI.
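To see why combining desktop and mobile can change the outcome, here is a small Python sketch on invented numbers. It assumes the histogram densities for an origin sum to 1 across all form factors, so each segment is renormalized before taking its own 90th percentile. The rows and the helper `p90_start` are hypothetical, and this is not how PSI computes its labels.

```python
from collections import defaultdict


def p90_start(density_by_start):
    """Start of the first bin where the renormalized cumulative density reaches 0.9."""
    total = sum(density_by_start.values())
    if total == 0:
        return None
    cumulative = 0.0
    for start in sorted(density_by_start):
        cumulative += density_by_start[start] / total
        if cumulative >= 0.9:
            return start
    return None


# Hypothetical rows for one origin: (form_factor, start_ms, density).
rows = [
    ("desktop", 0, 0.25),
    ("desktop", 500, 0.25),
    ("phone", 0, 0.125),
    ("phone", 500, 0.125),
    ("phone", 1000, 0.25),
]

combined = defaultdict(float)
per_form_factor = defaultdict(lambda: defaultdict(float))
for form_factor, start, density in rows:
    combined[start] += density
    per_form_factor[form_factor][start] += density

combined_p90 = p90_start(combined)
segmented_p90 = {ff: p90_start(bins) for ff, bins in per_form_factor.items()}

print(combined_p90)
print(segmented_p90)
```

With these numbers the desktop segment alone would count as fast, while the phone segment and the combined histogram would not, which is the kind of discrepancy to expect between this analysis and PSI.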

Finally, I just want to address something else that was in the question about getting 100 scores in Lighthouse. A score of 100 doesn't necessarily mean that there isn't anything left to improve. Synthetic tests like that need to be calibrated to be representative of the actual user experience. So, for example, the performance audits might start failing if tested under conditions representative of user experiences in the Philippines. Actually running the test from that location might turn up performance problems, e.g. content distribution issues, in addition to the conditions that we could simulate anywhere, like connection speed.

To summarize everything:

- The bar is set high because we want to ensure that a vast majority of user experiences are fast

- Many websites are already exceeding this bar, albeit a small proportion of the overall dataset

- The Alexa ranking shows us that it's possible to have a heavily trafficked website and also provide consistently fast experiences