I am currently working on a project that requires the contents of the SceneView (the camera image along with the AR objects for each frame) as a byte array, so that the data can be streamed.

I have tried to mirror the SceneView to a MediaCodec encoder's input surface and to consume the MediaCodec's output buffers asynchronously, based on what I understood from the MediaRecorder sample.

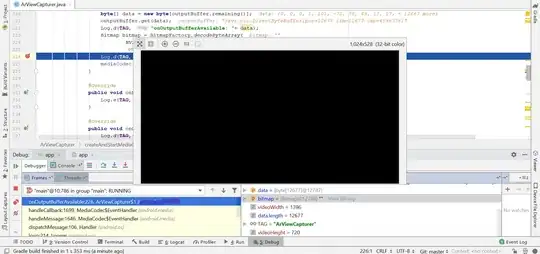

I have been unable to get it to work as expected. The output buffer from the MediaCodec callback either shows a black screen (when converted to a bitmap) or contains far too little data (fewer than 100 bytes).
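One thing worth checking is what the encoder is actually emitting. An AVC encoder's output buffers hold compressed H.264 data rather than raw NV21 pixels, and the first output buffer, flagged with `MediaCodec.BUFFER_FLAG_CODEC_CONFIG`, typically carries only the SPS/PPS parameter sets, which are usually just a few dozen bytes. That would be consistent with the sub-100-byte buffers described above. The sketch below is plain Java with no Android dependencies; it assumes the buffers use the Annex B byte-stream format (`00 00 01` or `00 00 00 01` start codes), and the class and method names (`NalInspector`, `nalTypes`, `describe`) are hypothetical. It lists the NAL unit types found in a buffer so that a config buffer (types 7 and 8) can be told apart from an IDR frame (type 5).

```java
import java.util.ArrayList;
import java.util.List;

public class NalInspector {

    /** Returns the nal_unit_type of every NAL unit that follows an Annex B start code. */
    public static List<Integer> nalTypes(byte[] data) {
        List<Integer> types = new ArrayList<>();
        int i = 0;
        // A 3-byte start code (00 00 01) also matches the tail of a 4-byte one (00 00 00 01).
        while (i + 3 < data.length) {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
                // nal_unit_type is the low 5 bits of the first byte after the start code.
                types.add(data[i + 3] & 0x1F);
                i += 3;
            } else {
                i++;
            }
        }
        return types;
    }

    /** Human-readable names for the NAL types that matter here. */
    public static String describe(int type) {
        switch (type) {
            case 1: return "non-IDR slice";
            case 5: return "IDR slice";
            case 6: return "SEI";
            case 7: return "SPS";
            case 8: return "PPS";
            default: return "type " + type;
        }
    }

    public static void main(String[] args) {
        // Hypothetical config buffer: an SPS and a PPS, each preceded by a 4-byte start code.
        byte[] config = {0, 0, 0, 1, 0x67, 0x42, 0x00, 0x1F,
                         0, 0, 0, 1, 0x68, (byte) 0xCE, 0x3C, (byte) 0x80};
        for (int type : nalTypes(config)) {
            System.out.println(describe(type));
        }
    }
}
```

Running `main` prints `SPS` and then `PPS`. Inside the MediaCodec callback, the config case can also be detected directly with `(info.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0`. Because these bytes are compressed, passing them to an NV21-to-JPEG conversion would not be expected to yield a valid image.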

I suspect the mirroring to the surface is not happening as I expect. It would be much appreciated if someone could provide a sample that shows the proper way to use MediaCodec with Sceneform.

This is the code I am currently using to access the ByteBuffer from MediaCodec:

```java
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.util.Log;
import android.view.Surface;
import androidx.annotation.NonNull;
import java.nio.ByteBuffer;

MediaFormat format = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC,
        arFragment.getArSceneView().getWidth(),
        arFragment.getArSceneView().getHeight());

// Set some properties to prevent configure() from throwing an unhelpful exception.
format.setInteger(MediaFormat.KEY_COLOR_FORMAT,
        MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);
format.setInteger(MediaFormat.KEY_BIT_RATE, 50000);
format.setInteger(MediaFormat.KEY_FRAME_RATE, 30);
format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 0); // All-key-frame stream

MediaCodec mediaCodec = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);

mediaCodec.setCallback(new MediaCodec.Callback() {
    @Override
    public void onInputBufferAvailable(@NonNull MediaCodec codec, int index) {
        // Unused: frames arrive through the input Surface, not through input buffers.
    }

    @Override
    public void onOutputBufferAvailable(@NonNull MediaCodec codec, int index,
                                        @NonNull MediaCodec.BufferInfo info) {
        ByteBuffer outputBuffer = codec.getOutputBuffer(index);

        // Restrict the buffer to the valid region reported in BufferInfo.
        outputBuffer.position(info.offset);
        outputBuffer.limit(info.offset + info.size);

        byte[] data = new byte[outputBuffer.remaining()];
        outputBuffer.get(data);

        // Convert the bytes to JPEG, then decode the JPEG (using its own length) into a Bitmap.
        byte[] jpeg = NV21toJPEG(data, videoWidth, videoHeight, 100);
        Bitmap bitmap = BitmapFactory.decodeByteArray(jpeg, 0, jpeg.length);
        Log.d(TAG, "onOutputBufferAvailable: " + bitmap);

        codec.releaseOutputBuffer(index, false);
    }

    @Override
    public void onError(@NonNull MediaCodec codec, @NonNull MediaCodec.CodecException e) {
        Log.e(TAG, "onError: ", e);
    }

    @Override
    public void onOutputFormatChanged(@NonNull MediaCodec codec, @NonNull MediaFormat format) {
        Log.d(TAG, "onOutputFormatChanged: " + format);
    }
});

// The callback is set before configure() so the codec runs in asynchronous mode.
mediaCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

// createInputSurface() must be called after configure() and before start().
Surface surface = mediaCodec.createInputSurface();
mediaCodec.start();

// Render every SceneView frame into the encoder's input Surface.
arFragment.getArSceneView().startMirroringToSurface(surface, 0, 0,
        arFragment.getArSceneView().getWidth(),
        arFragment.getArSceneView().getHeight());
```
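Two of the values passed to `MediaFormat` above are worth sanity-checking. At `KEY_BIT_RATE` = 50000 bits/s and `KEY_FRAME_RATE` = 30, the encoder's average budget is only about 208 bytes per frame, and with `KEY_I_FRAME_INTERVAL` = 0 every one of those frames is requested as a key frame. Separately, the SceneView's width and height are used directly as the video size, while encoders may impose alignment requirements on those dimensions; on Android these can be queried with `MediaCodecInfo.VideoCapabilities.getWidthAlignment()` and `getHeightAlignment()`. The plain-Java sketch below (hypothetical class `EncoderMath`) computes the per-frame budget and rounds a dimension down to a given alignment. The view size of 1081 x 1793 and the alignment of 2 in the example are assumptions for illustration, not values read from a device.

```java
import java.util.Locale;

public class EncoderMath {

    /** Average number of bytes the encoder may spend per frame at the given bit rate. */
    public static double bytesPerFrame(int bitRateBps, int frameRate) {
        return bitRateBps / 8.0 / frameRate;
    }

    /** Rounds a dimension down to the nearest multiple of alignment (alignment must be > 0). */
    public static int alignDown(int value, int alignment) {
        return value - (value % alignment);
    }

    public static void main(String[] args) {
        // Bit rate and frame rate taken from the MediaFormat configuration above.
        System.out.printf(Locale.ROOT, "%.1f bytes/frame%n", bytesPerFrame(50000, 30));
        // Hypothetical odd view size, aligned to an assumed requirement of 2 pixels.
        System.out.println(alignDown(1081, 2) + " x " + alignDown(1793, 2));
    }
}
```

Running `main` prints `208.3 bytes/frame` followed by `1080 x 1792`. If the size is aligned this way, the same aligned width and height would need to be passed to both `createVideoFormat()` and `startMirroringToSurface()` so that the two stay consistent.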

The generated bitmap: