I'm running a nested loop via using %dopar% to generate dummy dataset for experience purpose.

References link: R nested foreach %dopar% in outer loop and %do% in inner loop

sample dataset

set.seed(123)

n = 10000 #number of unique IDs (10k as trial) , real data consits of 50k unique IDs

ID <- paste(LETTERS[1:8],sample(n),sep = "")

year <- c('2015','2016','2017','2018')

month <- c('1','2','3','4','5','6','7','8','9','10','11','12')

pre-defined library

library(foreach)

library(data.table)

library(doParallel)

# parallel processing setting

cl <- makeCluster(detectCores() - 1)

registerDoParallel(cl)

Test 1: %dopar% script

system.time(

output_table <- foreach(i = seq_along(ID), .combine=rbind, .packages="data.table") %:%

foreach(j = seq_along(year), .combine=rbind, .packages="data.table") %:%

foreach(k = seq_along(month), .combine=rbind, .packages="data.table") %dopar% {

data.table::data.table(

mbr_code = ID[i],

year = year[j],

month = month[k]

)

}

)

stopCluster(cl)

#---------#

# runtime #

#---------#

> user system elapsed

> 1043.31 66.83 1171.08

Test 2: %do% script

system.time(

output_table <- foreach(i = seq_along(ID), .combine=rbind, .packages="data.table") %:%

foreach(j = seq_along(year), .combine=rbind, .packages="data.table") %:%

foreach(k = seq_along(month), .combine=rbind, .packages="data.table") %do% {

data.table::data.table(

mbr_code = ID[i],

year = year[j],

month = month[k]

)

}

)

stopCluster(cl)

#---------#

# runtime #

#---------#

> user system elapsed

> 1101.85 1.02 1110.55

Expected output results

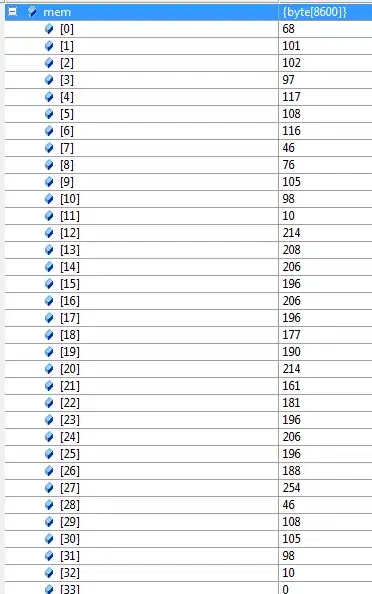

> view(output_table)

Problem

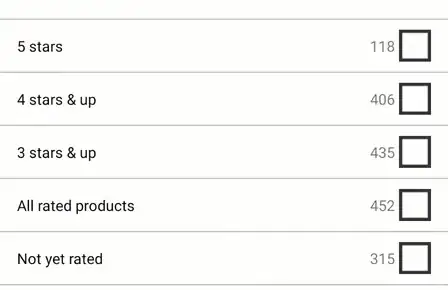

when i run on %dopar% i did monitor my machine's CPU performance using Resource Monitor and i noticed the CPUs are not fully utilised.

Question

I did try to run above script (test1 and test2) on my machine i5, 4 cores. But it seems like the run time for both %do% and %dopar% are closed to each other. It's my script design issue? My real data consists of 50k unique IDs, meaning will took very long time if running in %do%, how can i fully utilised my machine CPUs to reduce run time?