I'm trying to process an XML file that claims (correctly, I believe) to be encoded in 7-bit ASCII, but its text values include character entities like `&#215;` that resolve to non-ASCII Unicode characters.

The problem, I think, is that the processor (the version of Xalan bundled with Treebeard) is resolving the character entities and turning them into gobbledegook before the XSLT stylesheet even gets to touch the content.

I've put together a stripped-down test case below.

XML Input Data

    <?xml version="1.0" encoding="ascii"?>
    <root>
      <unit Code="[Btu_39]" CODE="[BTU_39]" isMetric="no" class="heat">
        <name>British thermal unit at 39 &#176;F</name>
        <printSymbol>Btu<sub>39&#176;F</sub>
        </printSymbol>
        <property>energy</property>
        <value Unit="kJ" UNIT="kJ" value="1.05967">1.05967</value>
      </unit>
    </root>

XSLT Stylesheet

    <xsl:stylesheet
        version="1.0"
        xmlns:xsl="http://www.w3.org/1999/XSL/Transform">

      <xsl:output method="text" encoding="utf-8" />

      <xsl:variable name="sep" select='";"' />
      <xsl:variable name="mult" select='"×"' />
      <xsl:variable name="crlf" select='"&#13;&#10;"' />
      <xsl:variable name="lf" select='"&#10;"' />

      <xsl:strip-space elements="name printSymbol value" />

      <xsl:template match="/root">
        <xsl:apply-templates select="*" />
      </xsl:template>

      <xsl:template match="/root/*">
        Type: <xsl:value-of select="name()" />
        Code: <xsl:value-of select="@Code" />
        CODE: <xsl:value-of select="@CODE" />
        Description: <xsl:apply-templates select="name" />
        Print: <xsl:apply-templates select="printSymbol" />
        Property: <xsl:apply-templates select="property" />
        Value: <xsl:apply-templates select="value" />
        <xsl:value-of select="$lf" />
      </xsl:template>

      <xsl:template match="name|printSymbol|property">
        <xsl:value-of select="text()" />
      </xsl:template>

      <xsl:template match="value">
        <xsl:value-of select="concat(@value, $mult, @Unit, $lf)" />
      </xsl:template>

    </xsl:stylesheet>

Output (note that the degree symbols, for deg. F, are mangled)

    Type: unit
    Code: [Btu_39]
    CODE: [BTU_39]
    Description: British thermal unit at 39 Â°F
    Print: Btu
    Property: energy
    Value: 1.05967×kJ

I've found similar questions to this in the programming sections, and one answer has been to pre-process the input file to escape or encode the character entities. In this case, though, I'm using XSLT "bare" with no other language involved, so a pure XSLT solution is really what I need.

Ideas or links to answers much appreciated.

--UPDATE-- My initial guess was wrong. Some experimenting with other output formats shows that (for example) with the output method set to HTML, the problem characters are output as HTML entities. This indicates that the characters are making it into the transformation unmangled, so I assume the output processing must be causing the problem.
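The point that the references are already resolved to real characters before any stylesheet logic runs can be checked outside XSLT. Here is a minimal Java sketch, assuming the JDK's built-in JAXP parser. The class and method names are made up for illustration, and the encoding label `US-ASCII` is used in place of the test file's `ascii`:

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;

class EntityResolutionDemo {
    // Parse a pure-ASCII XML document and return the root element's text.
    // The parser resolves numeric character references while parsing.
    static String parseText(String xml) {
        try {
            byte[] asciiBytes = xml.getBytes(StandardCharsets.US_ASCII);
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(asciiBytes));
            return doc.getDocumentElement().getTextContent();
        } catch (Exception e) {
            throw new IllegalStateException("XML parsing failed", e);
        }
    }

    public static void main(String[] args) {
        // Hypothetical one-element input modelled on the <name> element.
        String xml = "<?xml version=\"1.0\" encoding=\"US-ASCII\"?>"
                + "<name>39 &#176;F</name>";
        String text = parseText(xml);
        // Print code points instead of the text itself, so the console
        // encoding cannot distort what we see.
        for (int i = 0; i < text.length(); i++) {
            System.out.printf("U+%04X ", (int) text.charAt(i));
        }
        System.out.println();
    }
}
```

The degree sign shows up as the single character U+00B0 in the parsed text, which matches the observation that the input side is fine and any mangling has to happen after the transformation.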

As requested I have taken a small chunk of the text (the "at 39 °F" part of the name element) and dumped the hex of the input and output strings.

--UPDATE-- Some digging showed that:

- The original tool (Treebeard) was transforming the output to UTF-8, but then: (a) Displaying it incorrectly (I think as cp1252); (b) Transforming the output to cp1252 when writing the output to a file.

- The second tool (Simple XSLT Transform) was displaying the UTF-8 output correctly on-screen, but still transforming it to cp1252 when writing to disk.

- A thread on this site confirmed that Java picks up a default file encoding when it starts up. As both tools are written in Java this was causing the file output problem.
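The cp1252 mix-up described in the first bullet can be reproduced in a few lines. This is only an illustrative sketch (the class and method names are made up, and it assumes the JVM ships the `windows-1252` charset, which mainstream JDKs do): it encodes the sample text as UTF-8 and then deliberately decodes the same bytes as cp1252.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class MojibakeDemo {
    // Space-separated uppercase hex dump of a byte array.
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) {
                sb.append(' ');
            }
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    // Encode as UTF-8, then (wrongly) decode those bytes as windows-1252.
    static String misdecode(String s) {
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);
        return new String(utf8, Charset.forName("windows-1252"));
    }

    public static void main(String[] args) {
        String original = "39 \u00B0F"; // "39", space, degree sign, "F"
        // The degree sign becomes the two bytes C2 B0 in UTF-8.
        System.out.println(hex(original.getBytes(StandardCharsets.UTF_8)));
        // Read as cp1252, C2 is A-circumflex and B0 is the degree sign,
        // so one character turns into two.
        String garbled = misdecode(original);
        for (int i = 0; i < garbled.length(); i++) {
            System.out.printf("U+%04X ", (int) garbled.charAt(i));
        }
        System.out.println();
    }
}
```

The extra U+00C2 in front of the degree sign is exactly the stray "Â" in the mangled Description line, which is consistent with correct UTF-8 output being interpreted as cp1252 somewhere downstream.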

I followed the recommendation in that thread to set up a Windows environment variable as follows: JAVA_TOOL_OPTIONS = -Dfile.encoding=UTF8
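For anyone who controls the Java code rather than just the environment, the same dependency on the default charset can be avoided by naming the encoding when the file is written. The following is a hedged sketch, not what Treebeard or Simple XSLT Transform actually do (their source was not inspected), and the class and method names are hypothetical:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

class ExplicitCharsetDemo {
    // Write text to a temporary file with an explicit UTF-8 writer and
    // return the bytes that ended up on disk.
    static byte[] writeAsUtf8(String text) {
        try {
            Path tmp = Files.createTempFile("xslt-out", ".txt");
            try (Writer w = Files.newBufferedWriter(tmp, StandardCharsets.UTF_8)) {
                w.write(text);
            }
            byte[] bytes = Files.readAllBytes(tmp);
            Files.delete(tmp);
            return bytes;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // What a writer created without an explicit charset would use.
        // -Dfile.encoding (for example via JAVA_TOOL_OPTIONS) influences this.
        System.out.println("Default charset: " + Charset.defaultCharset());
        byte[] bytes = writeAsUtf8("39 \u00B0F");
        // With an explicit UTF-8 writer the result does not depend on
        // the default: six bytes, with the degree sign stored as C2 B0.
        System.out.println("Bytes written: " + bytes.length);
    }
}
```

Note that from JDK 18 onward the default charset is UTF-8 on all platforms, so the environment variable mainly matters for tools running on older Java versions.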

Success!

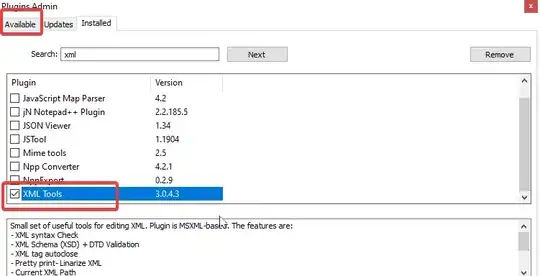

The file is now written as UTF-8 and can be opened successfully in either Notepad++ or Excel (Power Query). You have to manually set the "cp65001" code page in Power Query, but it works.

Thank you to those who replied, you helped get me on the right track.