I need some advice on how to diagnose slow Elasticseach queries.

Setup

- 1 node cluster in ElasticCloud (1 primary shard, 0 replicas). note: ElasticCloud = no slowlog. (also yep, i know i should have more nodes.. but this is just DEV)

- Interacting with cluster via my Azure .NET Web App, using the NEST library

Behaviour

- Most response times for my web server are 50-80ms

- All query times in ES (e.g took) are < 5ms.

- Network latency between my web server and ElasticCloud is about 15ms

Problem - Sometimes, the response times jump between 100-200ms, but the took is still 1ms. I was able to replicate this behaviour on local too (using ElasticSearch docker).

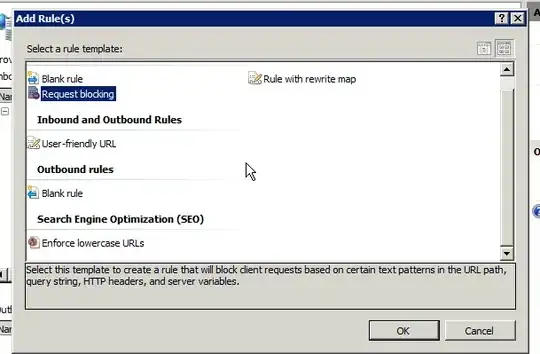

Here's a trace from Fiddler i captured, which is the call to Elasticsearch from my app:

ClientConnected: 17:28:44.325

ClientBeginRequest: 17:34:34.953

GotRequestHeaders: 17:34:34.953

ClientDoneRequest: 17:34:34.953

Determine Gateway: 0ms

DNS Lookup: 0ms

TCP/IP Connect: 0ms

HTTPS Handshake: 0ms

ServerConnected: 17:34:27.538

FiddlerBeginRequest: 17:34:34.953

ServerGotRequest: 17:34:34.953

ServerBeginResponse: 17:34:35.171

GotResponseHeaders: 17:34:35.171

ServerDoneResponse: 17:34:35.172

ClientBeginResponse: 17:34:35.172

ClientDoneResponse: 17:34:35.178

So, above is saying the Elasticsearch cluster took 218ms to process the request. However the took is 1ms.

How can i track this slow request down? Clearly it's not the query speed (since took is low), so it must be something in the cluster.

Any advice?

EDIT

Here is some data from Kibana, during a 15 min load test i did:

So.. based on my noob-ish analysis nothing looks bad there.

- Search latency / latency is quick (< 5ms)

- JVM heap seems fine

- CPU fine

- No excessive GC

Yet here's what i see from my load testing tool:

Here's the stats from my perf monitoring tool. You can clearly see the spikes and the slow outliers:

Not sure where else to go from here? Is there some other metric i should be looking for?