I'm trying to use the qark analyzer to analyze a set of APKs in parallel, using multiprocessing in Python.

While trying to analyze a set of 100 APKs, I found that the application I wrote to automate the analysis is VERY SLOW. The last analysis I ran had been executing for about 20 hours when I manually turned off my PC, as it had become unusable, probably due to heavy RAM usage. The analysis was even harmful: it messed up my Windows partition, so I can no longer see the data inside it and Windows no longer boots (I run the analysis from Ubuntu, but inside my Windows partition because that is where I have free disk space).

The core of the class executed in each process is very similar to this:

    def scanApk(self):
        try:
            # Creating a directory for qark build files (decompiled sources etc...)
            buildDirectoryPath = os.path.join(os.path.join(self.APKANALYSIS_ROOT_DIRECTORY, "qarkApkBuilds"), "build_" + self.apkInfo["package_name"])
            os.mkdir(buildDirectoryPath)

            start = timer()
            subp = subprocess.Popen(self.binPath + "/qark --report-type json --apk \"" + self.apkPath + "\"", cwd=buildDirectoryPath, shell=True, stdout=subprocess.PIPE, stderr=subprocess.PIPE,
                                    preexec_fn=os.setsid)

            # Setting a timeout of 6 hours for the analysis
            out, err = subp.communicate(timeout= 6 * (60 * 60))
            self.saveOutAndErr(out, err)
            if subp.returncode != 0:
                raise subprocess.CalledProcessError(subp.returncode, "qark")
            self.printAnalysisLasting(start)

            # Moving qark report into qark reports collecting directory
            subp = subprocess.Popen("mv \"" + self.defaultReportsPath + "/" + self.apkInfo["package_name"] + ".json\" " + "\"" + self.toolReportsDirectory + "\"", shell=True)
            out, err = subp.communicate()
            if subp.returncode != 0:
                raise subprocess.CalledProcessError(subp.returncode, "qark")

            return True
        [... subprocess.TimeoutExpired and subprocess.CalledProcessError exceptions handling...]
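
The exception handlers are omitted above. For context, here is a minimal, self-contained sketch of the timeout pattern this method relies on. It is an illustration under assumptions, not the exact handler code: the helper name `run_with_timeout` and the placeholder command are hypothetical, and it assumes that on timeout the whole process group created by `os.setsid` should be killed. `start_new_session=True` is the `Popen` argument equivalent to `preexec_fn=os.setsid`. The sketch is POSIX-only because of `os.killpg`.

```python
import os
import signal
import subprocess
import sys


def run_with_timeout(cmd, timeout_s):
    """Run cmd in its own session and kill its whole process group on timeout.

    Returns (returncode, stdout, stderr); returncode is None if the
    command timed out and had to be killed.
    """
    proc = subprocess.Popen(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        start_new_session=True,  # same effect as preexec_fn=os.setsid
    )
    try:
        out, err = proc.communicate(timeout=timeout_s)
    except subprocess.TimeoutExpired:
        # communicate() does not kill the child on timeout, so do it here.
        # Killing the group also terminates anything the child spawned.
        os.killpg(os.getpgid(proc.pid), signal.SIGKILL)
        out, err = proc.communicate()  # reap the process, collect partial output
        return None, out, err
    return proc.returncode, out, err


if __name__ == "__main__":
    # Placeholder command standing in for the qark invocation.
    slow = [sys.executable, "-c", "import time; time.sleep(30)"]
    code, out, err = run_with_timeout(slow, timeout_s=1)
    print("timed out" if code is None else "exit code %d" % code)
```

Without the explicit kill, a child that exceeds the timeout keeps running (and keeps its memory) after `TimeoutExpired` is raised.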

I use the class in multiprocessing using concurrent.futures' ProcessPoolExecutor like this (scanApk method is called inside analyzeApk method):

    with concurrent.futures.ProcessPoolExecutor(max_workers = 10) as executor:
        futuresList = []

        # Submitting tasks to ProcessPoolExecutor
        for apkPath in apksToAnalyzePaths:
            ...
            qarkAnalyzer = QarkAnalyzer(...)
            futuresList.append(executor.submit(qarkAnalyzer.analyzeApk))

        for future in futuresList:
            future.result()
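
To make the pattern easier to reproduce without qark, here is a minimal, self-contained sketch of the same submit-and-collect structure. Several things in it are assumptions: `analyze` and `run_all` are hypothetical names, the command is a placeholder that only echoes its argument, and it uses `ThreadPoolExecutor` rather than `ProcessPoolExecutor` (same `submit`/`result` interface), since each task here only waits on an external process.

```python
import concurrent.futures
import subprocess
import sys


def analyze(path):
    """Hypothetical stand-in for QarkAnalyzer.analyzeApk: runs one external command."""
    # Placeholder command: a child Python process that prints its argument.
    cmd = [sys.executable, "-c", "import sys; print(sys.argv[1])", path]
    completed = subprocess.run(cmd, capture_output=True, text=True, timeout=60)
    return completed.returncode, completed.stdout.strip()


def run_all(paths, max_workers=2):
    """Submit one task per path and collect results (or exceptions) by path."""
    results = {}
    with concurrent.futures.ThreadPoolExecutor(max_workers=max_workers) as executor:
        futures = {executor.submit(analyze, p): p for p in paths}
        for future in concurrent.futures.as_completed(futures):
            path = futures[future]
            try:
                results[path] = future.result()
            except Exception as exc:  # keep one failure from hiding the others
                results[path] = exc
    return results


if __name__ == "__main__":
    for path, result in sorted(run_all(["a.apk", "b.apk", "c.apk"]).items()):
        print(path, result)
```

With `max_workers` set to N, at most N external commands run at the same time, so peak memory is roughly N times what a single analysis needs.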

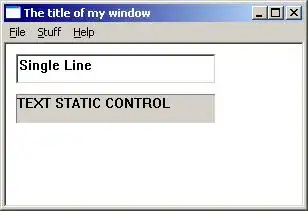

This, instead, is a snapshot of the processes status during an analysis of 2 apks showed by htop:

I tested the application on 2 APKs and it seemed to behave well. Each qark analysis took longer than when I ran it on the same APK alone, but I attributed that to multiprocessing and, since the increase was small, I thought it was acceptable. With 100 APKs, however, the run was a disaster.

Can someone help me find out what is happening here? Why is the analysis so slow? How could it mess up my Windows partition? Is the RAM load too heavy for an analysis of this many APKs? Is it due to improper use of processes in my application? How can I do this properly?