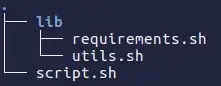

I have this code:

    def somefunc(self):
        ...
        if self.mynums >= len(self.totalnums):
            if 1 == 1: return self.crawlSubLinks()
        for num in self.nums:
            if 'hello' not in num: continue
            if 0 == 1:
                # Even though this is never reached, when using yield, the crawler stops execution after the return statement at the end.
                # When using return instead of yield, the execution continues as expected - why?
                print("in it!")
                yield SplashRequest(numfunc['asx'], self.xo, endpoint='execute', args={'lua_source': self.scripts['xoscript']})

    def crawlSubLinks(self):
        self.start_time = timer()
        print("IN CRAWL SUB LINKS")
        for link in self.numLinks:
            yield scrapy.Request(link, callback=self.examinenum, dont_filter=True)

As you can see, the SplashRequest is never reached, so its implementation does not matter here. The goal is to keep sending requests by returning self.crawlSubLinks(). Now here is the problem:

When I use return before the SplashRequest that is never reached, the crawler continues as expected and processes the new requests from crawlSubLinks. However, when I use yield before that same unreachable SplashRequest, the crawler stops after the return statement! Whether I use yield or return on a line that is never executed should not matter at all, right?
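A stripped-down version without Scrapy appears to show the same thing, which suggests it is plain Python behavior rather than anything in the crawler. This is only a sketch: sub_links, with_return and with_yield are made-up stand-ins for crawlSubLinks and the two variants of somefunc, and the strings stand in for requests.

```python
def sub_links():
    # Stand-in for crawlSubLinks: yields two fake "requests".
    yield "request-1"
    yield "request-2"


def with_return(flag):
    # No yield anywhere in the body, so this is an ordinary function
    # and the return hands the sub-generator back to the caller.
    if flag:
        return sub_links()
    return []


def with_yield(flag):
    # The yield below is unreachable, but its mere presence makes this
    # a generator function. The return value is then attached to
    # StopIteration instead of being produced as output.
    if flag:
        return sub_links()
    if False:
        yield "never reached"


print(list(with_return(True)))  # ['request-1', 'request-2']
print(list(with_yield(True)))   # []
```

So whoever iterates over the result of with_yield gets nothing at all, which would match the crawler stopping after the return.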

Why is this? I have been told that this has to do purely with how Python behaves. But how can I then have a yield statement within the for loop, while still returning in the if statement above the for loop, and not return a generator?
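One direction that might work, assuming Python 3.3 or later, is to delegate with yield from and then use a bare return, so the function stays a generator in every branch. This is a sketch with hypothetical names (sub_links, parse) and strings in place of requests; I have not confirmed it against Scrapy itself.

```python
def sub_links():
    # Stand-in for crawlSubLinks.
    yield "request-1"
    yield "request-2"


def parse(nums, done):
    if done:
        # Re-yield everything the sub-generator produces,
        # then end this generator with a bare return.
        yield from sub_links()
        return
    for num in nums:
        if "hello" not in num:
            continue
        # Stand-in for the SplashRequest in the original loop.
        yield "splash:" + num


nums = ["hello-a", "skip", "hello-b"]
print(list(parse(nums, done=True)))   # ['request-1', 'request-2']
print(list(parse(nums, done=False)))  # ['splash:hello-a', 'splash:hello-b']
```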