I'm trying to encode RGBA buffers captured from an image source (desktop or camera) into raw H264 using Windows Media Foundation, transfer them, and decode the raw H264 frames received at the other end in real time. I'm trying to achieve at least 30 fps. The encoder works pretty well, but the decoder does not.

I understand that Microsoft's WMF MFTs buffer up to 30 frames before emitting the encoded/decoded data.

The image source emits frames only when a change occurs, not as a continuous stream of RGBA buffers. My intention is therefore to obtain a buffer of encoded/decoded data for every input buffer given to the respective MFT, so that I can stream the data in real time and also render it.

Both the encoder and decoder are able to emit at least 10 to 15 fps when I make the image source send continuous changes (by simulating the changes). The encoder is able to use hardware acceleration. I'm able to achieve up to 30 fps at the encoder end, and I have yet to implement hardware-assisted decoding using DirectX surfaces. The problem here is not the frame rate but the buffering of data by the MFTs.

So I tried to drain the decoder MFT by sending the MFT_MESSAGE_COMMAND_DRAIN command and repeatedly calling ProcessOutput until the decoder returns MF_E_TRANSFORM_NEED_MORE_INPUT. What happens now is that the decoder emits only one frame per 30 input H264 buffers. I tested it even with a continuous stream of data, and the behavior is the same. It looks like the decoder drops all the intermediate frames in a GOP.

It's okay for me if it buffers only the first few frames, but my decoder implementation produces output only when its buffer is full, every time, even after the SPS and PPS parsing phase.
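The per-input pull pattern described above can be sketched without Media Foundation at all. The `ToyTransform` class below is purely hypothetical: it models a transform that holds back a fixed number of frames before releasing any output, which is only an assumption about how a buffering codec behaves and not a description of the real H.264 MFT. The sketch shows why output lags input by the latency depth, and that one output per input is only possible once that depth is zero or the pipeline is primed.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical stand-in for a buffering transform (NOT the real H.264 MFT).
// It holds `latency` frames back before it releases any output.
class ToyTransform {
public:
    explicit ToyTransform(std::size_t latency) : latency_(latency) {}

    // Analogous to ProcessInput: queue one frame id.
    void processInput(int frameId) { queue_.push_back(frameId); }

    // Analogous to ProcessOutput: returns false when the transform
    // "needs more input", otherwise hands out the oldest queued frame.
    bool processOutput(int& frameId) {
        if (queue_.size() <= latency_) return false;
        frameId = queue_.front();
        queue_.pop_front();
        return true;
    }

private:
    std::size_t latency_;
    std::deque<int> queue_;
};

// Feed `count` frames, pulling all available output after every input.
std::vector<int> feedAndPull(ToyTransform& transform, int count) {
    assert(count >= 0);
    std::vector<int> produced;
    for (int i = 0; i < count; ++i) {
        transform.processInput(i);
        int frameId = 0;
        while (transform.processOutput(frameId)) {
            produced.push_back(frameId);
        }
    }
    return produced;
}
```

With a latency of 0, `feedAndPull` yields one frame per input. With a latency of 2, five inputs yield only frames 0 to 2, and the last two stay queued until more input arrives.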

I came across Google's Chromium source code (https://github.com/adobe/chromium/blob/master/content/common/gpu/media/dxva_video_decode_accelerator.cc), which follows the same approach:

mpDecoder->ProcessMessage(MFT_MESSAGE_COMMAND_DRAIN, NULL);

My implementation is based on https://github.com/GameTechDev/ChatHeads/blob/master/VideoStreaming/EncodeTransform.cpp

and

https://github.com/GameTechDev/ChatHeads/blob/master/VideoStreaming/DecodeTransform.cpp

My questions are: Am I missing something? Is Windows Media Foundation suitable for real-time streaming? Would draining the encoder and decoder work for real-time use cases?

There are only two options for me: make WMF work for the real-time use case, or go with something like Intel's QuickSync. I chose WMF for my POC because it implicitly supports hardware/GPU/software fallbacks in case any of the MFTs is unavailable, and it internally chooses the best available MFT without much coding.

I'm also facing video quality issues, even though the bitrate property is set to 3 Mbps, but that has a lower priority than the buffering problem. I have been banging my head against the keyboard for weeks; this is so hard to fix. Any help would be appreciated.
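To put the 3 Mbps figure in perspective, here is a small arithmetic sketch of the average bit budget per frame and per pixel. The 1920x1080 resolution used in the note below is an assumption for illustration only (the actual stream size is not stated here), and a low budget is at most one possible factor in the quality problem.

```cpp
#include <cassert>
#include <cstdint>

// Average number of bits the encoder can spend on one frame.
double bitsPerFrame(std::uint32_t bitsPerSecond, std::uint32_t framesPerSecond) {
    assert(framesPerSecond > 0);
    return static_cast<double>(bitsPerSecond) / framesPerSecond;
}

// Average number of bits available for each pixel of one frame.
double bitsPerPixel(std::uint32_t bitsPerSecond, std::uint32_t framesPerSecond,
                    std::uint32_t width, std::uint32_t height) {
    assert(width > 0 && height > 0);
    return bitsPerFrame(bitsPerSecond, framesPerSecond) /
           (static_cast<double>(width) * height);
}
```

For example, `bitsPerFrame(3000000, 30)` gives 100000 bits (12.5 KB) per frame, and `bitsPerPixel(3000000, 30, 1920, 1080)` is about 0.048 bits per pixel under the assumed resolution.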

Code:

Encoder setup:

    IMFAttributes* attributes = 0;
    HRESULT hr = MFCreateAttributes(&attributes, 0);
    if (attributes)
    {
        //attributes->SetUINT32(MF_SINK_WRITER_DISABLE_THROTTLING, TRUE);
        attributes->SetGUID(MF_TRANSCODE_CONTAINERTYPE, MFTranscodeContainerType_MPEG4);
    }//end if (attributes)

    // Create the output media type (once; a second MFCreateMediaType call would leak the first object).
    hr = MFCreateMediaType(&pMediaTypeOut);

    // Set the output media type.
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetGUID(MF_MT_MAJOR_TYPE, MFMediaType_Video);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetGUID(MF_MT_SUBTYPE, cVideoEncodingFormat); // MFVideoFormat_H264
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(MF_MT_AVG_BITRATE, VIDEO_BIT_RATE); //18000000
    }
    if (SUCCEEDED(hr))
    {
        hr = MFSetAttributeRatio(pMediaTypeOut, MF_MT_FRAME_RATE, VIDEO_FPS, 1); // 30
    }
    if (SUCCEEDED(hr))
    {
        hr = MFSetAttributeSize(pMediaTypeOut, MF_MT_FRAME_SIZE, mStreamWidth, mStreamHeight);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(MF_MT_INTERLACE_MODE, MFVideoInterlace_Progressive);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(MF_MT_MPEG2_PROFILE, eAVEncH264VProfile_High);
    }
    if (SUCCEEDED(hr))
    {
        hr = MFSetAttributeRatio(pMediaTypeOut, MF_MT_PIXEL_ASPECT_RATIO, 1, 1);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(MF_MT_MAX_KEYFRAME_SPACING, 16);
    }

    // Note: CODECAPI_* properties are normally set through the encoder's ICodecAPI
    // interface; as plain media type attributes they may have no effect.
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(CODECAPI_AVEncCommonRateControlMode, eAVEncCommonRateControlMode_UnconstrainedVBR);//eAVEncCommonRateControlMode_Quality, eAVEncCommonRateControlMode_CBR);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(CODECAPI_AVEncCommonQuality, 100);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(MF_MT_FIXED_SIZE_SAMPLES, FALSE);
    }
    if (SUCCEEDED(hr))
    {
        BOOL allSamplesIndependent = TRUE;
        hr = pMediaTypeOut->SetUINT32(MF_MT_ALL_SAMPLES_INDEPENDENT, allSamplesIndependent);
    }
    if (SUCCEEDED(hr))
    {
        hr = pMediaTypeOut->SetUINT32(MF_MT_COMPRESSED, TRUE);
    }
    if (SUCCEEDED(hr))
    {
        hr = mpEncoder->SetOutputType(0, pMediaTypeOut, 0);
    }

    // Process the incoming sample. Ignore the timestamp & duration parameters, we just render the data in real-time.
    HRESULT ProcessSample(IMFSample **ppSample, LONGLONG& time, LONGLONG& duration, TransformOutput& oDtn)
    {
        IMFMediaBuffer *buffer = nullptr;
        HRESULT hr = S_FALSE;

        if (ppSample && *ppSample)
        {
            hr = (*ppSample)->ConvertToContiguousBuffer(&buffer);
            if (buffer)
            {
                ::Release(&buffer); // the buffer itself is not needed here; release it to avoid a leak
            }

            if (SUCCEEDED(hr))
            {
                hr = ProcessInput(ppSample);

                // Note: a sample rejected with MF_E_NOTACCEPTING is not resubmitted here.
                if (SUCCEEDED(hr) || hr == MF_E_NOTACCEPTING)
                {
                    //hr = mpDecoder->ProcessMessage(MFT_MESSAGE_COMMAND_DRAIN, NULL);

                    // Pull output until the decoder asks for more input. Any other
                    // failure also ends the loop, so an unexpected error cannot spin forever.
                    do
                    {
                        hr = ProcessOutput(time, duration, oDtn);
                    } while (SUCCEEDED(hr));
                }
            }
        }

        return (hr == MF_E_TRANSFORM_NEED_MORE_INPUT ? (oDtn.numBytes > 0 ? oDtn.returnCode : hr) : hr);
    }

    // Finds and returns the h264 MFT (given in subtype parameter) if available...otherwise fails.
    HRESULT FindDecoder(const GUID& subtype)
    {
        HRESULT hr = S_OK;
        UINT32 count = 0;
        IMFActivate **ppActivate = NULL;
        MFT_REGISTER_TYPE_INFO info = { 0 };
        UINT32 unFlags = MFT_ENUM_FLAG_HARDWARE | MFT_ENUM_FLAG_ASYNCMFT;

        info.guidMajorType = MFMediaType_Video;
        info.guidSubtype = subtype;

        hr = MFTEnumEx(
            MFT_CATEGORY_VIDEO_DECODER,
            unFlags,
            &info,
            NULL,
            &ppActivate,
            &count
        );

        if (SUCCEEDED(hr) && count == 0)
        {
            hr = MF_E_TOPO_CODEC_NOT_FOUND;
        }

        if (SUCCEEDED(hr))
        {
            hr = ppActivate[0]->ActivateObject(IID_PPV_ARGS(&mpDecoder));
        }

        // Release every activation object before freeing the array itself.
        for (UINT32 i = 0; i < count; i++)
        {
            ppActivate[i]->Release();
        }
        CoTaskMemFree(ppActivate);

        return hr;
    }

    // Reconstructs the sample from encoded data
    HRESULT ProcessData(char *ph264Buffer, DWORD bufferLength, LONGLONG& time, LONGLONG& duration, TransformOutput &dtn)
    {
        dtn.numBytes = 0;
        dtn.pData = NULL;
        dtn.returnCode = S_FALSE;

        IMFSample *pSample = NULL;
        IMFMediaBuffer *pMBuffer = NULL;

        // Create a new memory buffer.
        HRESULT hr = MFCreateMemoryBuffer(bufferLength, &pMBuffer);

        // Lock the buffer and copy the encoded frame into it.
        BYTE *pData = NULL;
        if (SUCCEEDED(hr))
            hr = pMBuffer->Lock(&pData, NULL, NULL);

        if (SUCCEEDED(hr))
        {
            // Only touch the buffer when it was created and locked successfully.
            memcpy(pData, ph264Buffer, bufferLength);
            pMBuffer->Unlock();
            hr = pMBuffer->SetCurrentLength(bufferLength);
        }

        // Create a media sample and add the buffer to the sample.
        if (SUCCEEDED(hr))
            hr = MFCreateSample(&pSample);

        if (SUCCEEDED(hr))
            hr = pSample->AddBuffer(pMBuffer);

        LONGLONG sampleTime = time - mStartTime;

        // Set the time stamp and the duration.
        if (SUCCEEDED(hr))
            hr = pSample->SetSampleTime(sampleTime);

        if (SUCCEEDED(hr))
            hr = pSample->SetSampleDuration(duration);

        // Do not feed the decoder if building the sample failed.
        if (SUCCEEDED(hr))
            hr = ProcessSample(&pSample, sampleTime, duration, dtn);

        ::Release(&pSample);
        ::Release(&pMBuffer);

        return hr;
    }

    // Process the output sample for the decoder
    HRESULT ProcessOutput(LONGLONG& time, LONGLONG& duration, TransformOutput& oDtn/*output*/)
    {
        IMFMediaBuffer *pBuffer = NULL;
        DWORD mftOutFlags;
        MFT_OUTPUT_DATA_BUFFER outputDataBuffer;
        IMFSample *pMftOutSample = NULL;
        MFT_OUTPUT_STREAM_INFO streamInfo;

        memset(&outputDataBuffer, 0, sizeof outputDataBuffer);

        HRESULT hr = mpDecoder->GetOutputStatus(&mftOutFlags);
        if (SUCCEEDED(hr))
        {
            hr = mpDecoder->GetOutputStreamInfo(0, &streamInfo);
        }
        if (SUCCEEDED(hr))
        {
            hr = MFCreateSample(&pMftOutSample);
        }
        if (SUCCEEDED(hr))
        {
            hr = MFCreateMemoryBuffer(streamInfo.cbSize, &pBuffer);
        }
        if (SUCCEEDED(hr))
        {
            hr = pMftOutSample->AddBuffer(pBuffer);
        }
        if (SUCCEEDED(hr))
        {
            DWORD dwStatus = 0;
            outputDataBuffer.dwStreamID = 0;
            outputDataBuffer.dwStatus = 0;
            outputDataBuffer.pEvents = NULL;
            outputDataBuffer.pSample = pMftOutSample;

            // Returns MF_E_TRANSFORM_NEED_MORE_INPUT when no frame is ready. Other
            // failures (for example MF_E_TRANSFORM_STREAM_CHANGE) are passed back unchanged.
            hr = mpDecoder->ProcessOutput(0, 1, &outputDataBuffer, &dwStatus);
        }
        if (SUCCEEDED(hr))
        {
            hr = GetDecodedBuffer(outputDataBuffer.pSample, outputDataBuffer, time, duration, oDtn);
        }

        if (pBuffer)
        {
            ::Release(&pBuffer);
        }
        if (pMftOutSample)
        {
            ::Release(&pMftOutSample);
        }

        return hr;
    }

    // Write the decoded sample out
    HRESULT GetDecodedBuffer(IMFSample *pMftOutSample, MFT_OUTPUT_DATA_BUFFER& outputDataBuffer, LONGLONG& time, LONGLONG& duration, TransformOutput& oDtn/*output*/)
    {
        IMFMediaBuffer *pDecodedBuffer = NULL;

        // ToDo: These two lines are not right. Need to work out where to get timestamp and duration from the H264 decoder MFT.
        HRESULT hr = outputDataBuffer.pSample->SetSampleTime(time);
        if (SUCCEEDED(hr))
        {
            hr = outputDataBuffer.pSample->SetSampleDuration(duration);
        }
        if (SUCCEEDED(hr))
        {
            hr = pMftOutSample->ConvertToContiguousBuffer(&pDecodedBuffer);
        }
        if (SUCCEEDED(hr))
        {
            byte *pEncodedYUVBuffer = NULL;
            DWORD buffCurrLen = 0;
            DWORD buffMaxLen = 0;

            // Lock reports the current length, so no separate GetCurrentLength call is needed.
            hr = pDecodedBuffer->Lock(&pEncodedYUVBuffer, &buffMaxLen, &buffCurrLen);
            if (SUCCEEDED(hr))
            {
                ColorConversion::YUY2toRGBBuffer(pEncodedYUVBuffer,
                    buffCurrLen,
                    mpRGBABuffer,
                    mStreamWidth,
                    mStreamHeight,
                    mbEncodeBackgroundPixels,
                    mChannelThreshold);

                pDecodedBuffer->Unlock();

                oDtn.pData = mpRGBABuffer;
                oDtn.numBytes = mStreamWidth * mStreamHeight * 4;
                oDtn.returnCode = hr; // will be S_OK..
            }
        }

        // Release the buffer on every path, including a failed Lock.
        if (pDecodedBuffer)
        {
            ::Release(&pDecodedBuffer);
        }

        return hr;
    }

Update: The decoder's output is satisfactory now after enabling CODECAPI_AVLowLatencyMode, but there is a 2-second delay in the stream compared to the sender. I'm able to achieve 15 to 20 fps, which is a lot better than before. The quality deteriorates when more changes are pushed from the source to the encoder. I have yet to implement hardware-accelerated decoding.

Update2: I figured out that the timestamp and duration settings affect the quality of the video if they are set improperly. My image source does not emit frames at a constant rate, but it looks like the encoder and decoder expect a constant frame rate. When I set the duration to a constant and increment the sample time in constant steps, the video quality is better, though still not great. I don't think what I'm doing is the correct approach. Is there any way to tell the encoder and decoder about the variable frame rate?
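One alternative to constant steps would be to derive the sample time and duration from the real capture instants. Media Foundation expresses both values in 100-nanosecond units. The sketch below is only an illustration of that unit conversion: `SampleTiming`, `timingFromCapture` and `nominalDuration` are hypothetical helpers, and whether the H.264 encoder and decoder behave well with non-uniform timestamps is an open question here, not something this code establishes.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <ratio>

// Sample times and durations in Media Foundation are in 100-nanosecond units.
using Hns = std::chrono::duration<std::int64_t, std::ratio<1, 10000000>>;
using Clock = std::chrono::steady_clock;

struct SampleTiming {
    std::int64_t time;      // time since the first frame, in 100 ns units
    std::int64_t duration;  // gap since the previous frame, in 100 ns units
};

// Hypothetical helper: timing for the frame captured at `current`, given the
// capture instants of the first frame (`start`) and the previous frame.
SampleTiming timingFromCapture(Clock::time_point start,
                               Clock::time_point previous,
                               Clock::time_point current) {
    assert(start <= previous && previous <= current);
    SampleTiming timing;
    timing.time = std::chrono::duration_cast<Hns>(current - start).count();
    timing.duration = std::chrono::duration_cast<Hns>(current - previous).count();
    return timing;
}

// Fallback duration for the very first frame, where no previous frame exists.
std::int64_t nominalDuration(std::int64_t framesPerSecond) {
    assert(framesPerSecond > 0);
    return 10000000 / framesPerSecond;
}
```

A frame captured 250 ms after the start and 150 ms after its predecessor gets a time of 2500000 and a duration of 1500000. For the first frame the measured gap is zero, so a caller could substitute `nominalDuration(30)`.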

Update3: I'm able to get acceptable performance from both the encoder and the decoder after setting CODECAPI_AVEncMPVDefaultBPictureCount (0) and CODECAPI_AVEncCommonLowLatency. I have yet to explore hardware-accelerated decoding; I hope to achieve the best performance once hardware decoding is implemented.

The quality of the video is still poor: edges and curves are not sharp, and text looks blurred, which is not acceptable. The quality is okay for videos and images but not for text and shapes.

Update4:

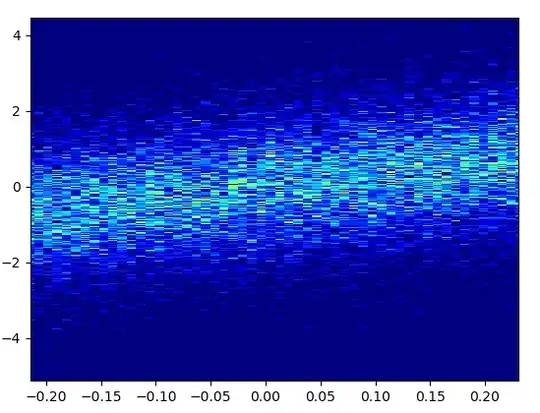

It seems some of the color information is getting lost in the YUV subsampling phase. I tried converting the RGBA buffer to YUY2 and then back; the color loss is visible but not bad. The loss due to the YUV conversion is not as bad as the quality of the image rendered after the RGBA -> YUY2 -> H264 -> YUY2 -> RGBA conversion. It's evident that the YUY2 conversion is not the sole reason for the loss of quality; the H264 encoder further causes aliasing. I would still have obtained better video quality if the H264 encoder didn't introduce aliasing effects. I'm going to explore WMV codecs.

The only thing that still bothers me is this: the mentioned code works pretty well and is able to capture the screen and save the stream to a file in MP4 format. The only difference is that I'm using a Media Foundation transform with MFVideoFormat_YUY2 as the input format, whereas the mentioned code uses the sink writer approach with MFVideoFormat_RGB32 as the input type. I still have some hope that it is possible to achieve better quality through Media Foundation itself. The thing is, MFTEnum/ProcessInput fails if I specify MFVideoFormat_ARGB32 as the input format in MFT_REGISTER_TYPE_INFO (MFTEnum)/SetInputType respectively.

Original:

Decoded image (after RGBA -> YUY2 -> H264 -> YUY2 -> RGBA conversion):

Click to open in the new tab to view the full image so that you can see the aliasing effect.
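The chroma loss described in Update4 can be reproduced in isolation, without any H264 step. The sketch below round-trips two horizontally adjacent pixels through 4:2:2 sampling, where each pixel keeps its own luma but the pair shares one U and one V value, as in the YUY2 layout. Two things are assumptions: the full-range BT.601 coefficients (the matrix actually used by `ColorConversion` or by Media Foundation may differ) and averaging the two chroma values (a converter could also simply keep one of them). All names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdlib>

struct Rgb { int r, g, b; };
struct Yuv { double y, u, v; };

// RGB -> YUV with full-range BT.601 coefficients (an assumption, see above).
Yuv toYuv(const Rgb& p) {
    assert(p.r >= 0 && p.r <= 255 && p.g >= 0 && p.g <= 255 && p.b >= 0 && p.b <= 255);
    Yuv o;
    o.y = 0.299 * p.r + 0.587 * p.g + 0.114 * p.b;
    o.u = -0.168736 * p.r - 0.331264 * p.g + 0.5 * p.b + 128.0;
    o.v = 0.5 * p.r - 0.418688 * p.g - 0.081312 * p.b + 128.0;
    return o;
}

// Round to the nearest integer and clamp to the 0..255 range.
int clampToByte(double value) {
    return static_cast<int>(std::min(255.0, std::max(0.0, std::round(value))));
}

// YUV -> RGB, the inverse of toYuv.
Rgb toRgb(double y, double u, double v) {
    Rgb o;
    o.r = clampToByte(y + 1.402 * (v - 128.0));
    o.g = clampToByte(y - 0.344136 * (u - 128.0) - 0.714136 * (v - 128.0));
    o.b = clampToByte(y + 1.772 * (u - 128.0));
    return o;
}

// Round-trip a pixel pair through 4:2:2: luma per pixel, chroma shared.
void roundTrip422(Rgb& left, Rgb& right) {
    const Yuv a = toYuv(left);
    const Yuv b = toYuv(right);
    const double sharedU = (a.u + b.u) / 2.0;
    const double sharedV = (a.v + b.v) / 2.0;
    left = toRgb(a.y, sharedU, sharedV);
    right = toRgb(b.y, sharedU, sharedV);
}

// Largest per-channel difference between two pixels.
int maxChannelError(const Rgb& x, const Rgb& y) {
    return std::max({std::abs(x.r - y.r), std::abs(x.g - y.g), std::abs(x.b - y.b)});
}
```

A pair of identical pixels survives the round trip essentially unchanged, while a hard edge such as pure red next to pure blue comes back with both pixels shifted toward purple (the red pixel ends up at roughly (151, 24, 151) under these assumptions). That is the kind of error that would show up on colored text and thin shapes before the encoder adds anything on top.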