I am trying to understand dilated convolution. I am already familiar with the idea of enlarging the kernel by filling the gaps with zeros. It is useful for covering a larger area and getting a better understanding of larger objects. But can someone please explain how dilated convolutional layers can keep the original resolution of the feature map? Dilated convolution is used in the DeepLabV3+ architecture with atrous rates from 2 to 16. How is it possible to use dilated convolution, with its obviously larger effective kernel, without zero padding and still get a consistent output size?

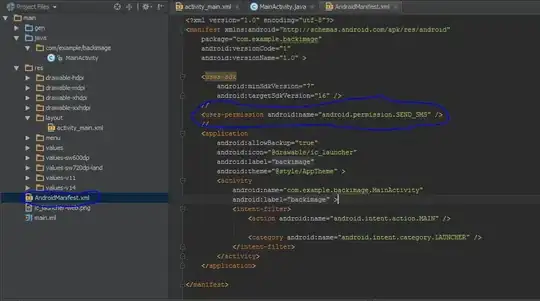

DeepLabV3+ structure:

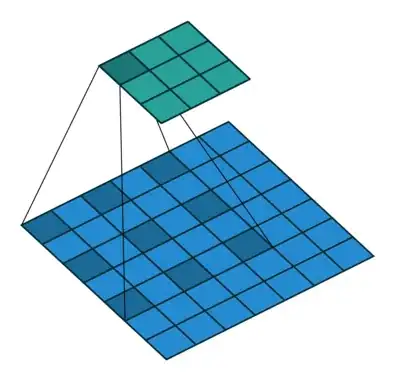

I'm confused, because when I look at this explanation:

The output size (3x3) of the dilated convolution layer is smaller?
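
To make the question concrete, here is the output-size arithmetic as I understand it. This is only a minimal sketch, assuming the usual convolution output-size formula; the helper name `conv_output_size` and the 7x7 input are my own illustrative assumptions, not something taken from the DeepLabV3+ figure.

```python
def conv_output_size(n_in, kernel, dilation=1, padding=0, stride=1):
    """Output size of a convolution along one spatial dimension.

    Hypothetical helper for illustration; assumes the standard formula
    floor((n_in + 2*padding - effective_kernel) / stride) + 1.
    """
    # A dilated kernel spans dilation * (kernel - 1) + 1 input positions.
    effective_kernel = dilation * (kernel - 1) + 1
    return (n_in + 2 * padding - effective_kernel) // stride + 1


# Assumed example: 7x7 input, 3x3 kernel, dilation rate 2.
print(conv_output_size(7, kernel=3, dilation=2))             # no padding -> 3
print(conv_output_size(7, kernel=3, dilation=2, padding=2))  # padding = dilation -> 7
```

If this arithmetic is right, the output shrinks to 3x3 without padding and only stays at 7x7 when the padding equals the dilation rate, which is exactly the part I cannot reconcile with the "without zero padding" reading.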

Thank you so much for your help!

Lukas