I'm new to TensorFlow.js and TensorFlow.

The context: we trained a model with Custom Vision to recognize hair length from an image: short, mid, or long. We exported this model and would like to run it locally with TensorFlow.js. The files exported from Custom Vision are a *.pb file and a labels.txt file.
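Since labels.txt ships with the model, the label order used on the client could be read from that file instead of being typed by hand. A minimal sketch, assuming the file holds one label per line and that the line order matches the model's output index (both are assumptions; `parseLabels` is a hypothetical helper, not part of TensorFlow.js):

```typescript
// Hypothetical helper: turns the text content of labels.txt into an array of labels.
// Assumes one label per line, with line order matching the output index.
function parseLabels(labelsText: string): string[] {
  return labelsText
    .split(/\r?\n/)                    // accept Windows and Unix line endings
    .map(line => line.trim())
    .filter(line => line.length > 0);  // skip blank lines, e.g. a trailing newline
}
```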

I used the tensorflowjs_converter Python script. Here is the command I used to convert the frozen *.pb model into a JSON model:

tensorflowjs_converter --input_format=tf_frozen_model --output_node_names='model_outputs' --output_json OUTPUT_JSON C:\python\tf_models\hairlength\model.pb C:\python\tf_models\exports\

Then I pasted the model.json and its shards into the assets folder of my Angular client. I load the model and give it an image to get a prediction, but all I get are index values that are way out of bounds, since I only expect 0: long, 1: mid, 2: short. Here is a capture of the console:

This is the class I use in my client (TypeScript) for predictions:

    import * as tf from '@tensorflow/tfjs';
    // import {HAIRLENGTH_LABELS} from './hairlength';
    import { FrozenModel } from '@tensorflow/tfjs';

    const MODEL = 'assets/models/hairlength/model.json';
    const INPUT_NODE_NAME = 'model_outputs';
    const OUTPUT_NODE_NAME = 'model_outputs';
    const PREPROCESS_DIVISOR = tf.scalar(255 / 2);

    export class MobileNetHairLength {
      private model: FrozenModel;
      private labels = ['long', 'mid', 'short'];

      constructor() {}

      async load(){
        this.model = await tf.loadGraphModel(MODEL);
      }

      dispose() {
        if (this.model) {
          this.model.dispose();
        }
      }

      /**
       * Infer through MobileNet. This does standard ImageNet pre-processing before
       * inferring through the model. This method returns named activations as well
       * as softmax logits.
       *
       * @param input un-preprocessed input Array.
       * @return The softmax logits.
       */
      predict(input) {
        const preprocessedInput = tf.div(
            tf.sub(input, PREPROCESS_DIVISOR),
            PREPROCESS_DIVISOR);
        const reshapedInput =
            preprocessedInput.reshape([1, ...preprocessedInput.shape]);
        // tslint:disable-next-line:no-unused-expression
        return this.model.execute({[INPUT_NODE_NAME]: reshapedInput}, OUTPUT_NODE_NAME);
      }

      getTopKClasses(logits, topK: number) {
        const predictions = tf.tidy(() => {
          return tf.softmax(logits);
        });
        const values = predictions.dataSync();
        predictions.dispose();
        let predictionList = [];
        for (let i = 0; i < values.length; i++) {
          predictionList.push({value: values[i], index: i});
        }
        predictionList = predictionList
            .sort((a, b) => {
              return b.value - a.value;
            })
            .slice(0, topK);
        console.log(predictionList);
        return predictionList.map(x => {
          return {label: this.labels[x.index], value: x.value};
        });
      }
    }
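The out-of-bounds indexes come from `getTopKClasses` ranking every value in the output tensor, however many there are. A small guard, sketched here under the assumption that a correct output node yields exactly one score per label, would flag a mismatch before any ranking happens (`checkOutputSize` is a hypothetical name):

```typescript
// Hypothetical guard: throws when the number of scores differs from the number of labels.
// Assumption: the intended output node produces exactly one score per label.
function checkOutputSize(values: ArrayLike<number>, labels: string[]): void {
  if (values.length !== labels.length) {
    throw new Error(
      `Output has ${values.length} values but there are ${labels.length} labels; ` +
      'the output node or the input shape may not be the intended one.');
  }
}
```

It could be called on the result of `dataSync()` before `predictionList` is built.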

And this is the class that calls the one above; I just pass it the canvas element:

    import 'babel-polyfill';
    import * as tf from '@tensorflow/tfjs';
    import { MobileNetHairLength } from './mobilenet-hairlength';

    export class PredictionHairLength {
      constructor() {}

      async predict(canvas) {
        const mobileNet = new MobileNetHairLength();
        await mobileNet.load();
        const pixels = tf.browser.fromPixels(canvas);
        console.log('Prediction');
        const result = mobileNet.predict(pixels);
        const topK = mobileNet.getTopKClasses(result, 3);
        topK.forEach(x => {
          console.log( `${x.value.toFixed(3)}: ${x.label}\n` );
        });
        mobileNet.dispose();
      }
    }
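For reference, the pre-processing in `predict` subtracts and then divides by 255 / 2 = 127.5, which maps 8-bit pixel values from [0, 255] to [-1, 1]. The plain TypeScript sketch below reproduces that arithmetic on ordinary numbers (`normalizePixel` and `normalizePixels` are hypothetical names). Whether the exported Custom Vision model actually expects this range is an assumption that is not confirmed here.

```typescript
// Same arithmetic as tf.div(tf.sub(input, d), d) with d = 255 / 2, applied to one value.
const DIVISOR = 255 / 2; // 127.5

function normalizePixel(value: number): number {
  return (value - DIVISOR) / DIVISOR;
}

// Applies the normalization to a flat array of 8-bit channel values.
function normalizePixels(pixels: ArrayLike<number>): Float32Array {
  const out = new Float32Array(pixels.length);
  for (let i = 0; i < pixels.length; i++) {
    out[i] = normalizePixel(pixels[i]);
  }
  return out;
}
```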

My questions are:

Is the Python converter command correct?

Did I miss something in my client to get the correct indexes?

Thank you for your time and answers.

If you need more information, I would be glad to provide it.

Updates 10/03/2019

I updated tensorflowjs to 1.0.0 using npm.

I saw that FrozenModel is now deprecated.

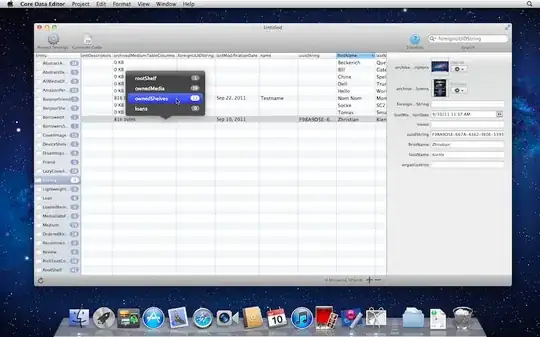

Exporting my Custom Vision model gives me a model.pb file and a labels.txt file, like this:

I have tried using these files with Python and everything works fine. I would now like to convert this model.pb file to a model.json file so I can use it with TensorFlow.js. For this I need tensorflowjs_converter; the problem is that the exported files do not have the directory structure required to convert them as a SavedModel, see: https://www.tensorflow.org/guide/saved_model#structure_of_a_savedmodel_directory

The only thing that works is using the tf_frozen_model format in the converter and giving 'loss' as the output node name, like this:

tensorflowjs_converter --input_format=tf_frozen_model --output_node_names='loss' --output_json OUTPUT_JSON C:\python\tf_models\hairlength\model.pb C:\python\tf_models\exports\

These are the outputs I get when running the above command:

Then I load the model. Here is my code to load the exported JSON model and predict with it (I now use predict() and have removed the input and output node names, as you advised):

    import * as tf from '@tensorflow/tfjs';
    import { GraphModel } from '@tensorflow/tfjs';

    const MODEL = 'assets/models/hairlength/model.json';
    // const INPUT_NODE_NAME = 'Placeholder';
    // const OUTPUT_NODE_NAME = 'loss';
    const PREPROCESS_DIVISOR = tf.scalar(255 / 2);

    export class MobileNetHairLength {
      private model: GraphModel;
      private labels = ['long', 'mid', 'short'];

      constructor() {}

      async load() {
        this.model = await tf.loadGraphModel(MODEL);
      }

      dispose() {
        if (this.model) {
          this.model.dispose();
        }
      }

      /**
       * Infer through MobileNet. This does standard ImageNet pre-processing before
       * inferring through the model. This method returns named activations as well
       * as softmax logits.
       *
       * @param input un-preprocessed input Array.
       * @return The softmax logits.
       */
      predict(input: tf.Tensor<tf.Rank>) {
        const preprocessedInput = tf.div(
            tf.sub(input.asType('float32'), PREPROCESS_DIVISOR),
            PREPROCESS_DIVISOR);
        const reshapedInput =
            preprocessedInput.reshape([...preprocessedInput.shape]);
        return this.model.predict(reshapedInput);
      }

      getTopKClasses(logits, topK: number) {
        const predictions = tf.tidy(() => {
          return tf.softmax(logits);
        });
        const values = predictions.dataSync();
        predictions.dispose();
        let predictionList = [];
        for (let i = 0; i < values.length; i++) {
          predictionList.push({value: values[i], index: i});
        }
        predictionList = predictionList
            .sort((a, b) => {
              return b.value - a.value;
            })
            .slice(0, topK);
        console.log(predictionList);
        return predictionList.map(x => {
          return {label: this.labels[x.index], value: x.value};
        });
      }
    }
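One thing worth checking in `getTopKClasses`: it applies `tf.softmax` to whatever the model returns. If the output node already produces probabilities (an assumption; a node named `loss` may or may not do so), a second softmax keeps the ranking but flattens the values. The plain TypeScript sketch below illustrates that effect on made-up numbers, without TensorFlow.js:

```typescript
// Numerically stable softmax over a plain array of numbers.
function softmax(values: number[]): number[] {
  const max = Math.max(...values);
  const exps = values.map(v => Math.exp(v - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map(e => e / sum);
}

const logits = [2, 1, 0];      // illustrative values, not real model output
const once = softmax(logits);  // probabilities
const twice = softmax(once);   // softmax applied to probabilities a second time

// The ordering is unchanged, but the top value moves toward 1/3 after the second pass.
console.log(once.map(v => v.toFixed(3)), twice.map(v => v.toFixed(3)));
```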

And the calling class is this one:

    import 'babel-polyfill';
    import * as tf from '@tensorflow/tfjs';
    import { MobileNetHairLength } from './mobilenet-hairlength';

    export class PredictionHairLength {
      constructor() {}

      async predict(canvas) {
        // Convert to tensor
        const mobileNet = new MobileNetHairLength();
        await mobileNet.load();
        const imgTensor = tf.browser.fromPixels(canvas);
        console.log(imgTensor);
        // Init input with correct shape
        const input = tf.zeros([1, 224, 224, 3]);
        // Add img to input
        input[0] = imgTensor;
        console.log('Prediction');
        const result = mobileNet.predict(input);
        console.log(result);
        const topK = mobileNet.getTopKClasses(result, 3);
        topK.forEach(x => {
          console.log( `${x.value.toFixed(3)}: ${x.label}\n` );
        });
        mobileNet.dispose();
      }
    }
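Note that TensorFlow.js tensors are immutable, so `input[0] = imgTensor` does not copy the image data into the zero-filled tensor. What the model needs is the image itself with a leading batch dimension. The helper below is a hedged, plain TypeScript sketch of the shape bookkeeping only (`toBatchShape` and `shapesMatch` are hypothetical names, and the expected shape [1, 224, 224, 3] is taken from the `tf.zeros` call in the code above):

```typescript
// Prepends a batch dimension of 1 to an image shape: [h, w, c] becomes [1, h, w, c].
function toBatchShape(imageShape: number[]): number[] {
  return [1, ...imageShape];
}

// Returns true when two shapes have the same rank and the same size in every dimension.
function shapesMatch(a: number[], b: number[]): boolean {
  return a.length === b.length && a.every((dim, i) => dim === b[i]);
}

// Taken from tf.zeros([1, 224, 224, 3]) in the calling class.
const EXPECTED_INPUT_SHAPE = [1, 224, 224, 3];
```

With TensorFlow.js itself, `imgTensor.expandDims(0)` adds such a batch dimension; a canvas of a different size would still need resizing first, for example with `tf.image.resizeBilinear`.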

Sending a canvas element taken from a webcam stream then gives me this error:

How can I run the converter command with the 'saved model' format, given that the file structure is wrong?

Why do I get 'Failed to compile fragment shader error, infinity : undeclared identifier' in tf-core.esm?

Thank you for your time and answers.