The code:

```python
import multiprocessing

print(f'num cpus {multiprocessing.cpu_count():d}')

import sys; print(f'Python {sys.version} on {sys.platform}')

def _process(m):
    print(m) #; return m
    raise ValueError(m)

args_list = [[i] for i in range(1, 20)]

if __name__ == '__main__':
    with multiprocessing.Pool(2) as p:
        print([r for r in p.starmap(_process, args_list)])
```

prints:

```
num cpus 8
Python 3.7.1 (v3.7.1:260ec2c36a, Oct 20 2018, 03:13:28)
[Clang 6.0 (clang-600.0.57)] on darwin
1
7
4
10
13
16
19
multiprocessing.pool.RemoteTraceback:
"""
Traceback (most recent call last):
  File "/Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/multiprocessing/pool.py", line 121, in worker
    result = (True, func(*args, **kwds))
  File "/Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/multiprocessing/pool.py", line 47, in starmapstar
    return list(itertools.starmap(args[0], args[1]))
  File "/Users/ubik-mac13/Library/Preferences/PyCharm2018.3/scratches/multiprocess_error.py", line 8, in _process
    raise ValueError(m)
ValueError: 1
"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/Users/ubik-mac13/Library/Preferences/PyCharm2018.3/scratches/multiprocess_error.py", line 18, in <module>
    print([r for r in p.starmap(_process, args_list)])
  File "/Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/multiprocessing/pool.py", line 298, in starmap
    return self._map_async(func, iterable, starmapstar, chunksize).get()
  File "/Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/multiprocessing/pool.py", line 683, in get
    raise self._value
ValueError: 1

Process finished with exit code 1
```

Increasing the number of processes in the pool to 3 or 4 prints all the odd numbers (possibly out of order):

```
1
3
5
9
11
7
13
15
17
19
```

With 5 or more processes, it prints the whole range 1-19. So what is happening here? Do the worker processes crash after a certain number of failures?

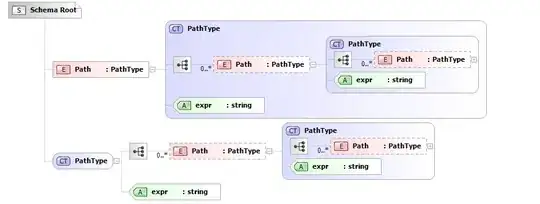

This is a toy example of course but it comes from a real code issue I had - having left a multiprocessing pool run for some days steadily the cpu use went down as if some processes were killed (note the cpu utilization going downhill on 03/04 and 03/06 while there was still lots of tasks to be run):

When the code terminated, it presented me with one `multiprocessing.pool.RemoteTraceback` (and only one, as here, even though there were many more processes). Bonus question: is this traceback random? In this toy example it is usually `ValueError: 1`, but other numbers sometimes appear. Does multiprocessing keep the traceback from the first process that crashes?