Token-Level Comparison

If you want to know whether the annotation differs, you'll have to go through the documents token by token and compare POS tags, dependency labels, etc. Assuming the tokenization is the same for both versions of the text, you can compare them like this:

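```python
import spacy

# 'en' was a spaCy v1/v2 shortcut link; spaCy v3 expects a package name instead
nlp = spacy.load("en_core_web_sm")

doc1 = nlp("What's wrong with my NLP?")
doc2 = nlp("What's wring wit my nlp?")

# zip() silently stops at the shorter doc, so this relies on identical tokenization
for token1, token2 in zip(doc1, doc2):
    print(token1.pos_, token2.pos_, token1.pos_ == token2.pos_)
```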

Output:

```
NOUN NOUN True
VERB VERB True
ADJ VERB False
ADP NOUN False
ADJ ADJ True
NOUN NOUN True
PUNCT PUNCT True
```
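The loop above only compares coarse POS tags and relies on `zip()`, which quietly truncates if one doc has more tokens than the other. A slightly more general sketch, assuming both inputs are spaCy `Doc` objects (or anything exposing the same `text`, `pos_`, `tag_`, `dep_` and `head.i` attributes), collects every mismatch, including the head each token attaches to, and fails loudly when the tokenization differs. `compare_tokens` is a hypothetical helper name, not part of spaCy.

```python
from typing import Iterable, List, Sequence, Tuple


def compare_tokens(doc1: Sequence, doc2: Sequence,
                   attrs: Iterable[str] = ("pos_", "tag_", "dep_")) -> List[Tuple]:
    """Return (index, text1, text2, attribute, value1, value2) for each mismatch.

    Hypothetical helper (not a spaCy API). Works on spaCy Docs or any sequence
    of token-like objects with the same attribute names.
    """
    if len(doc1) != len(doc2):
        # Token-by-token comparison only makes sense with identical tokenization.
        raise ValueError(f"tokenization differs: {len(doc1)} vs {len(doc2)} tokens")
    attrs = tuple(attrs)
    diffs = []
    for i, (t1, t2) in enumerate(zip(doc1, doc2)):
        for attr in attrs:
            v1, v2 = getattr(t1, attr), getattr(t2, attr)
            if v1 != v2:
                diffs.append((i, t1.text, t2.text, attr, v1, v2))
        # Compare attachments by head index: tokens from two different docs
        # are different objects, so comparing the heads themselves is useless.
        if t1.head.i != t2.head.i:
            diffs.append((i, t1.text, t2.text, "head", t1.head.i, t2.head.i))
    return diffs
```

With the two docs from the snippet above, `compare_tokens(doc1, doc2)` returns one tuple per differing attribute; given the tags in the output shown, the first row would concern token 2 ("wrong" vs. "wring").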

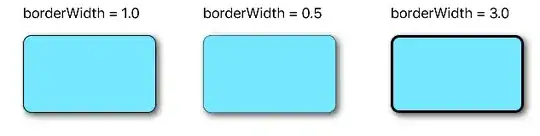

Visualization for Parse Comparison

If you want to inspect the differences visually, you might be looking for something like whatswrong ("What's Wrong With My NLP?"). If the tokenization is the same for both versions of the document, I think you can compare the parses like this:

First, you'd need to export your annotation into a format whatswrong supports (some version of CoNLL for dependency parses), which is something textacy can do. (See: https://www.pydoc.io/pypi/textacy-0.4.0/autoapi/export/index.html#export.export.doc_to_conll)

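```python
from textacy import export  # present in older textacy releases such as 0.4.0

# nlp is the pipeline from the first snippet; double quotes avoid clashing with the apostrophe
print(export.doc_to_conll(nlp("What's wrong with my NLP?")))
```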

Output:

```
# sent_id 1
1 What what NOUN WP _ 2 nsubj _ SpaceAfter=No
2 's be VERB VBZ _ 0 root _ _
3 wrong wrong ADJ JJ _ 2 acomp _ _
4 with with ADP IN _ 3 prep _ _
5 my -PRON- ADJ PRP$ _ 6 poss _ _
6 NLP nlp NOUN NN _ 4 pobj _ SpaceAfter=No
7 ? ? PUNCT . _ 2 punct _ SpaceAfter=No
```
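The linked documentation is for textacy 0.4.0; if your installed textacy no longer ships `export.doc_to_conll`, a hand-rolled exporter is short. This is a minimal sketch, assuming spaCy-style token attributes (`i`, `text`, `lemma_`, `pos_`, `tag_`, `dep_`, `head`, `whitespace_`); `doc_to_conllu` is a hypothetical name. It fills the ten standard CoNLL-U columns, leaves FEATS and DEPS as `_`, and separates columns with tabs as real CoNLL files do (the listings in this answer show spaces).

```python
def doc_to_conllu(sents) -> str:
    """Serialize parsed sentences as CoNLL-U-style text.

    Hypothetical helper, not a textacy or spaCy API. `sents` is an iterable of
    sentences (e.g. spaCy's `doc.sents`); each sentence is an indexable
    sequence of token-like objects.
    """
    blocks = []
    for n, sent in enumerate(sents, start=1):
        offset = sent[0].i  # doc-level index of the sentence's first token
        lines = [f"# sent_id {n}"]
        for tok in sent:
            # spaCy marks the root by making a token its own head; CoNLL uses 0.
            head = 0 if tok.head.i == tok.i else tok.head.i - offset + 1
            misc = "_" if tok.whitespace_ else "SpaceAfter=No"
            cols = [str(tok.i - offset + 1), tok.text, tok.lemma_, tok.pos_,
                    tok.tag_, "_", str(head), tok.dep_, "_", misc]
            lines.append("\t".join(cols))
        blocks.append("\n".join(lines))
    # CoNLL-U ends every sentence, including the last, with a blank line.
    return "".join(block + "\n\n" for block in blocks)
```

Calling `doc_to_conllu(nlp("What's wrong with my NLP?").sents)` should give the same layout as the textacy output above, with dependency labels written exactly as spaCy stores them (e.g. `ROOT`).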

Then you need to decide how to modify the files so that both versions of each token are visible in the analysis. I'd suggest concatenating the tokens wherever they differ, e.g.:

```
1 What what NOUN WP _ 2 nsubj _ SpaceAfter=No
2 's be VERB VBZ _ 0 root _ _
3 wrong_wring wrong ADJ JJ _ 2 acomp _ _
4 with_wit with ADP IN _ 3 prep _ _
5 my -PRON- ADJ PRP$ _ 6 poss _ _
6 NLP_nlp nlp NOUN NN _ 4 pobj _ SpaceAfter=No
7 ? ? PUNCT . _ 2 punct _ SpaceAfter=No
```

vs. the annotation for "What's wring wit my nlp?":

```
1 What what NOUN WP _ 3 nsubj _ SpaceAfter=No
2 's be VERB VBZ _ 3 aux _ _
3 wrong_wring wr VERB VBG _ 4 csubj _ _
4 with_wit wit NOUN NN _ 0 root _ _
5 my -PRON- ADJ PRP$ _ 6 poss _ _
6 NLP_nlp nlp NOUN NN _ 4 dobj _ SpaceAfter=No
7 ? ? PUNCT . _ 4 punct _ SpaceAfter=No
```
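The concatenation step can be scripted rather than edited by hand. A minimal sketch, assuming the two CoNLL-U texts are tab-separated and line-aligned (identical tokenization, so token N sits on the same line in both); `concat_forms` is a hypothetical helper name. Where the FORM column differs, both files get `gold_guess`, matching the `wrong_wring` rows above, while every other column keeps its own value.

```python
def concat_forms(gold: str, guess: str, sep: str = "_"):
    """Return copies of two aligned CoNLL-U texts where differing FORMs read 'gold<sep>guess'.

    Hypothetical helper. Comment lines and blank lines are passed through unchanged.
    """
    gold_lines, guess_lines = gold.splitlines(), guess.splitlines()
    if len(gold_lines) != len(guess_lines):
        raise ValueError("the two files are not line-aligned")
    out_gold, out_guess = [], []
    for g, h in zip(gold_lines, guess_lines):
        if not g or g.startswith("#"):
            out_gold.append(g)
            out_guess.append(h)
            continue
        gc, hc = g.split("\t"), h.split("\t")
        if gc[1] != hc[1]:  # index 1 is the FORM column
            merged = f"{gc[1]}{sep}{hc[1]}"
            gc[1] = hc[1] = merged
        out_gold.append("\t".join(gc))
        out_guess.append("\t".join(hc))
    return "\n".join(out_gold) + "\n", "\n".join(out_guess) + "\n"
```

Only FORM is merged, so each file still carries its own lemma, tags and arcs, which is what whatswrong needs to draw the two parses.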

Then you need to convert both files to an older version of CoNLL that whatswrong supports. (The main issue is just removing the comment lines starting with #.) One existing option is the UD tools CoNLL-U to CoNLL-X converter: https://github.com/UniversalDependencies/tools/blob/master/conllu_to_conllx.pl. After conversion you have:

```
1 What what NOUN NOUN_WP _ 2 nsubj _ _
2 's be VERB VERB_VBZ _ 0 root _ _
3 wrong_wring wrong ADJ ADJ_JJ _ 2 acomp _ _
4 with_wit with ADP ADP_IN _ 3 prep _ _
5 my -PRON- ADJ ADJ_PRP$ _ 6 poss _ _
6 NLP_nlp nlp NOUN NOUN_NN _ 4 pobj _ _
7 ? ? PUNCT PUNCT_. _ 2 punct _ _
```
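If you'd rather not run the Perl script, the transformation visible in the converted listing above is easy to mimic in Python. This sketch reproduces only what that listing shows (comment lines dropped, UPOS copied to CPOSTAG, `UPOS_XPOS` written to POSTAG, MISC blanked), plus skipping multiword-token ranges and empty nodes, which CoNLL-X cannot represent. It is not a port of `conllu_to_conllx.pl`, and `conllu_to_conllx` is a hypothetical name; it assumes tab-separated, ten-column input.

```python
def conllu_to_conllx(text: str) -> str:
    """Approximate the CoNLL-U -> CoNLL-X conversion shown above (hypothetical helper)."""
    out = []
    for line in text.splitlines():
        if line.startswith("#"):
            continue  # CoNLL-X has no comment lines
        if not line.strip():
            out.append("")  # keep blank lines as sentence separators
            continue
        cols = line.split("\t")
        if "-" in cols[0] or "." in cols[0]:
            continue  # multiword-token range (1-2) or empty node (1.1)
        # Unpacking fails loudly if a line does not have exactly ten columns.
        idx, form, lemma, upos, xpos, feats, head, deprel, deps, _misc = cols
        out.append("\t".join([idx, form, lemma, upos, f"{upos}_{xpos}",
                              feats, head, deprel, deps, "_"]))
    return "\n".join(out) + "\n"
```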

You can then load these files in whatswrong (one as gold and one as guess) and compare them. Choose the format CoNLL 2006, which is the same as CoNLL-X.

This Python port of whatswrong is a little unstable but basically seems to work: https://github.com/ppke-nlpg/whats-wrong-python

Both tools seem to assume that the POS tags are gold, though, so differences in the POS tags aren't shown automatically. Since you really need the POS tags to understand why the parses differ, you could also concatenate the POS columns (just like the tokens) so that both versions are visible.
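Concatenating the POS columns works the same way as the tokens. A sketch for the converted CoNLL-X files, assuming they are tab-separated and line-aligned; `concat_pos` is a hypothetical name. It merges CPOSTAG and POSTAG (0-based columns 3 and 4) and uses `/` as the default separator, because after conversion POSTAG already contains an underscore (`ADJ_JJ`), so `_` would make the merged value ambiguous.

```python
def concat_pos(gold: str, guess: str, cols=(3, 4), sep: str = "/"):
    """Merge differing values in the given 0-based columns of two aligned CoNLL-X texts.

    Hypothetical helper. Both outputs show 'gold<sep>guess' wherever the
    columns disagree; the default cols target CPOSTAG and POSTAG.
    """
    gold_lines, guess_lines = gold.splitlines(), guess.splitlines()
    if len(gold_lines) != len(guess_lines):
        raise ValueError("the two files are not line-aligned")
    out_gold, out_guess = [], []
    for g, h in zip(gold_lines, guess_lines):
        if not g.strip():  # sentence boundary
            out_gold.append(g)
            out_guess.append(h)
            continue
        gc, hc = g.split("\t"), h.split("\t")
        for c in cols:
            if gc[c] != hc[c]:
                # The right-hand side is built from the original values before assignment.
                gc[c] = hc[c] = f"{gc[c]}{sep}{hc[c]}"
        out_gold.append("\t".join(gc))
        out_guess.append("\t".join(hc))
    return "\n".join(out_gold) + "\n", "\n".join(out_guess) + "\n"
```

For the `wrong_wring` row, both files would then show `ADJ/VERB` and `ADJ_JJ/VERB_VBG`, while the HEAD and DEPREL columns still differ, so whatswrong can draw the two attachments.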

For both the token pairs and the POS pairs, I think it would be easy to modify either the original implementation or the Python port to show both alternatives separately in additional rows, so you don't have to do the hacky concatenation.