I have implemented a gradient-boosted decision tree for multiclass classification. My custom loss functions look like this:

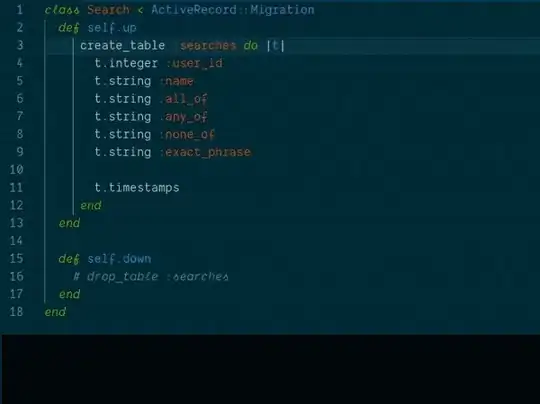

    import numpy as np
    from sklearn.preprocessing import OneHotEncoder


    def softmax(mat):
        # Row-wise softmax over the class axis.
        res = np.exp(mat)
        return res / np.sum(res, axis=1, keepdims=True)


    def custom_asymmetric_objective(y_true, y_pred_encoded):
        # Raw scores arrive flattened column-major: (n_samples * 3,) -> (n_samples, 3).
        pred = y_pred_encoded.reshape((-1, 3), order='F')
        pred = softmax(pred)
        # Note: scikit-learn >= 1.2 renames `sparse` to `sparse_output`.
        y_true = OneHotEncoder(sparse=False, categories='auto').fit_transform(y_true.reshape(-1, 1))
        grad = (pred - y_true).astype("float")
        hess = 2.0 * pred * (1.0 - pred)
        # Flatten back to the column-major layout of the input.
        return grad.flatten('F'), hess.flatten('F')


    def custom_asymmetric_valid(y_true, y_pred_encoded):
        y_true = OneHotEncoder(sparse=False, categories='auto').fit_transform(y_true.reshape(-1, 1)).flatten('F')
        margin = (y_true - y_pred_encoded).astype("float")
        loss = margin * 10
        # Returns (metric name, metric value, is_higher_better).
        return "custom_asymmetric_eval", np.mean(loss), False

Everything works, but now I want to adjust my loss function as follows: it should penalize an item that is classified incorrectly, and it should also add a penalty for a certain constraint. The penalty is computed beforehand; assume it is just a real number, e.g. 0.05. Is there a way to account for both the misclassification and the penalty value in the loss?
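To make the question concrete, here is a minimal sketch of one possible approach, not a confirmed solution. A constant added to the loss has zero gradient with respect to the predictions, so on its own it would not change training. This sketch therefore scales the gradient and Hessian of each sample by `(1 + penalty)` instead. The name `penalized_objective`, the `penalty` argument, the constant `NUM_CLASS`, and the assumption that labels are the integers 0 to 2 are all hypothetical and chosen for illustration.

```python
import numpy as np

NUM_CLASS = 3  # assumption: three classes, matching the reshape in the question


def softmax(mat):
    # Subtract the row-wise maximum before exponentiating for numerical stability.
    shifted = mat - np.max(mat, axis=1, keepdims=True)
    res = np.exp(shifted)
    return res / np.sum(res, axis=1, keepdims=True)


def penalized_objective(y_true, y_pred_encoded, penalty):
    """Hypothetical variant: softmax gradient and Hessian scaled by (1 + penalty).

    penalty is a scalar or one precomputed value per sample (an assumption).
    """
    pred = softmax(y_pred_encoded.reshape((-1, NUM_CLASS), order='F'))
    # One-hot encode integer labels 0..NUM_CLASS-1 (assumed label format).
    onehot = np.eye(NUM_CLASS)[np.asarray(y_true, dtype=int)]
    # Expand the penalty to one weight per sample, then broadcast over classes.
    per_sample = np.broadcast_to(np.asarray(penalty, dtype=float), (pred.shape[0],))
    weight = 1.0 + per_sample[:, None]
    grad = weight * (pred - onehot)
    hess = weight * 2.0 * pred * (1.0 - pred)
    return grad.flatten('F'), hess.flatten('F')


# Example call with four samples and all-zero raw scores.
y_true = np.array([0, 1, 2, 1])
raw_scores = np.zeros(4 * NUM_CLASS)
grad, hess = penalized_objective(y_true, raw_scores, penalty=0.05)
```

Passing an array with one penalty per sample instead of a scalar would penalize only the items that violate the constraint. Whether this kind of reweighting matches the intended constraint is an open part of the question.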