We have S1: 20 DTU 250GB SQL Azure database with following table

CREATE TABLE [dbo].[Reads]

(

[ReadId] [INT] IDENTITY(1,1) NOT NULL,

[LicenseNumber] [VARCHAR](50) NULL,

[Name] [VARCHAR](50) NULL,

[Serial] [VARCHAR](20) NULL,

[FirstSeenUtc] [DATETIME] NULL,

[LastSeenUtc] [DATETIME] NULL,

[Count] [INT] NOT NULL,

[Model] [VARCHAR](100) NULL,

[Make] [VARCHAR](100) NULL,

[TimestampUtc] [DATETIME] NOT NULL,

[PortNumber] [INT] NOT NULL,

[Code] [VARCHAR](50) NULL,

[Peak] [FLOAT] NULL,

CONSTRAINT [PK_Reads]

PRIMARY KEY CLUSTERED ([ReadId] ASC)

WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]

) ON [PRIMARY]

This table has more than 80 million rows and simple query as

select count(1) from dbo.Reads

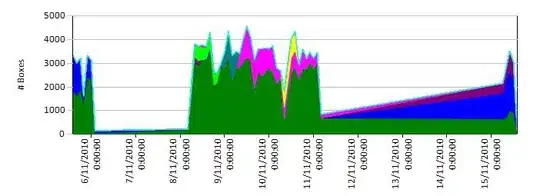

took 1 hour and 30 minutes to run. The load on the database in minimal with a process adding maybe around 1000 rows every minute. Currently nothing reading from this table and overall pretty much no load on the database.

I upgraded the database to S2: 50 DTU and the above query took 18 minutes to run.

I updated stats but didn't help much. I ran Brent Ozar's BlitzFirst stored procedure while the above query was running and it said database is maxing out data IO. Same database restored on my surface laptop returns row count in a second. Database performance tab does not have any recommendations.

S2: 50 DTU costs $75 per month and next option is S3: 100 DTU at $150 per month.

My plan was to create a database for every customer I sign up but at $150 per database per month I will go out of business pretty quick!

Is this SQL Azure's expected level of performance? Shouldn't this sort of basic query yield instantaneous result? Would moving to SQL Server on VM be better?

[Update 2019-03-10 11:35AM EST]

The table does have following IX

CREATE NONCLUSTERED INDEX [IX_Read_License_Code_TimeStamp] ON [dbo].[Reads]

(

[LicenseNumber] ASC,

[Code] ASC,

[TimestampUtc] ASC

)WITH (STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = OFF) ON [PRIMARY]

I see now that some of the columns can be safely changed into NOT NULL and could help improve things.

[Update: 2019-03-10 8:40PM EST]

I altered the table to make LicenseNumber and Code NOT NULL that took more than 6 hours. After that the count query ran in 1 minute and 32 seconds.

Following query returned results in 40 seconds

select Code, LicenseNumber, TimeStampUtc from dbo.Reads Where TimestampUtc >= '2019-03-10'