I am performing some execution time benchmarks for my implementation of quicksort. Out of 100 successive measurements on exactly the same input data it seems like the first call to quicksort takes roughly 10 times longer than all consecutive calls. Is this a consequence of the operating system getting ready to execute the program, or is there some other explanation? Moreover, is it reasonable to discard the first measurement when computing an average runtime?

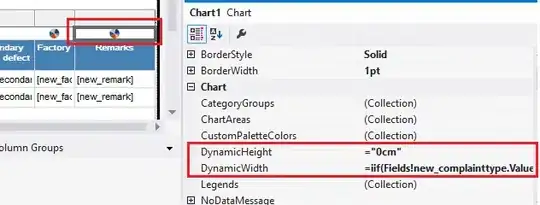

The below bar chart illustrates execution time (miliseconds) versus method call number. Each time the method is called it processes the exact same data.

To produce this particular graph the main method makes a call to quicksort_timer::time_fpi_quicksort(5, 100) whose implementation can be seen below.

static void time_fpi_quicksort(int size, int runs)

{

std::vector<int> vector(size);

for (int i = 0; i < runs; i++)

{

vector = utilities::getRandomIntVectorWithConstantSeed(size);

Timer timer;

quicksort(vector, ver::FixedPivotInsertion);

}

}

The getRandomIntVectorWithConstantSeed is implemented as follows

std::vector<int> getRandomIntVectorWithConstantSeed(int size)

{

std::vector<int> vector(size);

srand(6475307);

for (int i = 0; i < size; i++)

vector[i] = rand();

return vector;

}

CPU and Compilation

CPU: Broadwell 2.7 GHz Intel Core i5 (5257U)

Compiler Version: Apple LLVM version 10.0.0 (clang-1000.11.45.5)

Compiler Options: -std=c++17 -O2 -march=native