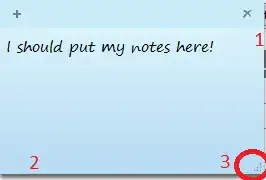

Which means it uses 52 bits for the fraction (significand). But in the picture above, it seems like 0.57 in binary uses 54 bits.

JavaScript’s Number type, which is essentially IEEE 754 basic 64-bit binary floating-point, has 53-bit significands. 52 bits are encoded in the “trailing significand” field. The leading bit is encoded via the exponent field (an exponent field of 1-2046 means the leading bit is one, an exponent field of 0 means the leading bit is zero, and an exponent field of 2047 is used for infinity or NaN).
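As a rough illustration of that layout, the fields of 0.57 can be pulled apart with a `DataView`. This is only a sketch: `decodeDouble` is a hypothetical helper name made up for this example, not a built-in.

```javascript
// Split a Number into the three IEEE 754 binary64 fields.
// `decodeDouble` is a hypothetical helper, used only for illustration.
function decodeDouble(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x); // DataView is big-endian unless told otherwise
  const bits = view.getBigUint64(0);
  return {
    sign: Number(bits >> 63n),
    exponentField: Number((bits >> 52n) & 0x7ffn),
    trailingSignificand: bits & ((1n << 52n) - 1n), // the 52 stored bits
  };
}

const fields = decodeDouble(0.57);

// An exponent field of 1-2046 means the implicit leading bit is one.
const leadingBit =
  fields.exponentField >= 1 && fields.exponentField <= 2046 ? 1n : 0n;

// Full significand: the implicit leading bit followed by the 52 stored bits.
const significand = (leadingBit << 52n) | fields.trailingSignificand;

console.log(fields.exponentField);            // biased exponent field
console.log(significand.toString(2).length);  // number of significand bits
```

For 0.57 the exponent field falls in the normal range, so the leading bit is one and the full significand is 53 bits long even though only 52 are stored.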

The value you see for .57 has 53 significant bits. The leading “0.” is produced by the toString operation; it is not part of the encoding of the number.
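One way to check the count, assuming an engine whose `toString(2)` prints the exact binary expansion (current V8 appears to), is to strip the leading “0.” and any leading zeros and count what remains:

```javascript
const binary = (0.57).toString(2);

// Drop the "0." decoration and any leading zero bits, which are not significant.
const significantBits = binary.replace(/^0\./, "").replace(/^0+/, "");

console.log(binary);
console.log(significantBits.length); // count of significant bits
```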

But why does 0.55 + 1 = 1.55 (no loss) while 0.57 + 1 = 1.5699999999999998?

When JavaScript is formatting some Number x for display with its default rules, those rules say to produce the shortest decimal numeral (in its significant digits, not counting decorations like a leading “0.”) that, when converted back to the Number format, produces x. Purposes of this rule include (a) always ensuring the display uniquely identifies which exact Number value was the source value and (b) not using more digits than necessary to accomplish (a).

Thus, if you start with a decimal numeral such as .57 and convert it to a Number, you get some value x that is a result of the conversion having to round to a number representable in the Number format. Then, when x is formatted for display, you get the original number, because the rule that says to produce the shortest number that converts back to x naturally produces the number you started with.
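A small sketch of that round trip, using nothing beyond the built-in `Number` and `String` conversions:

```javascript
const x = Number("0.57");   // rounds to the nearest representable Number
const shown = String(x);    // shortest numeral that converts back to x

console.log(shown);                 // "0.57"
console.log(Number(shown) === x);   // true: the display identifies x uniquely
console.log(Number("0.6") === x);   // false: a shorter numeral would not round-trip
```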

(But that x does not exactly represent 0.57. The nearest double to 0.57 is slightly below it; see the decimal and binary64 representations of it on an IEEE double calculator.)
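If no calculator is at hand, the same fact can be checked with exact integer arithmetic. This sketch relies on the observation that multiplying by a power of two is exact here, so `0.57 * 2 ** 53` is an integer; the variable names are made up for the example.

```javascript
// The stored value of 0.57 is n / 2^53 for some integer n.
// Scaling by 2^53 only changes the exponent, so no rounding occurs.
const n = BigInt(0.57 * 2 ** 53);

// Compare n / 2^53 with 57 / 100 by cross-multiplying, all in exact BigInt math.
const storedTimes100 = n * 100n;
const exactTimes100 = 57n * 2n ** 53n;

console.log(storedTimes100 < exactTimes100); // true: the double is below 0.57
console.log((0.57).toFixed(20));             // more digits of the stored value
```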

On the other hand, when you perform some operation such as .57 + 1, you are doing some arithmetic that produces a number y that did not start as a simple decimal numeral. So, when formatting such a number for display, the rule may require more digits be used for it. In other words, when you add .57 and 1, the result in the Number format is not the same number as you get from 1.57. So, to format the result of .57 + 1, JavaScript has to use more digits to distinguish that number from the number you get from 1.57. They are different and must be displayed differently.

If 0.57 were exactly representable as a double, the pre-rounding result of the sum would be exactly 1.57, so 1 + 0.57 would round to the same double as 1.57.

But that's not the case. It's actually 1 + nearest_double(0.57) =

1.569999999999999951150186916493 (pre-rounding, not a double) which rounds down to

1.56999999999999984012788445398. These decimal representations have many more digits than we need to distinguish 1 ulp (unit in the last place) of the significand, or even the 0.5 ulp maximum rounding error.

1.57 rounds to ~1.57000000000000006217248937901, so that's not an option for printing the result of 1 + 0.57. The decimal string needs to distinguish the number from adjacent binary64 values.
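A quick way to see that the two values are adjacent doubles: `Number.EPSILON` is the spacing between doubles in [1, 2), and the difference of two neighbours is computed exactly. The sketch below only uses standard built-ins.

```javascript
const sum = 0.57 + 1;

console.log(sum === 1.57);                  // false: different Numbers
console.log(1.57 - sum === Number.EPSILON); // true: exactly one ulp apart
console.log(String(sum));                   // shortest numeral that is not mistaken for 1.57

// More digits of each neighbour, for comparison with the values quoted above.
console.log(sum.toFixed(20));
console.log((1.57).toFixed(20));
```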

It just so happens that the rounding that occurs in .55 + 1 yields the same number one gets from converting 1.55 to Number, so displaying the result of .55 + 1 produces “1.55”.
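The same comparison for .55 shows the coincidence directly:

```javascript
const sum55 = 0.55 + 1;

console.log(sum55 === 1.55);  // true: same Number as the literal 1.55
console.log(String(sum55));   // "1.55"
```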