I'm using the EFK stack on Kubernetes (Minikube). I have an ASP.NET Core app that uses Serilog to write to the console as JSON. The logs DO ship to Elasticsearch, but they arrive as unparsed strings in the "log" field, and that is the problem.
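To make the symptom concrete, here is a minimal Python sketch (illustrative only, not part of the setup). It assumes Docker's json-file log driver, which wraps each line the app writes in an outer JSON object with `log`, `stream` and `time` keys. The sample values and variable names are hypothetical.

```python
import json

# Hypothetical, shortened version of one event the app writes to the console.
app_event = {
    "@timestamp": "2019-03-22T22:08:24.6499272+01:00",
    "level": "Fatal",
    "message": 'Text: "aaaa"',
}

# Assumed on-disk form under /var/log/containers/*.log: the app's JSON
# is stored as a *string* inside the "log" key of an outer JSON object.
docker_line = json.dumps({
    "log": json.dumps(app_event) + "\n",
    "stream": "stdout",
    "time": "2019-03-22T21:08:24.650Z",
})

# One decode (roughly what a JSON parser on the input gives):
# "log" is still an opaque string. This is what shows up in Kibana.
record = json.loads(docker_line)
print(type(record["log"]).__name__)  # str

# A second decode of that string yields the structured fields
# that should end up in Elasticsearch instead.
parsed = json.loads(record["log"])
print(parsed["level"])  # Fatal
```

The gap between `record` and `parsed` is the step that appears to be missing (or failing) in the pipeline.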

This is the console output:

    {
      "@timestamp": "2019-03-22T22:08:24.6499272+01:00",
      "level": "Fatal",
      "messageTemplate": "Text: {Message}",
      "message": "Text: \"aaaa\"",
      "exception": {
        "Depth": 0,
        "ClassName": "",
        "Message": "Boom!",
        "Source": null,
        "StackTraceString": null,
        "RemoteStackTraceString": "",
        "RemoteStackIndex": -1,
        "HResult": -2146232832,
        "HelpURL": null
      },
      "fields": {
        "Message": "aaaa",
        "SourceContext": "frontend.values.web.Controllers.HomeController",
        "ActionId": "0a0967e8-be30-4658-8663-2a1fd7d9eb53",
        "ActionName": "frontend.values.web.Controllers.HomeController.WriteTrace (frontend.values.web)",
        "RequestId": "0HLLF1A02IS16:00000005",
        "RequestPath": "/Home/WriteTrace",
        "CorrelationId": null,
        "ConnectionId": "0HLLF1A02IS16",
        "ExceptionDetail": {
          "HResult": -2146232832,
          "Message": "Boom!",
          "Source": null,
          "Type": "System.ApplicationException"
        }
      }
    }

This is the part of Program.cs that configures Serilog (ExceptionAsObjectJsonFormatter inherits from ElasticsearchJsonFormatter):

    .UseSerilog((ctx, config) =>
    {
        var shouldFormatElastic = ctx.Configuration.GetValue<bool>("LOG_ELASTICFORMAT", false);

        config
            .ReadFrom.Configuration(ctx.Configuration) // Read from appsettings and env, cmdline
            .Enrich.FromLogContext()
            .Enrich.WithExceptionDetails();

        var logFormatter = new ExceptionAsObjectJsonFormatter(renderMessage: true);
        var logMessageTemplate = "[{Timestamp:HH:mm:ss} {Level:u3}] {Message:lj}{NewLine}{Exception}";

        if (shouldFormatElastic)
            config.WriteTo.Console(logFormatter, standardErrorFromLevel: LogEventLevel.Error);
        else
            config.WriteTo.Console(standardErrorFromLevel: LogEventLevel.Error, outputTemplate: logMessageTemplate);
    })

I'm using these NuGet packages:

- Serilog.AspNetCore

- Serilog.Exceptions

- Serilog.Formatting.Elasticsearch

- Serilog.Settings.Configuration

- Serilog.Sinks.Console

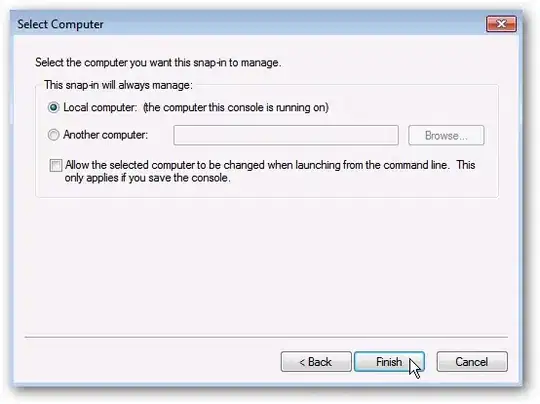

This is how it looks like in Kibana

And this is the ConfigMap for fluent-bit:

fluent-bit-filter.conf:

    [FILTER]
        Name                kubernetes
        Match               kube.*
        Kube_URL            https://kubernetes.default.svc:443
        Kube_CA_File        /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        Kube_Token_File     /var/run/secrets/kubernetes.io/serviceaccount/token
        Merge_Log           On
        K8S-Logging.Parser  On
        K8S-Logging.Exclude On

fluent-bit-input.conf:

    [INPUT]
        Name             tail
        Path             /var/log/containers/*.log
        Parser           docker
        Tag              kube.*
        Refresh_Interval 5
        Mem_Buf_Limit    5MB
        Skip_Long_Lines  On

fluent-bit-output.conf:

    [OUTPUT]
        Name            es
        Match           *
        Host            elasticsearch
        Port            9200
        Logstash_Format On
        Retry_Limit     False
        Type            flb_type
        Time_Key        @timestamp
        Replace_Dots    On
        Logstash_Prefix kubernetes_cluster

fluent-bit-service.conf:

    [SERVICE]
        Flush        1
        Daemon       Off
        Log_Level    info
        Parsers_File parsers.conf

fluent-bit.conf:

    @INCLUDE fluent-bit-service.conf
    @INCLUDE fluent-bit-input.conf
    @INCLUDE fluent-bit-filter.conf
    @INCLUDE fluent-bit-output.conf

parsers.conf:

But I also tried https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/elasticsearch/fluent-bit-configmap.yaml with my modifications.
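As a sanity check on the [OUTPUT] section: with Logstash_Format On, the target index is named from Logstash_Prefix plus a date suffix. The sketch below assumes the default date format `%Y.%m.%d`. The helper name `logstash_index` is hypothetical and only mirrors the naming scheme, not Fluent Bit's actual code.

```python
from datetime import date


def logstash_index(prefix: str, day: date) -> str:
    """Build a Logstash-style daily index name: <prefix>-YYYY.MM.DD."""
    return f"{prefix}-{day.strftime('%Y.%m.%d')}"


# Prefix taken from the config above; the date is the day the logs were written.
print(logstash_index("kubernetes_cluster", date(2019, 3, 22)))
# kubernetes_cluster-2019.03.22
```

So an index called `kubernetes_cluster-2019.03.22` would confirm that this output section is the one writing the documents.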

I used Helm to install fluent-bit with:

    helm install stable/fluent-bit --name=fluent-bit --namespace=logging --set backend.type=es --set backend.es.host=elasticsearch --set on_minikube=true

I also get a lot of the following errors:

log:{"took":0,"errors":true,"items":[{"index":{"_index":"kubernetes_cluster-2019.03.22","_type":"flb_type","_id":"YWCOp2kB4wEngjaDvxNB","status":400,"error":{"type":"mapper_parsing_exception","reason":"failed to parse","caused_by":{"type":"json_parse_exception","reason":"Duplicate field '@timestamp' at [Source: org.elasticsearch.common.bytes.BytesReference$MarkSupportingStreamInputWrapper@432f75a7; line: 1, column: 1248]"}}}}]}

and

log:[2019/03/22 22:38:57] [error] [out_es] could not pack/validate JSON response stream:stderr

as I can see in Kibana.
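One possible reading of the "Duplicate field '@timestamp'" error, stated as an assumption rather than something verified: when the app's JSON is merged into the record it brings its own `@timestamp`, and the es output then adds a second one because Time_Key is also `@timestamp`. The Python sketch below builds such a document by hand and detects the repeated key. The document contents and the helper name `duplicate_keys` are hypothetical.

```python
import json


def duplicate_keys(pairs):
    """object_pairs_hook: return the keys that occur more than once in an object."""
    seen, dupes = set(), []
    for key, _ in pairs:
        if key in seen:
            dupes.append(key)
        seen.add(key)
    return dupes


# Hypothetical bulk document: one "@timestamp" from the output's Time_Key,
# one carried over from the application's own JSON event.
doc = (
    '{"@timestamp": "2019-03-22T21:08:24.650Z", '
    '"@timestamp": "2019-03-22T22:08:24.6499272+01:00", '
    '"level": "Fatal"}'
)

print(json.loads(doc, object_pairs_hook=duplicate_keys))  # ['@timestamp']

# Python's default decoder silently keeps the last value; a strict
# parser, as in the error above, rejects the whole document instead.
print(json.loads(doc)["@timestamp"])  # 2019-03-22T22:08:24.6499272+01:00
```

If that reading is right, the 400 responses would hit exactly the records whose "log" content was merged successfully, which would also explain why only unparsed entries are visible.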