I'm using Dapper to read data from SQL Server. I have a SQL statement that returns a long JSON result, but the result is split into 3 rows with at most 2033 characters per row. Dapper then fails to parse the returned result because it is invalid JSON.

How can I prevent this splitting, or make Dapper deal with it?

This is my code:

    SqlMapper.ResetTypeHandlers();
    SqlMapper.AddTypeHandler(new JsonTypeHandler<List<Product>>());

    const string sql = @"SELECT
        *,
        (SELECT * FROM Balance b
         WHERE p.SKU = b.SKU
         FOR JSON PATH) AS [Balances]
    FROM Product p
    WHERE SKU IN @SKUs
    FOR JSON PATH";

    var connection = new SqlConnection("myconnection");

    return connection.QuerySingleAsync<List<Product>>(sql, new { SKUs = new[] { "foo", "bar" } });

And the code of the type handler:

    public class JsonTypeHandler<T> : SqlMapper.TypeHandler<T>
    {
        public override T Parse(object value)
        {
            return JsonConvert.DeserializeObject<T>(value.ToString());
        }

        public override void SetValue(IDbDataParameter parameter, T value)
        {
            parameter.Value = JsonConvert.SerializeObject(value);
        }
    }

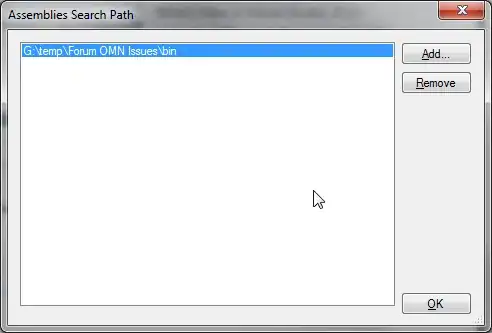

And here is how I run this SQL in DataGrip

Edit:

Here is the error message:

Newtonsoft.Json.JsonSerializationException : Unexpected end when deserializing object. Path '[0].Balances[4].WarehouseId', line 1, position 2033.
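
To illustrate what seems to be happening, here is a minimal sketch that needs no database. The fragments are made up and stand in for the rows SQL Server returns; `ChunkDemo`, `ParseChunks`, and the trimmed-down `Product` class are hypothetical names used only for this illustration. Parsing the first fragment alone fails in the same way as the error above, while the concatenated fragments deserialize fine.

```csharp
using System;
using System.Collections.Generic;
using Newtonsoft.Json;

// Trimmed-down stand-in for the real Product class (assumption).
public class Product
{
    public string SKU { get; set; }
}

public static class ChunkDemo
{
    // Joins the row fragments back into one JSON document before parsing.
    public static List<Product> ParseChunks(IEnumerable<string> chunks)
    {
        return JsonConvert.DeserializeObject<List<Product>>(string.Concat(chunks));
    }

    public static void Main()
    {
        // Made-up fragments; the real ones are up to 2033 characters each.
        var chunks = new[] { "[{\"SKU\":\"fo", "o\"},{\"SKU\":", "\"bar\"}]" };

        try
        {
            // Only the first fragment is parsed, so the JSON is truncated.
            JsonConvert.DeserializeObject<List<Product>>(chunks[0]);
        }
        catch (JsonException ex)
        {
            Console.WriteLine("First fragment alone fails: " + ex.GetType().Name);
        }

        // All fragments joined form a complete document.
        var products = ParseChunks(chunks);
        Console.WriteLine("Joined fragments parsed, count = " + products.Count);
    }
}
```

With Dapper, the fragments would presumably come from reading every row as a string, for example `connection.QueryAsync<string>(sql, ...)` instead of `QuerySingleAsync<List<Product>>`, and then joining them as above. That part is an assumption and is not tested here.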