This question is complementary to "Share credentials between native app and web site", as we aim to share secrets in the opposite direction.

TL;DR: how can we securely share the user's authentication/authorization state from a Web Browser app to a Native Desktop app, so that the same user doesn't have to authenticate again in the Native app?

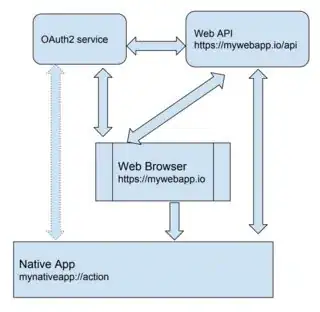

TS;WM: We are working on the following architecture: a Web Application (with some HTML front-end UI running inside a Web Browser of the user's choice), a Native Desktop Application (implementing a custom protocol handler), a Web API and an OAuth2 service, as shown in the picture above.

Initially, the user is authenticated/authorized in the Web Browser app against the OAuth2 service, using the Authorization Code Grant flow.

Then, the Web Browser content can talk to the Native app, one-way only, when the user clicks one of our custom protocol-based hyperlinks. The purpose of that link is to bootstrap a secure bidirectional back-end communication channel between the two, conducted via the Web API.

We believe that, before acting upon any request received via a custom protocol link from the Web Browser app, the Native app should first authenticate the user (who is supposed to be the same person using this particular desktop session). We think the Native app should also use the Authorization Code flow (with PKCE) to obtain an access token for the Web API. Then it should be able to securely verify the origin and integrity of the custom protocol data, using the same Web API.
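To make the PKCE part concrete, here is a minimal sketch of how the Native app (Electron main process, i.e. Node.js) might generate the code verifier and the S256 code challenge described in RFC 7636. The function names `createPkcePair` and `challengeFor` are made up for illustration; the authorization endpoint, client ID and redirect handling are deliberately left out.

```typescript
import { createHash, randomBytes } from "node:crypto";

// Base64url encoding without padding, as required by RFC 7636.
function base64Url(buf: Buffer): string {
  return buf
    .toString("base64")
    .replace(/\+/g, "-")
    .replace(/\//g, "_")
    .replace(/=+$/, "");
}

// S256 challenge: BASE64URL(SHA-256(ASCII(code_verifier))).
function challengeFor(verifier: string): string {
  return base64Url(createHash("sha256").update(verifier, "ascii").digest());
}

// Hypothetical helper: a fresh verifier/challenge pair per authorization request.
// 32 random bytes encode to a 43-character verifier, the minimum RFC 7636 allows.
function createPkcePair(): { verifier: string; challenge: string } {
  const verifier = base64Url(randomBytes(32));
  return { verifier, challenge: challengeFor(verifier) };
}
```

The challenge would go into the authorization request (with `code_challenge_method=S256`), while the verifier stays in the Native app's memory and is sent only with the token request.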

However, it can be a hindering experience for the user to have to authenticate twice, first in the Web Browser and second in the Native app, both running side-by-side.

Thus, the question: is there a way to pass an OAuth2 access token (or any other authorization bearer) from the Web Browser app to the Native app securely, without compromising the client-side security of this architecture? I.e., so the Native app could call the Web API using the identity from the Web Browser, without having to authenticate the same user first?

Personally, I can't see how we can safely avoid that additional authentication flow. Communication via a custom app protocol is insecure by default, as typically it's just a command line argument the Native app is invoked with. Unlike a TLS channel, it can be intercepted, impersonated, etc. We could possibly encrypt the custom protocol data. Still, whatever calls the Native app would have to make to decrypt it (either to a client OS API or some unprotected calls to the Web API), a bad actor/malware might be able to replicate those, too.
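To illustrate why the custom protocol data has to be treated as untrusted, here is a rough sketch of what the Native app sees when it is launched from a link. Everything specific in it is an assumption for the sake of the example: the `myapp:` scheme, the `rid` query parameter and the `parseProtocolArg` helper are hypothetical, not part of our actual design.

```typescript
import { URL } from "node:url";

// Hypothetical scheme; the real one is whatever the installer registers.
const SCHEME = "myapp:";

interface LinkRequest {
  action: string;    // e.g. "open"
  requestId: string; // opaque ID, to be verified against the Web API
}

// The link arrives as a plain command line argument, so any local process
// can start the app with an arbitrary value. Validate strictly, trust nothing.
function parseProtocolArg(argv: readonly string[]): LinkRequest | null {
  const raw = argv.find((arg) => arg.toLowerCase().startsWith(SCHEME));
  if (raw === undefined) return null;

  let url: URL;
  try {
    url = new URL(raw);
  } catch {
    return null; // not a well-formed URL
  }
  if (url.protocol !== SCHEME) return null;

  const action = url.hostname;
  const requestId = url.searchParams.get("rid") ?? "";
  if (!/^[a-z]{1,32}$/.test(action)) return null;
  if (!/^[A-Za-z0-9_-]{16,64}$/.test(requestId)) return null;
  return { action, requestId };
}
```

For example, `parseProtocolArg(process.argv)` would yield `{ action: "open", requestId: "0123456789abcdef" }` for `myapp://open?rid=0123456789abcdef`. Nothing in that argument proves it came from our web page rather than from another local process, which is exactly the concern above.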

Am I missing something? Is there a secure platform-specific solution? The Native Desktop app is an Electron app and is designed to be cross-platform. Most of our users will run this on Windows using any supported browser (including even IE11), but ActiveX or hacking into a running web browser instance is out of the question.