I'm using Mask R-CNN to train on my data. When I look at the results in TensorBoard, I see `loss`, `mrcnn_bbox_loss`, `mrcnn_class_loss`, `mrcnn_mask_loss`, `rpn_bbox_loss` and `rpn_class_loss`, plus the same six losses for validation: `val_loss`, `val_mrcnn_bbox_loss`, etc.

I want to know what exactly each loss measures.

I'd also like to know whether the first six losses are the training losses, or what else they are. If they aren't the training losses, how can I see the training loss?

My guess is:

`loss`: all five other losses combined (but I don't know how TensorBoard combines them).

`mrcnn_bbox_loss`: is the size of the bounding box correct or not?

`mrcnn_class_loss`: is the class correct? Is each pixel correctly assigned to the class?

`mrcnn_mask_loss`: is the shape of the instance correct or not? Is each pixel correctly assigned to the instance?

`rpn_bbox_loss`: is the size of the bbox correct?

`rpn_class_loss`: is the class of the bbox correct?
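To make my guesses more concrete, here is a minimal sketch of the kinds of loss functions I assume sit behind these names: cross-entropy for the class losses, smooth L1 on box offsets for the bbox losses, per-pixel binary cross-entropy for the mask loss, and `loss` as a (possibly weighted) sum of the five. This is only my assumption; I haven't checked it against the actual Mask R-CNN implementation, and the `weights` argument and the example numbers are hypothetical.

```python
import math


def binary_cross_entropy(p, y, eps=1e-7):
    """Mean binary cross-entropy between probabilities p and 0/1 labels y.
    Assumed form for rpn_class_loss (object vs. background per anchor)
    and for mrcnn_mask_loss (per pixel of the predicted mask)."""
    p = [min(max(pi, eps), 1 - eps) for pi in p]  # clip to avoid log(0)
    return -sum(
        yi * math.log(pi) + (1 - yi) * math.log(1 - pi) for pi, yi in zip(p, y)
    ) / len(y)


def softmax_cross_entropy(logits, label):
    """Cross-entropy for one ROI; logits cover all classes incl. background.
    Assumed form for mrcnn_class_loss."""
    m = max(logits)  # subtract max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def smooth_l1(pred, target):
    """Mean smooth L1 over box offsets (e.g. dy, dx, dh, dw).
    Assumed form for rpn_bbox_loss and mrcnn_bbox_loss."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d if d < 1.0 else d - 0.5
    return total / len(pred)


def total_loss(losses, weights=None):
    """My guess for `loss`: a weighted sum of the component losses
    (hypothetical weights, default 1.0 each)."""
    weights = weights or {}
    return sum(weights.get(name, 1.0) * value for name, value in losses.items())


if __name__ == "__main__":
    # Made-up predictions, only to show how the pieces would fit together.
    losses = {
        "rpn_class_loss": binary_cross_entropy([0.9, 0.2], [1, 0]),
        "rpn_bbox_loss": smooth_l1([0.1, 0.0, 0.2, -0.1], [0.0, 0.0, 0.0, 0.0]),
        # Two classes: background (index 0) and my one object class (index 1).
        "mrcnn_class_loss": softmax_cross_entropy([0.5, 2.0], 1),
        "mrcnn_bbox_loss": smooth_l1([0.05, -0.02, 0.1, 0.0], [0.0, 0.0, 0.0, 0.0]),
        "mrcnn_mask_loss": binary_cross_entropy([0.8, 0.7, 0.1, 0.3], [1, 1, 0, 0]),
    }
    print(total_loss(losses))
```

If this picture is right, the `rpn_*` losses would be about the anchors/proposals and the `mrcnn_*` losses about the final per-ROI heads, which is partly what I'm unsure about.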

But I'm pretty sure this isn't right...

Also, are some losses irrelevant if I have only one class, i.e. only the background and one other class?

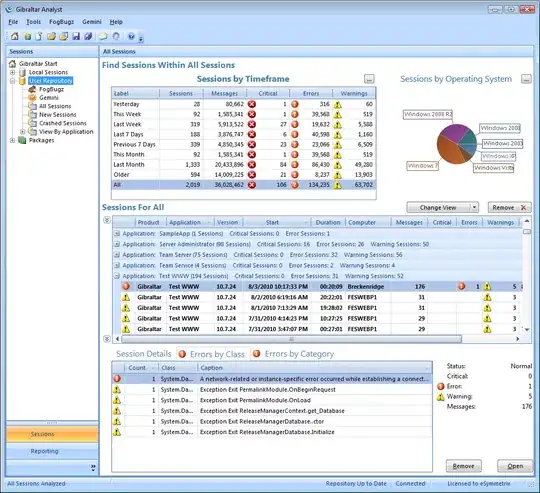

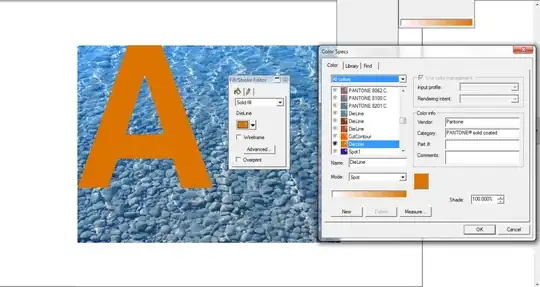

My data have only the background and 1 other class and this is my result on TensorBoard:

My predictions look OK, but I don't understand why some of my validation losses go up and down towards the end. I thought they would first only decrease and then, once the network starts overfitting, only increase. The model I used for prediction is the green line in TensorBoard, the run with the most epochs. I'm not sure whether my network is overfitting, so I'm wondering why some of the validation losses look the way they do.
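To tell whether a validation loss is really trending up or just noisy, one thing I could try is smoothing it with an exponential moving average (similar in spirit to TensorBoard's smoothing slider, as far as I understand it) and then looking at which epoch the smoothed curve is lowest. This is a minimal sketch; the function names and the `val_loss` numbers are hypothetical, not taken from my actual run.

```python
def ema_smooth(values, weight=0.6):
    """Exponential moving average with bias correction.
    weight must be in [0, 1); higher weight means stronger smoothing."""
    smoothed = []
    last = 0.0
    for i, v in enumerate(values, start=1):
        last = weight * last + (1 - weight) * v
        # Divide by (1 - weight**i) so early points aren't pulled toward 0.
        smoothed.append(last / (1 - weight ** i))
    return smoothed


def best_epoch(values):
    """1-based epoch with the lowest value (first one on ties)."""
    return min(range(len(values)), key=values.__getitem__) + 1


if __name__ == "__main__":
    # Hypothetical per-epoch validation losses, for illustration only.
    val_loss = [1.20, 0.95, 0.90, 0.80, 0.85, 0.78, 0.83, 0.79, 0.84]
    smoothed = ema_smooth(val_loss)
    print("smoothed:", [round(x, 3) for x in smoothed])
    print("lowest smoothed val_loss at epoch", best_epoch(smoothed))
```

If the smoothed curve keeps drifting down or stays flat, the ups and downs are probably just noise between epochs; if it has clearly turned upward after some epoch, that would look more like overfitting.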