I am comparing two training runs, one on CPU and one on GPU, of a tf.estimator.Estimator model fed by a tf.data.Dataset iterator. Training is handled by tf.estimator.train_and_evaluate().

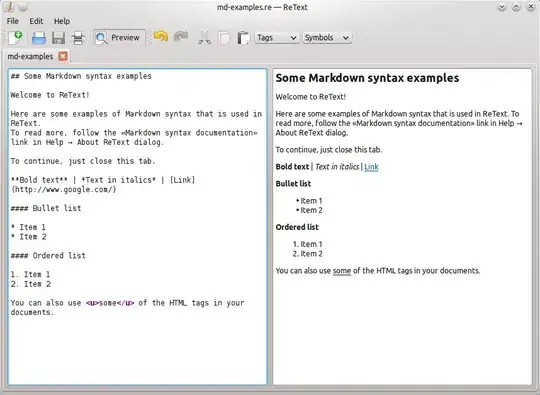

When I looked at the traces of a single training step, I noticed that the GPU run is dominated by the IteratorGetNext call, which takes 4.5 seconds. The same call takes only 100 µs when training on CPUs only. See the following screenshots of the traces:

CPU training:

GPU training:

What could be causing this, and how can I speed up the IteratorGetNext call in the GPU run?