I believe this is a simple one, and I am willing to learn more. I want to crawl website titles from a list of URLs. The goal is to predict online news popularity, and the data comes from the UCI Machine Learning Repository. Here's the link.

I followed the Scrapy tutorial and changed the code in the "quotes" spider as follows. After running "scrapy crawl quotes" in the terminal, I used "scrapy crawl quotes -o quotes.json" to save all the titles as JSON.

There are 158 missing. I have 39,644 URLs but only 39,486 website titles. In addition, the order of the titles does not match the order of the URLs. For example, the final title corresponds to the third-to-last URL. Could you please help me identify the problems?

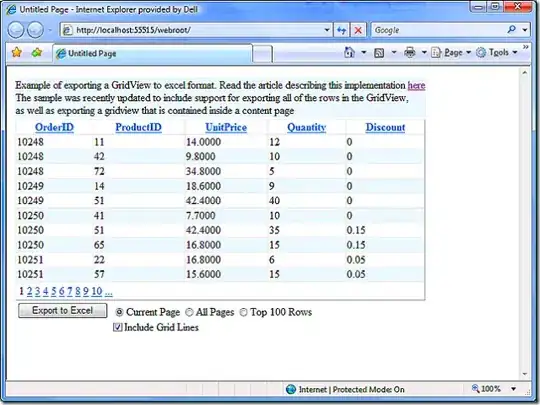

Here's the result.

I also tried Beautiful Soup in a Jupyter Notebook, but it was slow and I could not tell whether the code was still running.
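For reference, this is a minimal sketch of how such a sequential loop could at least report progress, so that it is clear whether it is still running. It is not the notebook code I ran: it uses the standard library's `html.parser` in place of Beautiful Soup to stay self-contained, and the names `TitleParser`, `extract_title`, `fetch_html`, `fetch_titles` and `report_every` are made up for illustration. It assumes the title sits in an `h1` element with class `title`, as in the spider.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TitleParser(HTMLParser):
    """Collects the text inside the first <h1 class="title"> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.done = False
        self.parts = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h1" and "title" in classes and not self.done:
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "h1" and self.in_title:
            self.in_title = False
            self.done = True

    def handle_data(self, data):
        if self.in_title:
            self.parts.append(data)


def extract_title(html):
    """Return the heading text, or None if the page has no matching h1."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return "".join(parser.parts).strip() or None


def fetch_html(url, timeout=10):
    """Download one page; the timeout keeps a dead server from blocking the loop."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def fetch_titles(urls, fetch=fetch_html, report_every=100):
    """Fetch titles one by one, in order, printing progress as it goes."""
    results = []
    total = len(urls)
    for i, url in enumerate(urls, start=1):
        try:
            title = extract_title(fetch(url))
        except Exception as exc:  # broad on purpose: one bad page should not stop the run
            print(f"failed: {url} ({exc})", flush=True)
            title = None
        results.append((url, title))
        if i % report_every == 0 or i == total:
            print(f"{i}/{total} done", flush=True)
    return results
```

Because every URL gets exactly one `(url, title)` entry, with `None` for failures, the output length always equals the input length and the order is preserved. It is still sequential, so it would stay slow for roughly 39,000 pages.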

Here's the spider code:

    import scrapy
    import pandas as pd

    df = pd.read_csv("/Users/.../OnlineNewsPopularity.csv", delim_whitespace=False)
    url = df['url']


    class QuotesSpider(scrapy.Spider):
        name = "quotes"
        start_urls = url.values.tolist()

        def parse(self, response):
            for quote in response.css('h1.title'):
                yield {
                    'Title': quote.css('h1.title::text').extract_first(),
                }
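
To make the mismatch easier to diagnose, here is a minimal sketch of how the scraped items could be lined up against the original URL list afterwards. Scrapy sends requests concurrently, so items arrive in completion order rather than in `start_urls` order, and a page without an `h1.title` yields nothing at all. The sketch assumes each item also carried the page URL under a `'url'` key (for example by adding `'url': response.url` to the yielded dict, which the spider above does not do); the helper name `align_titles` is hypothetical. A redirect could make that URL differ from the one in the CSV, so treat this as a starting point only.

```python
def align_titles(urls, items):
    """Return (url, title) pairs in the order of `urls`, plus the URLs with no title.

    `items` is a list of dicts such as {'url': ..., 'Title': ...}.
    The 'url' key is an assumption; the original spider only yields 'Title'.
    """
    title_by_url = {}
    for item in items:
        # Keep the first title seen for each URL.
        title_by_url.setdefault(item["url"], item.get("Title"))

    # Walk the original list so the output order matches the CSV order.
    aligned = [(u, title_by_url.get(u)) for u in urls]
    missing = [u for u, title in aligned if title is None]
    return aligned, missing
```

Here `items` could be the list loaded from `quotes.json` with `json.load`, and `urls` the list passed to `start_urls`. The `missing` list would then show which of the 158 URLs produced no title.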