I'm trying to figure out why my 15 GB table balloons to 182 GB when I run a simple query on it.

First I read the table into Spark from Redshift. When I tell Spark to do a simple count on the table, it works fine. However, when I try to create a table I get all kinds of YARN failures and ultimately some of my tasks have shuffle spill memory of 182 GB.

Here is the problematic query (I've changed some of the names):

    CREATE TABLE output
    SELECT
      *
    FROM inputs
    DISTRIBUTE BY id
    SORT BY snapshot_date

What is going on? How could the shuffle spill exceed the total size of the input? I'm not doing a Cartesian join or anything like that. This is a super simple query.

I'm aware that Red Hat Linux (I use EMR on AWS) has virtual memory issues, since I came across that topic here, but I've added the recommended config `classification=yarn-site,properties=[yarn.nodemanager.vmem-check-enabled=false]` to my EMR properties and the issue persists.
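For reference, here is what I understand that shorthand to mean when written as an EMR configuration object. This is only a sketch, assuming the usual `Classification` / `Properties` JSON layout; the variable names are mine and are not part of my actual cluster setup.

```python
import json

# Assumption: the standard EMR configuration-object layout
# (a list of objects with "Classification" and "Properties" keys).
emr_configurations = [
    {
        "Classification": "yarn-site",
        "Properties": {
            # Disables YARN's virtual-memory check on the node managers.
            "yarn.nodemanager.vmem-check-enabled": "false",
        },
    }
]

# Serialize to the JSON form that can be supplied as cluster configuration.
configurations_json = json.dumps(emr_configurations, indent=2)
print(configurations_json)
```

As far as I can tell this only turns off the virtual-memory check and does not change how much data Spark shuffles or spills.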

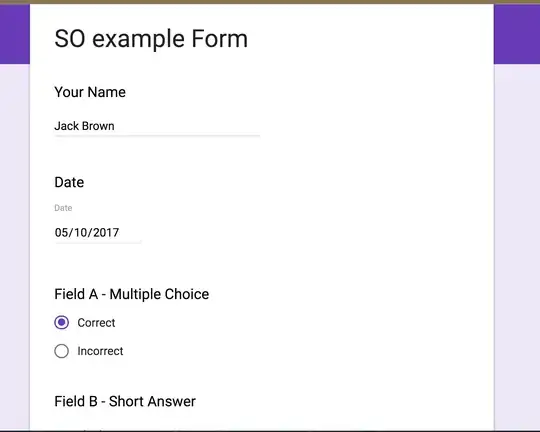

Here is a screenshot from the Spark UI, if it helps: