TL;DR:

numpy.cos() takes roughly 25-30% longer on particular inputs (for example, exactly 24000.0). Adding a small delta (+0.01) makes numpy.cos() run at its usual speed again.

I have no idea why.

I stumbled across a strange problem while working with numpy. I was checking cache behavior and accidentally plotted the wrong thing: how the running time of numpy.cos(X) depends on X. Here is my modified code (copied from my Jupyter notebook):

    import numpy as np
    import timeit

    st = 'import numpy as np'
    cmp = []
    cmp_list = []
    left = 0
    right = 50000
    step = 1000

    # Loop for additional average smoothing
    for _ in range(10):
        cmp_list = []
        # Calculate np.cos depending on its argument
        for i in range(left, right, step):
            s = timeit.timeit('np.cos({})'.format(i), number=15000, setup=st)
            cmp_list.append(int(s * 1000) / 1000)
        cmp.append(cmp_list)

    # Calculate average times
    av = [np.average([cmp[i][j] for i in range(len(cmp))]) for j in range(len(cmp[0]))]

    # Draw the graph
    import matplotlib.pyplot as plt

    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    plt.plot(range(left, right, step), av, marker='.')
    plt.show()

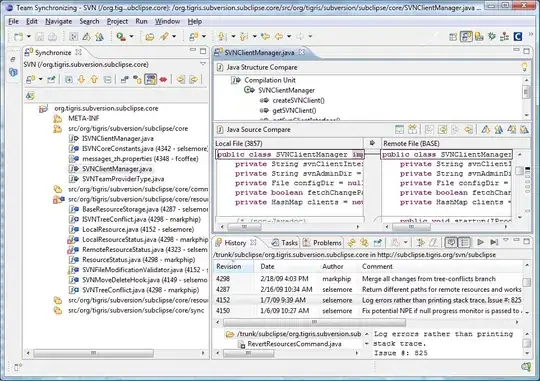

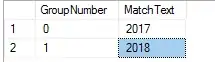

The graph looked like this:

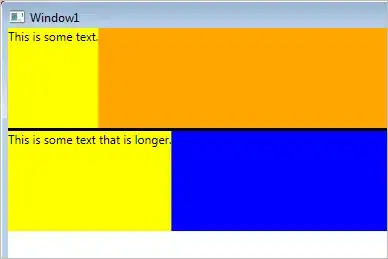

At first I thought it was just a random glitch. I re-ran my cells, but the result was nearly the same. So I started playing with the step parameter, the number of calls, and the length of the averaging list. None of it had any effect on this number:

And even closer:

After that, range was no longer useful (it can't step by floats), so I timed np.cos manually:

    print(timeit.timeit('np.cos({})'.format(24000.01), number=5000000, setup=st))
    print(timeit.timeit('np.cos({})'.format(24000.00), number=5000000, setup=st))
    print(timeit.timeit('np.cos({})'.format(23999.99), number=5000000, setup=st))

And the result was:

    3.4297256958670914
    4.337243619374931
    3.4064380447380245

np.cos() takes about 26% longer on exactly 24000.00 than on 24000.01 (4.34 s vs. 3.43 s)!
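The slowdown can be checked directly against the three timings above. This small sketch only redoes the arithmetic on the numbers reported in this post (5,000,000 calls each); it does not measure anything new:

```python
# Timings reported above, in seconds for 5,000,000 calls each.
t_slow = 4.337243619374931  # np.cos(24000.00)
t_neighbours = {
    24000.01: 3.4297256958670914,
    23999.99: 3.4064380447380245,
}

ratios = []
for x, t in t_neighbours.items():
    ratio = t_slow / t  # how many times slower 24000.00 is than its neighbour
    ratios.append(ratio)
    print(f"24000.00 vs {x}: {ratio:.3f}x ({(ratio - 1) * 100:.0f}% slower)")
```

Both neighbours come out at roughly 1.26x to 1.27x, i.e. about a quarter slower.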

There were other strange numbers like this one (somewhere around 500000; I don't remember exactly).

I looked through the numpy documentation and its source code, and found nothing about this effect. I know that trigonometric functions use different algorithms depending on the magnitude of the argument, the required precision, etc., but it confuses me that exact round numbers can take so much longer to compute.
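For context on "different algorithms depending on magnitude": a C math library typically reduces x to a small remainder r = x - k*pi/2 and then evaluates sin or cos of r according to the quadrant k mod 4. The sketch below is a deliberately naive version of that idea, assuming a single double-precision pi/2 constant; real libraries (glibc, for example) use extra-precision constants and several code paths, which is one plausible source of input-dependent timing, although this sketch itself does not reproduce any slowdown. `naive_cos` is a hypothetical helper for illustration only:

```python
import math


def naive_cos(x: float) -> float:
    """Evaluate cos(x) via a simplified quadrant-based argument reduction.

    Illustration only: x is reduced to r in about [-pi/4, pi/4] using a
    single double-precision pi/2, which loses accuracy for very large x.
    Real libm implementations use extra-precision constants instead.
    """
    half_pi = math.pi / 2
    k = round(x / half_pi)   # nearest multiple of pi/2 (a Python int)
    r = x - k * half_pi      # reduced argument
    quadrant = k % 4         # always 0..3, even for negative k
    # cos(r + k*pi/2) cycles through cos(r), -sin(r), -cos(r), sin(r)
    if quadrant == 0:
        return math.cos(r)
    if quadrant == 1:
        return -math.sin(r)
    if quadrant == 2:
        return -math.cos(r)
    return math.sin(r)


for x in (0.5, 23999.99, 24000.0, 24000.01):
    print(x, naive_cos(x), math.cos(x))
```

For arguments of this size the naive reduction still agrees with `math.cos` to many digits; the accuracy problems that force libraries into more careful (and slower) paths appear for much larger or specially placed arguments.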

Why does np.cos() have this strange effect? Is it some kind of processor side effect (since numpy.cos relies on C functions whose behavior can depend on the processor)? I have an Intel Core i5 with Ubuntu installed, in case that helps anyone.
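One way to narrow down whether NumPy itself is involved is to time Python's `math.cos`, which calls the platform C library's `cos` directly, on the same inputs. If `math.cos` shows the same bump at 24000.0, the cost most likely comes from the C math library rather than NumPy. This is a sketch under that assumption; note that some NumPy builds may use their own vectorized routines instead of libm, and the call count here is lowered from the original 5,000,000 to keep the run short:

```python
import math
import timeit

import numpy as np

# Inputs from the question: the "slow" value and its two neighbours.
VALUES = (23999.99, 24000.0, 24000.01)
NUMBER = 200_000  # fewer calls than the original 5,000,000; raise for less noise


def time_call(func, x: float, number: int = NUMBER) -> float:
    """Return the total seconds spent on `number` calls of func(x)."""
    return timeit.timeit(lambda: func(x), number=number)


results = {}
for x in VALUES:
    results[x] = (time_call(math.cos, x), time_call(np.cos, x))
    print(f"{x:>10}: math.cos {results[x][0]:.3f} s   np.cos {results[x][1]:.3f} s")
```

Both columns include the same lambda-call overhead, so only the differences between rows are meaningful.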

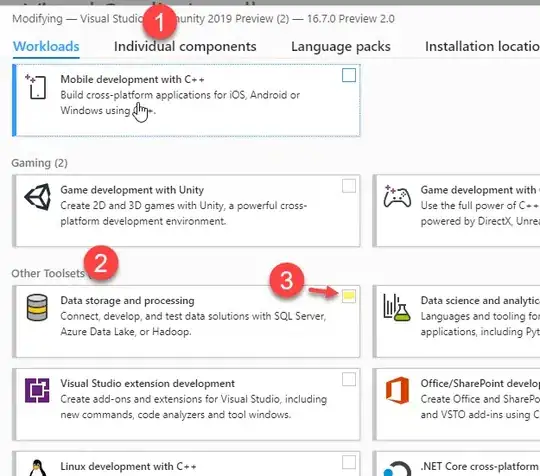

Edit 1: I tried to reproduce this on another machine with an AMD Ryzen 5. The results are simply unstable. Here are graphs for three sequential runs of the same code:

    import numpy as np
    import timeit

    s = 'import numpy as np'
    times = []
    x_ranges = np.arange(23999, 24001, 0.01)

    for x in x_ranges:
        times.append(timeit.timeit('np.cos({})'.format(x), number=100000, setup=s))

    # ---------------
    import matplotlib.pyplot as plt

    fig = plt.figure()
    ax = fig.add_subplot(111)
    plt.plot(x_ranges, times)
    plt.show()

Well, there are some patterns (such as a mostly consistent left part and an inconsistent right part), but they differ a lot from the runs on the Intel processor. It looks like this really comes down to processor-specific behavior, and AMD's behavior is much more predictable in its nondeterminism :)
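Since single `timeit.timeit` runs are noisy, a common way to steady such curves is `timeit.repeat` and keeping the minimum per point, because background load can only add time, never remove it. A minimal sketch, assuming a coarser grid (step 0.25) and smaller call counts than the code above to keep it quick; `min_time` is a hypothetical helper name:

```python
import timeit

import numpy as np


def min_time(x: float, number: int = 20_000, repeat: int = 5) -> float:
    """Best-of-`repeat` time per np.cos(x) call, in seconds."""
    stmt = f"np.cos({x!r})"  # repr gives a float literal that round-trips exactly
    runs = timeit.repeat(stmt, setup="import numpy as np", number=number, repeat=repeat)
    return min(runs) / number


xs = np.arange(23999, 24001, 0.25)
per_call = [min_time(float(x)) for x in xs]
for x, t in zip(xs, per_call):
    print(f"{x:10.2f}  {t * 1e9:8.1f} ns per call")
```

Plotting `per_call` against `xs` as before should make it easier to tell a real, repeatable bump from run-to-run jitter.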

P.S. @WarrenWeckesser, thanks for the `np.arange` function. It is really useful, but, as expected, it changes nothing in the results.