Search engines usually keep this a secret, since this is a large part of how the magic is done (i.e the proprietary bit), so I can only make educated guesses.

Actual logical/theory stuff

However, I think we need to start with the appreciation that it's likely not two completely independent scores we're combining. We're probably using all of the data in all of the places, instead of hand-picking what and where. Let's look at a potential example:

query: "dog"

returned objects to rank:

1. "dogs are awesome! find out more about owning a dog today!"

Query relevance: 9/10

From: some obscure blog that no-one cares about (2/10 according to PageRank)

2. "doge memes for you. Get the finest memes - doge and more!"

Query relevance: 7/10 (only 1 letter difference! Could be a typo, maybe?)

From: 9gag, first search result for anything trendy-related, so it must be good (9/10 according to PageRank)

However you try to bend and skew and weight the data, 9gag will end up on top despite being obviously wrong (sorry about the ridiculous example). Clearly, it's not as simply as shoving these two numbers together.

Speculation time

(notice how this section is longer than the previous one. Take it with a grain of salt.)

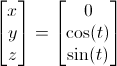

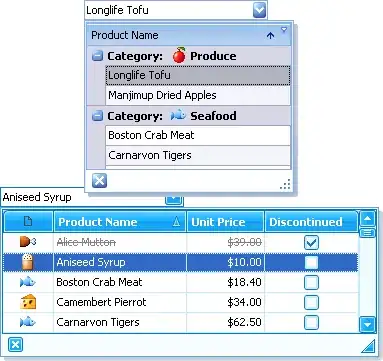

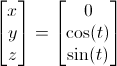

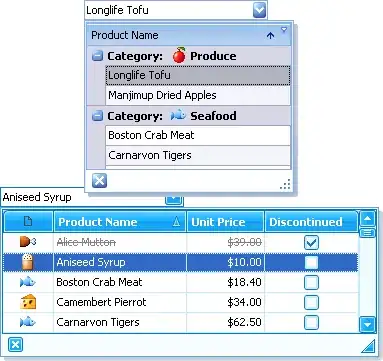

Imagine the entire web as a graph (like a graph theory graph), or a 'map' of sorts, with interconnected stuff. The distances between points are the PageRank distances (some measure of how close-knit PageRank deems the two sites, where higher represents larger distance and in turn lower PageRank score - therefore, pr_n=1/sum(length of all edges connecting to n)), while the 'weights' inside the circles are the relevance to your query. Our job is to find the numbers which are relatively close to their peers (i.e high PageRank score), but also have higher weights. We can then use an algorithm of your choosing to extract the ones that are best. But this way, we're still only getting the results we got before, where dogs and doge are only 1 letter apart. The reasoning is, we're ignoring the query score of the other pages. Therefore, what we'll do is as follows:

- Let's say we start with this graph:

(yes I realise it's not complete and missing some connections. But I have reason to believe @Joebevo is a human, who would appreciate a visually-interpretable graph and maths that doesn't go on for half an hour.)

Blue represents PageRank distance (i.e how far the pages are from each other, so lower average PageRank distance to all connected nodes represents higher PageRank score).

- We'll take the most-connected node first: the blue node. We'll look at all of its surroundings, and take our score of '8' and subdivide it up, weighted according to the PageRank scores around it. These new numbers are represented by purple text.

- Next, we divide these numbers by the nodes they are connected to (division since the lower the PageRank distance, the better, but with the relevance, higher is better), giving those nodes a new value (represented by white). This is finally a ranking score! (although, it is not the final score, since we have not taken a bunch of distances into account yet):

How can we see what we have done makes sense? Well, look back at the first graph image. The green node was small and far away, and so it ended up getting a low score in this graph. Meanwhile, the purple node was big and (relatively) close to the blue, so it scored the highest. The red node was even closer, but due to its tiny size, it came only second place.

Mathematically, we've not done anything complex - we've simply worked out an 'average' of sorts of the two scores, weighted by the importance of the middle node. This is the sort of algorithm that confuses 'doge' with 'dog'. The red node knows nothing about the orange, all they care about is the blue. To fix this, we need to repeat the process.

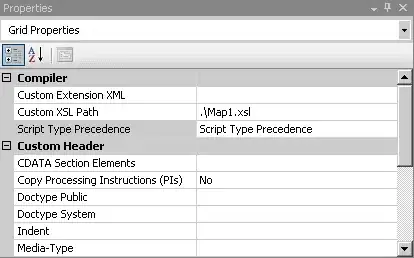

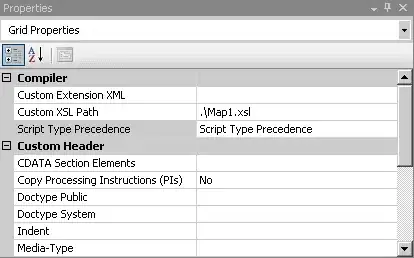

To decide which node to go to next, we'll use this algorithm (it's based on the theory behind the one used in Dijkstra, the most efficient path-finding algorithm):

- So, we'll go onto the node with the next-most connections. In this case, they're all tied (3), so we'll go onto the highest-scoring node (note that if the scores are also tied, which one you choose makes no difference to the output), so purple. We'll simply repeat the process, to get: (new distances in orange, new sizes in teal)

Note that with the white-text nodes, we can multiply the distance instead of divide, since we've already normalised it to a positive scale (jargon for 'made both axis increase as result gets more accurate, instead of one increasing and another decreasing').

The only node we've not updated or used in an update (that still counts as touched, since a some connection between it and a sister node was altered) since the last update is orange, so we'll go there now. (using purple for new nodes, green for new lines)

and then we'll go to red (green nodes, black lines):

and finally (before we halt) to green (red nodes, red lines):

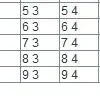

So, to look through the results:

- Purple, blue and orange seem perfectly ordered, based on common sense! Of course, the numbers are a lot different from a simple average, and this is good because it:

- Considers all other nodes in the calculation, not just that one node and its one PageRank score

- Is better for comparison with more datapoints, as we are taking a lot of other things into consideration

- What's happened with red and green, however, seems very confusing. They've suddenly shrunk relative to the others, despite red even starting off as the no.2 choice! Is this a mistake?

Let's analyse that second bit. It is indeed quite confusing at first, but we need to look at what we've actually just done on an abstract level. Imagine it like a circuit: there's current flowing from each of the cells/ammeters/powerpacks to the others, but through wires with certain resistance. We're taking each node's value, and spreading it to its neighbour based on the distance. Another analogy is like being the ice-man, carrying ice around to houses in the hot summer. You'll gladly take an equal amount of ice to everyone, but a lot melts on the way to everyone's house. Therefore, everyone gets quantities proportional to their distance (I don't love this analogy, though, since it gives the idea that numbers can just 'leak out' from the nodes)

So, now let's go through step-by-step. Since we go straight to the purple-orange axis, via the red-green axis, we're essentially using them as holding points. Therefore, we're not using them for the first two steps for what they are. This is because, as I mentioned at the start of this section, we've not actually got a complete graph. This would fix it:

Now, not everything needs to be taken account of: only the square root of the subset (i.e 50*sqrt(2)% of the nodes) need to be connected: those which are separated by 1 or 2 nodes, but no more. Otherwise, things would get too clunky, as the algorithm for deciding the next node would get double-recursive - it's bad enough as it is! (to be fair, the mathematical justification is there too, but it's beyond the scope of this answer (but essentially, the numbers would get less close to the 'optimal' answer)).

In conclusion, your notion of a query-independent is technically correct, but it is important to note that it is not combined fully independently from the query. It depends on the other results to form a sort of weighted average, to make sure that two results that are completely on opposite ends of the spectrum are not given the same score (e.g relevance 2 + PR 8 vs relevance 8 + PR 2). An irrelevant query is obviously no more relevant because it has a high PageRank score, and a high PageRank score is useless if it is only obtained as a result of being linked to query-irrelevant pages (e.g although 9gag is linked to from a lot of places, if you see that none of those places have anything to do with dogs, why would that high PageRank score mean anything?).

I know this answer is long, but I hope it answers your question quite clearly. Note that this is just one algorithm used, but it's enough to put 99% of devs off ever attempting a search engine.