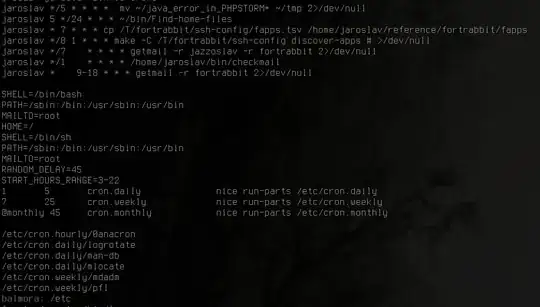

So I have trained an object detection neural network (YOLOv3) to draw bounding boxes around the license plates in photos of cars taken at a variety of tilted and straight angles, and it does this pretty reliably. Now, however, I want to extract the license plate parallelogram from inside its bounding box using image processing alone, without having to train another neural network.

Sample images:

I have tried edge and contour detection with OpenCV's built-in functions, as in the following minimal code, but this only succeeded on a small subset of the images:

```python
import cv2
import matplotlib.pyplot as plt
import numpy as np


def auto_canny(image, sigma=0.25):
    # compute the median of the single-channel pixel intensities
    v = np.median(image)
    # apply automatic Canny edge detection with thresholds around the median
    lower = int(max(0, (1.0 - sigma) * v))
    upper = int(min(255, (1.0 + sigma) * v))
    edged = cv2.Canny(image, lower, upper)
    # return the edge map
    return edged


# Load the image (one crop of a YOLO bounding box)
input_file = "plate_crop.jpg"  # hypothetical path; replace with your own crop
orig_img = cv2.imread(input_file)
if orig_img is None:
    raise FileNotFoundError(input_file)
img = orig_img.copy()

# Split out each channel
blue, green, red = cv2.split(img)

# Run Canny edge detection on each channel
blue_edges = auto_canny(blue)
green_edges = auto_canny(green)
red_edges = auto_canny(red)

# Join the edges back into a single edge map
edges = blue_edges | green_edges | red_edges

# OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns three values
contours, _ = cv2.findContours(edges.copy(), cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

# Keep the 20 largest contours and simplify their convex hulls to polygons
cnts = sorted(contours, key=cv2.contourArea, reverse=True)[:20]
hulls = [cv2.convexHull(cnt) for cnt in cnts]
perims = [cv2.arcLength(hull, True) for hull in hulls]
approxes = [cv2.approxPolyDP(hull, 0.02 * perim, True) for hull, perim in zip(hulls, perims)]
approx_cnts = sorted(approxes, key=cv2.contourArea, reverse=True)

# Take the largest quadrilateral; fall back to the largest polygon if none has 4 vertices
lengths = [len(cnt) for cnt in approx_cnts]
approx = approx_cnts[lengths.index(4)] if 4 in lengths else approx_cnts[0]

# Check the ratio of the detected plate area to the bounding-box area
if cv2.contourArea(approx) / (img.shape[0] * img.shape[1]) > 0.2:
    cv2.drawContours(img, [approx], -1, (0, 255, 0), 1)

# OpenCV loads images as BGR; convert to RGB before displaying with matplotlib
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
plt.show()
```
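Once a four-vertex `approx` has been found, extracting the plate itself usually means warping it to an upright rectangle with `cv2.getPerspectiveTransform` and `cv2.warpPerspective`, and that requires the four source corners in a consistent order. Below is a minimal, stdlib-only sketch of one common ordering heuristic: the smallest x + y is the top-left corner, the largest x + y is the bottom-right, the smallest y - x is the top-right and the largest y - x is the bottom-left. The helper name `order_corners` is hypothetical, and the heuristic assumes image coordinates (y grows downward) and a roughly upright plate; for a quadrilateral rotated close to 45° it can pick the same point twice.

```python
def order_corners(points):
    """Return four (x, y) points ordered top-left, top-right, bottom-right, bottom-left.

    Hypothetical helper: assumes image coordinates (y grows downward) and a
    roughly upright quadrilateral, as with most plate crops.
    """
    if len(points) != 4:
        raise ValueError("expected exactly four corner points")
    pts = [(float(x), float(y)) for x, y in points]
    top_left = min(pts, key=lambda p: p[0] + p[1])      # closest to the origin
    bottom_right = max(pts, key=lambda p: p[0] + p[1])  # farthest from the origin
    top_right = min(pts, key=lambda p: p[1] - p[0])     # large x, small y
    bottom_left = max(pts, key=lambda p: p[1] - p[0])   # small x, large y
    return [top_left, top_right, bottom_right, bottom_left]


# Example: a plate tilted to the right, with corners given in arbitrary order
quad = [(12, 40), (110, 8), (118, 52), (20, 84)]
print(order_corners(quad))
```

With OpenCV, `approx.reshape(4, 2)` gives the four vertices, and the ordered list wrapped in `np.float32(...)` can then serve as the `src` argument of `cv2.getPerspectiveTransform(src, dst)`, with `dst` the corners of the target rectangle in the same order.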

Here are some example results (the top-row images show the output of the edge-detection stage):

Successful:

Unsuccessful:

Partially successful:

And a case where no quadrilateral/parallelogram is found, so the polygon with the largest area is drawn instead:

All of these results were produced with exactly the same set of parameters (thresholds, etc.).
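Note that the same set of parameters does not mean the same Canny thresholds: `auto_canny` derives them from the median intensity of each channel, so with `sigma=0.25` the lower and upper thresholds are 0.75 and 1.25 times the median and change from image to image and from channel to channel. The stdlib-only sketch below just mirrors that arithmetic; the helper name `canny_thresholds` is hypothetical, and it takes a flat list of 0-255 intensities instead of an image array.

```python
import statistics


def canny_thresholds(pixels, sigma=0.25):
    """Mirror auto_canny's threshold formula for a flat list of 0-255 intensities."""
    v = statistics.median(pixels)
    lower = int(max(0, (1.0 - sigma) * v))
    upper = int(min(255, (1.0 + sigma) * v))
    return lower, upper


# A dark crop and a bright crop end up with very different thresholds
dark = [20, 35, 40, 60, 90]
bright = [150, 180, 200, 230, 250]
print(canny_thresholds(dark))    # median 40  -> (30, 50)
print(canny_thresholds(bright))  # median 200 -> (150, 250)
```

For any channel whose median is above 204, the upper threshold is clipped to 255, so the band between the two thresholds narrows for bright crops.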

I have also tried applying the Hough transform with `cv2.HoughLines`, but for some reason the vertically tilted lines are always missed, no matter how low I set the accumulator threshold. Also, when I lower the threshold, I get these diagonal lines out of nowhere:

And the code I used for drawing the Hough lines:

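```python
# Standard Hough transform on the combined edge map (accumulator threshold = 20)
lines = cv2.HoughLines(edges, 1, np.pi / 180, 20)

# cv2.HoughLines returns None when no line reaches the threshold
if lines is not None:
    for line in lines:
        for rho, theta in line:
            # (x0, y0) is the point on the line closest to the origin
            a = np.cos(theta)
            b = np.sin(theta)
            x0 = a * rho
            y0 = b * rho
            # extend 1000 px in both directions along the line direction (-b, a)
            x1 = int(x0 + 1000 * (-b))
            y1 = int(y0 + 1000 * a)
            x2 = int(x0 - 1000 * (-b))
            y2 = int(y0 - 1000 * a)
            cv2.line(img, (x1, y1), (x2, y2), (0, 0, 255), 2)

# Convert BGR to RGB so matplotlib shows the true colors
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
plt.show()
```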
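`cv2.HoughLines` returns each line in normal form, x·cos θ + y·sin θ = ρ, with θ in [0, π) and ρ possibly negative, so a near-vertical line shows up with θ close to 0 or close to π. If the four plate borders can be picked out from those lines (for example two near-horizontal and two near-vertical ones), the plate corners are their pairwise intersections, which come from solving a 2×2 linear system. A minimal stdlib-only sketch, assuming `(rho, theta)` pairs as returned by `cv2.HoughLines`; the name `intersect` is hypothetical.

```python
import math


def intersect(line1, line2, eps=1e-9):
    """Intersect two lines given in Hough normal form (rho, theta).

    Each line satisfies x*cos(theta) + y*sin(theta) = rho. Returns (x, y),
    or None when the lines are (nearly) parallel.
    """
    rho1, theta1 = line1
    rho2, theta2 = line2
    a1, b1 = math.cos(theta1), math.sin(theta1)
    a2, b2 = math.cos(theta2), math.sin(theta2)
    det = a1 * b2 - a2 * b1  # equals sin(theta2 - theta1)
    if abs(det) < eps:
        return None
    # Cramer's rule for [a1 b1; a2 b2] @ [x, y] = [rho1, rho2]
    x = (rho1 * b2 - rho2 * b1) / det
    y = (a1 * rho2 - a2 * rho1) / det
    return x, y


# A vertical line x = 10 (theta = 0) and a horizontal line y = 5 (theta = pi/2)
print(intersect((10.0, 0.0), (5.0, math.pi / 2)))
```

Applied to one line from each border pair, this yields the four candidate corners, which could then be ordered and passed to a perspective warp.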

Is it really this hard to achieve a high success rate using only image processing techniques? Of course, machine learning would make this a piece of cake, but I think it would be overkill, and I don't have the annotated data for it anyway.