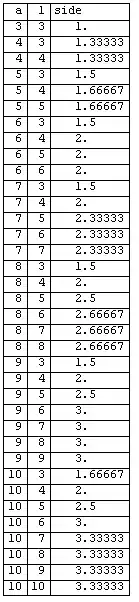

I want to do some large matrix multiplications using multiprocessing.Pool.

Suddenly, when the dimension is higher than 50, it takes an extremely long computation time.

Is there any easy way to be faster?

Here, I don't want to use shared memory like RawArray, because my original code randomly generate the matrix for each time.

The sample code is as follows.

import numpy as np

from time import time

from multiprocessing import Pool

from functools import partial

def f(d):

a = int(10*d)

N = int(10000/d)

for _ in range(N):

X = np.random.randn(a,10) @ np.random.randn(10,10)

return X

# Dimensions

ds = [1,2,3,4,5,6,8,10,20,35,40,45,50,60,62,64,66,68,70,80,90,100]

# Serial processing

serial = []

for d in ds:

t1 = time()

for i in range(20):

f(d)

serial.append(time()-t1)

# Parallel processing

parallel = []

for d in ds:

t1 = time()

pool = Pool()

for i in range(20):

pool.apply_async(partial(f,d), args=())

pool.close()

pool.join()

parallel.append(time()-t1)

# Plot

import matplotlib.pyplot as plt

plt.title('Matrix multiplication time with 10000/d repetitions')

plt.plot(ds,serial,label='serial')

plt.plot(ds,parallel,label='parallel')

plt.xlabel('d (dimension)')

plt.ylabel('Total time (sec)')

plt.legend()

plt.show()

Due to the total computation cost of f(d) is the same for all d, the parallel processing time should be equal.

But the actual output is not.

System info:

Linux-4.15.0-47-generic-x86_64-with-debian-stretch-sid

3.6.8 |Anaconda custom (64-bit)| (default, Dec 30 2018, 01:22:34)

[GCC 7.3.0]

Intel(R) Core(TM) i9-7940X CPU @ 3.10GHz

NOTE I want to use parallel computation as a complicated internal simulation (like

@), not sending data to the child process.