I am not entirely convinced that the spec argument of parallel::makeCluster is strictly the maximum number of cores (actually, logical processors) to use. I have passed detectCores()-1 and detectCores()-2 as spec for some computationally expensive processes, and the number of cores showing activity still equaled detectCores(), despite my intent to leave a little room (here, leaving 1 logical processor free for other processes).

The example below is crude, as I have not captured any quantitative output of the core usage. Please suggest edits.

You can watch core usage in, e.g., Task Manager while running a simple example:

no_cores <- 5

cl <- parallel::makeCluster(no_cores) #, outfile = "debug.txt")

parallel::clusterEvalQ(cl,{

library(foreach)

foreach(i = 1:1e5) %do% {

print(sqrt(i))

}

})

parallel::stopCluster(cl)

#browseURL("debug.txt")

Then rerun using, e.g., detectCores()-1:

no_cores <- parallel::detectCores()-1

cl <- parallel::makeCluster(no_cores) #, outfile = "debug.txt")

parallel::clusterEvalQ(cl,{

library(foreach)

foreach(i = 1:1e5) %do% {

print(sqrt(i))

}

})

parallel::stopCluster(cl)

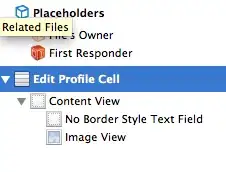

All 16 cores appear to engage despite no_cores being specified as 15.

Based on the above example and my very crude (visual only) analysis, it looks possible that spec sets the maximum number of cores to use over the course of the process, but the process does not appear to be running on multiple cores simultaneously. As a novice at parallelization, I suspect a more appropriate example is needed to support or reject this.
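One way to put numbers on this, rather than eyeballing Task Manager, is to have each task record its process ID and its start and end times. The sketch below is only my guess at what a more appropriate example could look like: timed_task, n_workers and the 0.5 second Sys.sleep (a stand-in for real work that does not actually load the CPU) are made up for illustration. If the tasks really run simultaneously, intervals from different PIDs should overlap, and the elapsed time should be closer to 1 second than to the 2 seconds a sequential run would need.

```r
library(parallel)

n_workers <- 2  # hypothetical small value so the sketch runs on most machines
cl <- makeCluster(n_workers)

# Hypothetical helper: each task reports which process ran it and when.
timed_task <- function(i) {
  start <- Sys.time()
  Sys.sleep(0.5)  # stand-in for real work
  data.frame(task = i, pid = Sys.getpid(),
             start = as.numeric(start), end = as.numeric(Sys.time()))
}

# Run 4 tasks across the workers and time the whole call.
elapsed <- system.time(
  res <- do.call(rbind, parLapply(cl, 1:4, timed_task))
)[["elapsed"]]
stopCluster(cl)

# Express times relative to the earliest start so overlaps are easy to read.
t0 <- min(res$start)
res$start <- round(res$start - t0, 2)
res$end <- round(res$end - t0, 2)

n_pids <- length(unique(res$pid))  # number of distinct worker processes used
print(res)
print(elapsed)
print(n_pids)
```

Rows with different pid values but overlapping start/end intervals would indicate that the workers were busy at the same time.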

The package documentation describes spec as "A specification appropriate to the type of cluster."

I've dug into the relevant parallel documentation and cannot determine what, exactly, spec is doing. But I am not convinced the argument necessarily controls the max number of cores (logical processors) to engage.
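As I read ?makePSOCKcluster, for the default "PSOCK" type a numeric spec is the number of worker copies of R to start on localhost, which is a count of processes rather than a cap on cores. A minimal check of that reading, assuming a machine that can start two local workers (the value 2 is arbitrary):

```r
library(parallel)

# With the default cluster type, an integer spec should start that many
# worker R processes on the local machine.
cl <- makeCluster(2)

n_workers <- length(cl)     # a cluster object is a list with one entry per worker
cluster_class <- class(cl)

stopCluster(cl)

print(n_workers)
print(cluster_class)
```

If that reading is right, n_workers simply echoes spec, and nothing in the object ties a worker to a particular logical processor.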

Here is where I think I could be wrong in my assumptions: if we specify spec as less than the number of the machine's cores (logical processors) then, assuming no other large processes are running, the machine should never reach detectCores() times 100% CPU usage (i.e., 1600% CPU usage with 16 cores).

However, when I monitor the CPUs on Windows using Resource Monitor, it does appear that there are, in fact, no_cores Rscript.exe images running.
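The same count can be captured from within R instead of from Resource Monitor by asking every worker for its process ID. This is a small sketch under the assumption that two local workers can be started; worker_pids and master_pid are names I made up:

```r
library(parallel)

cl <- makeCluster(2)  # arbitrary small number of workers for illustration

# Ask each worker for its own process ID; one value comes back per worker.
worker_pids <- unlist(clusterEvalQ(cl, Sys.getpid()))
master_pid <- Sys.getpid()  # the session that launched the cluster

stopCluster(cl)

print(worker_pids)
print(master_pid)
```

The number of distinct worker PIDs should match spec, and the launching session is a further process on top of those. Note that this counts R processes, not the logical processors they run on; as far as I know, the operating system is free to move each worker between cores, which may be why all 16 graphs show some activity.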