I am trying to understand the pros and cons of asynchronous versus synchronous HTTP request processing. I am using Dropwizard with Jersey as my framework. My test compares the two approaches; this is my code:

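    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.TimeUnit;

    import javax.ws.rs.GET;
    import javax.ws.rs.Path;
    import javax.ws.rs.container.AsyncResponse;
    import javax.ws.rs.container.Suspended;

    @Path("/")
    public class RootResource {

        // Custom pool that runs the business logic for the /async endpoint
        private final ExecutorService executor;

        public RootResource(int threadPoolSize) {
            executor = Executors.newFixedThreadPool(threadPoolSize);
        }

        // Blocks a Jetty worker thread for the full duration of the request
        @GET
        @Path("/sync")
        public String sayHello() throws InterruptedException {
            TimeUnit.SECONDS.sleep(1L);
            return "ok";
        }

        // Returns the Jetty worker thread immediately; the work runs on the custom pool
        @GET
        @Path("/async")
        public void sayHelloAsync(@Suspended final AsyncResponse asyncResponse) throws Exception {
            executor.submit(() -> {
                try {
                    doSomeBusiness();
                    asyncResponse.resume("ok");
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and fail the request instead of leaving it suspended
                    Thread.currentThread().interrupt();
                    asyncResponse.resume(e);
                }
            });
        }

        // Simulates one second of blocking business logic
        private void doSomeBusiness() throws InterruptedException {
            TimeUnit.SECONDS.sleep(1L);
        }
    }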

The sync API runs on a worker thread maintained by Jetty, while the async API runs mainly in the custom thread pool. Here are my JMeter results:

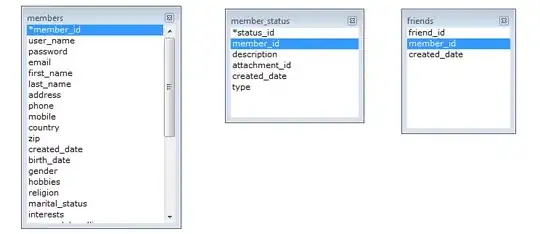

Test 1: 500 Jetty worker threads, /sync endpoint

Test 2: 500 custom threads, /async endpoint

As the results show, there is not much difference between the two approaches.
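To sanity-check that observation outside of Jetty, here is a minimal plain-Java sketch of the two threading models. It is only a simulation under stated assumptions: `PoolComparison`, `runSync`, and `runAsync` are hypothetical names, the "server" pool merely stands in for Jetty's worker threads, and the delay is scaled down from 1 s so it finishes quickly. The point it illustrates is that when the blocking call still occupies one thread per request, the time for a batch is governed by the size of whichever pool runs the blocking call, so equal pool sizes should give similar numbers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class PoolComparison {

    // Stand-in for the blocking business call (hypothetical, scaled down from 1 s).
    static void blockingWork(long delayMillis) {
        try {
            TimeUnit.MILLISECONDS.sleep(delayMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // "Sync" model: each request holds a server thread for the whole blocking call.
    static long runSync(int serverThreads, int requests, long delayMillis) throws Exception {
        ExecutorService server = Executors.newFixedThreadPool(serverThreads);
        long start = System.nanoTime();
        List<CompletableFuture<Void>> pending = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            pending.add(CompletableFuture.runAsync(() -> blockingWork(delayMillis), server));
        }
        CompletableFuture.allOf(pending.toArray(new CompletableFuture[0])).get();
        server.shutdown();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    // "Async" model: the server thread only hands the request off;
    // the blocking call runs on a separate worker pool.
    static long runAsync(int serverThreads, int workerThreads, int requests, long delayMillis)
            throws Exception {
        ExecutorService server = Executors.newFixedThreadPool(serverThreads);
        ExecutorService workers = Executors.newFixedThreadPool(workerThreads);
        long start = System.nanoTime();
        List<CompletableFuture<Void>> pending = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            CompletableFuture<Void> done = new CompletableFuture<>();
            pending.add(done);
            server.execute(() -> workers.execute(() -> {
                blockingWork(delayMillis);
                done.complete(null); // plays the role of asyncResponse.resume("ok")
            }));
        }
        CompletableFuture.allOf(pending.toArray(new CompletableFuture[0])).get();
        server.shutdown();
        workers.shutdown();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    public static void main(String[] args) throws Exception {
        // 40 requests, 100 ms each, 10 threads doing the blocking work in both cases.
        System.out.println("sync  elapsed ms: " + runSync(10, 40, 100));
        System.out.println("async elapsed ms: " + runAsync(2, 10, 40, 100));
    }
}
```

In this sketch the async variant needs only 2 "server" threads to keep 10 workers busy, which hints at where the two approaches might diverge: the async model frees the server threads, but it does not make the blocking work itself any cheaper.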

My question is: what are the differences between these two approaches, and which pattern should I use in which scenario?

Related topic: Performance difference between Synchronous HTTP Handler and Asynchronous HTTP Handler

Update

I reran the test with 10 delays, as suggested:

- sync-500-server-thread

- async-500-workerthread