I have a lot of models that aren't unwrapped (they have no UV coordinates), and they are too complex to unwrap by hand. So I decided to texture them using a seamless cubemap:

[VERT]

    attribute vec4 a_position;
    varying vec3 texCoord;
    uniform mat4 u_worldTrans;
    uniform mat4 u_projTrans;
    ...

    void main()
    {
        gl_Position = u_projTrans * u_worldTrans * a_position;
        texCoord = vec3(a_position);
    }

[FRAG]

    varying vec3 texCoord;
    uniform samplerCube u_cubemapTex;

    void main()
    {
        gl_FragColor = textureCube(u_cubemapTex, texCoord);
    }

It works, but the result looks odd because the texturing depends on the vertex positions. If my model is more complex than a cube or a sphere, I see visible seams, and the texture has low resolution on some parts of the object.

Reflection mapping covers the model well, but it produces a mirror effect.
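To show why position-based lookups break down on shapes that are not roughly a cube or a sphere, here is a small sketch of how a cubemap lookup picks a face. It is plain Java rather than shader code; `CubeLookup` and `lookup` are hypothetical names, and the face selection follows the major-axis rule from the OpenGL specification as I understand it (tie-breaking between equal axes is my own choice). On an elongated box, two points on the same flat top surface can land on different cubemap faces, which is where a seam would show up.

```java
final class CubeLookup {
    static final String[] FACES = { "+X", "-X", "+Y", "-Y", "+Z", "-Z" };

    /**
     * Maps a non-zero direction to {face, s, t}.
     * face is an index into FACES; s and t are in [0, 1].
     */
    static double[] lookup(double x, double y, double z) {
        double ax = Math.abs(x), ay = Math.abs(y), az = Math.abs(z);
        int face;
        double sc, tc, ma;
        if (ax >= ay && ax >= az) {        // X is the major axis
            face = x >= 0 ? 0 : 1;
            sc = x >= 0 ? -z : z;
            tc = -y;
            ma = ax;
        } else if (ay >= az) {             // Y is the major axis
            face = y >= 0 ? 2 : 3;
            sc = x;
            tc = y >= 0 ? z : -z;
            ma = ay;
        } else {                           // Z is the major axis
            face = z >= 0 ? 4 : 5;
            sc = z >= 0 ? x : -x;
            tc = -y;
            ma = az;
        }
        return new double[] { face, 0.5 * (sc / ma + 1.0), 0.5 * (tc / ma + 1.0) };
    }

    public static void main(String[] args) {
        // Two vertices on the top (+Y) surface of a hypothetical box
        // with half-extents (4, 1, 1), used directly as lookup directions.
        double[] near = lookup(0.5, 1.0, 0.0);
        double[] far = lookup(3.0, 1.0, 0.0);
        System.out.println("near the centre: face " + FACES[(int) near[0]]);
        System.out.println("far along X:     face " + FACES[(int) far[0]]);
    }
}
```

Both points lie on a surface whose normal is +Y, but the second one is sampled from the +X face because its X component dominates. The division by the major-axis length also squeezes long surfaces into a small part of a face, which would explain the low resolution.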

Reflection:

[VERT]

    attribute vec3 a_normal;
    varying vec3 v_reflection;
    uniform mat4 u_matViewInverseTranspose;
    uniform vec3 u_cameraPos;
    ...

    void main()
    {
        mat3 normalMatrix = mat3(u_matViewInverseTranspose);
        vec3 n = normalize(normalMatrix * a_normal);

        //calculate reflection
        vec3 vView = a_position.xyz - u_cameraPos.xyz;
        v_reflection = reflect(vView, n);
        ...
    }

How can I implement something like the reflection mapping, but with a “sticky” effect, as if the texture were attached to the vertices and did not move? Each side of the model should display the corresponding side of the cubemap, so that the result looks like ordinary 2D texturing. Any advice will be appreciated.

UPDATE 1

Following the comments, I decided to calculate cubemap UV coordinates. Since I use LibGDX, some names may differ from the OpenGL ones.

Shader class:

    public class CubemapUVShader implements com.badlogic.gdx.graphics.g3d.Shader {
        ShaderProgram program;
        Camera camera;
        RenderContext context;
        Matrix4 viewInvTraMatrix, viewInv;
        Texture texture;
        Cubemap cubemapTex;

        ...

        @Override
        public void begin(Camera camera, RenderContext context) {
            this.camera = camera;
            this.context = context;
            program.begin();

            program.setUniformMatrix("u_matProj", camera.projection);
            program.setUniformMatrix("u_matView", camera.view);

            cubemapTex.bind(1);
            program.setUniformi("u_textureCubemap", 1);

            texture.bind(0);
            program.setUniformi("u_texture", 0);

            context.setDepthTest(GL20.GL_LEQUAL);
            context.setCullFace(GL20.GL_BACK);
        }

        @Override
        public void render(Renderable renderable) {
            program.setUniformMatrix("u_matModel", renderable.worldTransform);

            viewInvTraMatrix.set(camera.view);
            viewInvTraMatrix.mul(renderable.worldTransform);
            program.setUniformMatrix("u_matModelView", viewInvTraMatrix);

            viewInvTraMatrix.inv();
            viewInvTraMatrix.tra();
            program.setUniformMatrix("u_matViewInverseTranspose", viewInvTraMatrix);

            renderable.meshPart.render(program);
        }

        ...
    }
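For context, this is my understanding of why `render` builds an inverse-transpose matrix for the normals (the `inv()` followed by `tra()` calls above). The sketch is plain Java on 3x3 arrays, not LibGDX code; `NormalMatrix` and its methods are hypothetical names, and the non-uniform scale is just an example transform. Under such a scale, a normal multiplied by the matrix itself stops being perpendicular to the surface, while the inverse transpose keeps it perpendicular.

```java
final class NormalMatrix {
    /** Inverse transpose of a 3x3 matrix, computed as cofactors / determinant. */
    static double[][] inverseTranspose(double[][] m) {
        double[][] c = new double[3][3];
        for (int i = 0; i < 3; i++) {
            for (int j = 0; j < 3; j++) {
                // Cyclic indices give the signed cofactor directly.
                int i1 = (i + 1) % 3, i2 = (i + 2) % 3;
                int j1 = (j + 1) % 3, j2 = (j + 2) % 3;
                c[i][j] = m[i1][j1] * m[i2][j2] - m[i1][j2] * m[i2][j1];
            }
        }
        double det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
        if (det == 0.0) {
            throw new IllegalArgumentException("matrix is singular");
        }
        for (int i = 0; i < 3; i++) {
            for (int j = 0; j < 3; j++) {
                c[i][j] /= det;
            }
        }
        return c;
    }

    /** Matrix times column vector. */
    static double[] mul(double[][] m, double[] v) {
        double[] r = new double[3];
        for (int i = 0; i < 3; i++) {
            r[i] = m[i][0] * v[0] + m[i][1] * v[1] + m[i][2] * v[2];
        }
        return r;
    }

    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    public static void main(String[] args) {
        double[][] scale = { { 2, 0, 0 }, { 0, 1, 0 }, { 0, 0, 1 } }; // example transform
        double[] tangent = { 1, 1, 0 };   // lies in the surface
        double[] normal = { 1, -1, 0 };   // perpendicular to the tangent

        double[] t = mul(scale, tangent);
        double[] naive = mul(scale, normal);
        double[] fixed = mul(inverseTranspose(scale), normal);

        System.out.println("dot(tangent, naive normal) = " + dot(t, naive));
        System.out.println("dot(tangent, fixed normal) = " + dot(t, fixed));
    }
}
```

A dot product of zero means the transformed normal is still perpendicular to the transformed surface. Only the inverse-transpose version gives that here.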

Vertex:

    attribute vec4 a_position;
    attribute vec2 a_texCoord0;
    attribute vec3 a_normal;
    attribute vec3 a_tangent;
    attribute vec3 a_binormal;

    varying vec2 v_texCoord;
    varying vec3 v_cubeMapUV;

    uniform mat4 u_matProj;
    uniform mat4 u_matView;
    uniform mat4 u_matModel;
    uniform mat4 u_matViewInverseTranspose;
    uniform mat4 u_matModelView;

    void main()
    {
        gl_Position = u_matProj * u_matView * u_matModel * a_position;
        v_texCoord = a_texCoord0;

        //CALCULATE CUBEMAP UV (WRONG!)
        //I decided that tm_l2g mentioned in comments is u_matView * u_matModel
        v_cubeMapUV = vec3(u_matView * u_matModel * vec4(a_normal, 0.0));

        /*
        mat3 normalMatrix = mat3(u_matViewInverseTranspose);
        vec3 t = normalize(normalMatrix * a_tangent);
        vec3 b = normalize(normalMatrix * a_binormal);
        vec3 n = normalize(normalMatrix * a_normal);
        */
    }
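One thing I noticed about the line marked WRONG: because it multiplies by `u_matView`, the lookup direction is in view space, so it changes whenever the camera rotates. The sketch below illustrates that in plain Java; `StickyLookup`, `rotateY` and `majorAxis` are hypothetical helpers, and the 90 degree rotation about Y merely stands in for some view rotation. I am not claiming this is the whole fix, only that a view-dependent direction cannot give a "sticky" texture.

```java
final class StickyLookup {
    /** Rotates v about the Y axis by the given angle in degrees. */
    static double[] rotateY(double[] v, double degrees) {
        double a = Math.toRadians(degrees);
        double c = Math.cos(a), s = Math.sin(a);
        return new double[] { c * v[0] + s * v[2], v[1], -s * v[0] + c * v[2] };
    }

    /** Names the cubemap face a direction would sample (its dominant axis). */
    static String majorAxis(double[] d) {
        double ax = Math.abs(d[0]), ay = Math.abs(d[1]), az = Math.abs(d[2]);
        if (ax >= ay && ax >= az) {
            return d[0] >= 0 ? "+X" : "-X";
        }
        if (ay >= az) {
            return d[1] >= 0 ? "+Y" : "-Y";
        }
        return d[2] >= 0 ? "+Z" : "-Z";
    }

    public static void main(String[] args) {
        double[] objectNormal = { 0, 0, 1 };                 // a_normal of some vertex
        double[] viewNormal = rotateY(objectNormal, 90.0);   // after a view rotation

        System.out.println("object-space lookup: " + majorAxis(objectNormal));
        System.out.println("view-space lookup:   " + majorAxis(viewNormal));
    }
}
```

The object-space direction always selects the same face for a given vertex, whereas the view-space direction selects a different face once the view rotates.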

Fragment:

    varying vec2 v_texCoord;
    varying vec3 v_cubeMapUV;

    uniform sampler2D u_texture;
    uniform samplerCube u_textureCubemap;

    void main()
    {
        vec3 cubeMapUV = normalize(v_cubeMapUV);
        vec4 diffuse = textureCube(u_textureCubemap, cubeMapUV);

        // diffuse is a vec4, so assign the whole colour rather than only .rgb
        gl_FragColor = diffuse;
    }

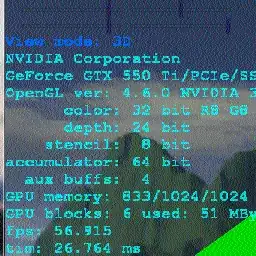

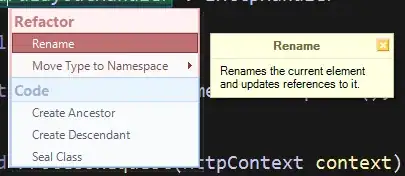

The result is completely wrong:

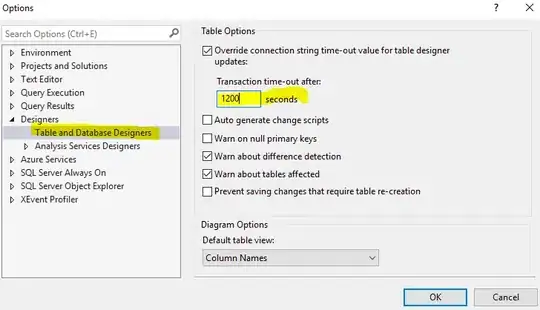

I expect something like this:

UPDATE 2

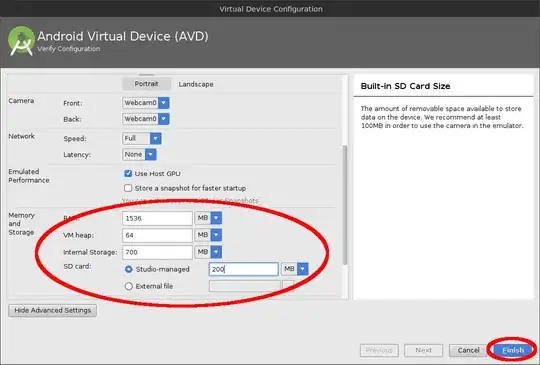

The texture looks stretched on the sides and distorted in some places if I use the vertex positions as cubemap coordinates in the vertex shader:

    v_cubeMapUV = a_position.xyz;

I uploaded euro.blend, euro.obj and the cubemap files for review.