I put some objects in AR space using ARKit and SceneKit. That works well. Now I'd like to add an additional camera (SCNCamera) that is placed elsewhere in the scene, attached to and positioned by an ordinary SCNNode. It is oriented to show me the current scene from another (fixed) perspective.

Now I'd like to show this additional SCNCamera feed on, e.g., an SCNPlane (as the diffuse contents of its first material), like a TV screen. Of course I am aware that it will only display the SceneKit content within the camera's view and not the rest of the ARKit image (which is only available through the main camera). A simple colored background would then be fine.

I have seen tutorials that describe how to play a video file on a virtual display in AR space, but I need a real-time camera feed from my own current scene.

I defined these objects:

    let camera = SCNCamera()
    let cameraNode = SCNNode()

Then in viewDidLoad I do this:

    camera.usesOrthographicProjection = true
    camera.orthographicScale = 9
    camera.zNear = 0
    camera.zFar = 100
    cameraNode.camera = camera
    sceneView.scene.rootNode.addChildNode(cameraNode)

Then I call my setup function to place the virtual display next to all my AR content and to position the cameraNode as well (pointing in the direction of the objects in the scene):

    cameraNode.position = SCNVector3(initialStartPosition.x, initialStartPosition.y + 0.5, initialStartPosition.z)
    let cameraPlane = SCNNode(geometry: SCNPlane(width: 0.5, height: 0.3))
    cameraPlane.geometry?.firstMaterial?.diffuse.contents = cameraNode.camera
    cameraPlane.position = SCNVector3(initialStartPosition.x - 1.0, initialStartPosition.y + 0.5, initialStartPosition.z)
    sceneView.scene.rootNode.addChildNode(cameraPlane)

Everything compiles and loads... The display shows up at the given position, but it stays entirely gray. Nothing is displayed at all from the SCNCamera I put in the scene. Everything else in the AR scene works well, I just don't get any feed from that camera.
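A possible explanation, offered tentatively: the content types that the documentation lists for `SCNMaterialProperty.contents` (colors, images, layers, SpriteKit scenes and textures, Metal textures, and a few others) do not include `SCNCamera`, so the assignment above is probably ignored. One direction that might work is to wrap the SceneKit scene in an `SK3DNode` inside an `SKScene` and use that SpriteKit scene as the diffuse contents. The following is an untested sketch under that assumption; `makeCameraFeedPlane` and the 1000 pixels-per-meter canvas size are hypothetical choices made for illustration, not part of the code above.

```swift
import SceneKit
import SpriteKit
import UIKit

/// Hypothetical helper: builds a "TV screen" plane that shows `scene`
/// as seen from `cameraNode`, via a SpriteKit scene used as material contents.
func makeCameraFeedPlane(scene: SCNScene,
                         cameraNode: SCNNode,
                         width: CGFloat = 0.5,
                         height: CGFloat = 0.3) -> SCNNode {
    // Pixel size of the SpriteKit canvas (assumption: 1000 px per meter of plane).
    let pixelSize = CGSize(width: width * 1000, height: height * 1000)

    // The SpriteKit scene supplies the simple colored background.
    let skScene = SKScene(size: pixelSize)
    skScene.backgroundColor = .darkGray

    // SK3DNode renders a SceneKit scene from a chosen point of view.
    let feed = SK3DNode(viewportSize: pixelSize)
    feed.scnScene = scene
    feed.pointOfView = cameraNode
    feed.position = CGPoint(x: pixelSize.width / 2, y: pixelSize.height / 2)
    skScene.addChild(feed)

    // Use the SpriteKit scene as the plane's diffuse contents.
    let plane = SCNPlane(width: width, height: height)
    plane.firstMaterial?.diffuse.contents = skScene
    plane.firstMaterial?.isDoubleSided = true
    return SCNNode(geometry: plane)
}
```

With the names from the question, this would be called as `makeCameraFeedPlane(scene: sceneView.scene, cameraNode: cameraNode)`, and the returned node positioned like `cameraPlane`. Two caveats: the display plane is part of the same scene, so it may show up inside its own feed, and the image may come out vertically flipped, in which case a flip via `diffuse.contentsTransform` might be needed.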

Does anyone have an approach to get this scenario working?

To illustrate this better, I've added some screenshots.

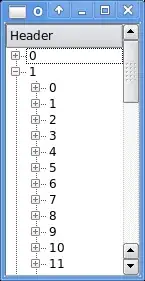

The following shows the image through the SCNCamera, following ARGeo's suggestion. But it takes up the whole screen instead of displaying its contents on an SCNPlane, as I need.

The next screenshot shows the current AR view result that I get with my posted code. As you can see, the gray display plane remains gray; it shows nothing.

The last screenshot is a photomontage showing the result I'd like to get.

How could this be realized? Am I missing something fundamental here?
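Another direction that might fit, sketched here with no claim that it is the intended or best solution: render the scene a second time with an offscreen `SCNRenderer` whose `pointOfView` is the extra camera node, write the result into a Metal texture, and use that texture as the plane's diffuse contents. `OffscreenCameraFeed`, the 1000 x 600 texture size, the `.rgba8Unorm` pixel format, and the clear color are all assumptions made for illustration.

```swift
import SceneKit
import Metal
import CoreGraphics

/// Hypothetical helper: renders `scene` from `pointOfView` into an offscreen Metal texture.
final class OffscreenCameraFeed {
    /// Texture that can be assigned to a material's `diffuse.contents`.
    let texture: MTLTexture
    private let renderer: SCNRenderer
    private let commandQueue: MTLCommandQueue
    private let passDescriptor = MTLRenderPassDescriptor()
    private let viewport: CGRect

    init?(device: MTLDevice, scene: SCNScene, pointOfView: SCNNode,
          width: Int = 1000, height: Int = 600) {
        // The texture must be usable both as a render target and as a shader input.
        let descriptor = MTLTextureDescriptor.texture2DDescriptor(
            pixelFormat: .rgba8Unorm, width: width, height: height, mipmapped: false)
        descriptor.usage = [.renderTarget, .shaderRead]
        guard let texture = device.makeTexture(descriptor: descriptor),
              let queue = device.makeCommandQueue() else { return nil }
        self.texture = texture
        self.commandQueue = queue
        self.viewport = CGRect(x: 0, y: 0, width: width, height: height)

        // A second renderer that shares the scene but looks through the extra camera.
        renderer = SCNRenderer(device: device, options: nil)
        renderer.scene = scene
        renderer.pointOfView = pointOfView

        // Clear to a plain color, then keep the rendered result in the texture.
        passDescriptor.colorAttachments[0].texture = texture
        passDescriptor.colorAttachments[0].loadAction = .clear
        passDescriptor.colorAttachments[0].storeAction = .store
        passDescriptor.colorAttachments[0].clearColor =
            MTLClearColor(red: 0.1, green: 0.1, blue: 0.3, alpha: 1.0)
    }

    /// Encodes one offscreen frame; meant to be called once per displayed frame.
    func render(atTime time: TimeInterval) {
        guard let commandBuffer = commandQueue.makeCommandBuffer() else { return }
        renderer.render(atTime: time, viewport: viewport,
                        commandBuffer: commandBuffer, passDescriptor: passDescriptor)
        commandBuffer.commit()
    }
}
```

A plausible way to use it with the names from the question: create the feed once with `sceneView.device`, set `cameraPlane.geometry?.firstMaterial?.diffuse.contents = feed.texture`, and call `feed.render(atTime: time)` from the `renderer(_:updateAtTime:)` delegate callback. Whether the background in the texture ends up as the clear color or as the scene's own background depends on how the AR view configures the scene, so that part would need checking on a device.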