I am trying to create a plot with ggplot2. The data contains many small values and a few larger ones, and I'd like to display all of them sensibly using logarithmic scaling. This is what I do:

    library(ggplot2)

    plotPointsPre <- ggplot(data = solverEntries, aes(x = val, y = instance,
                                                      color = solver, group = solver))
    ...
    finalPlot <- plotPointsPre + coord_trans(x = 'log10') + geom_point() +
      xlab("costs") + ylab("instance")

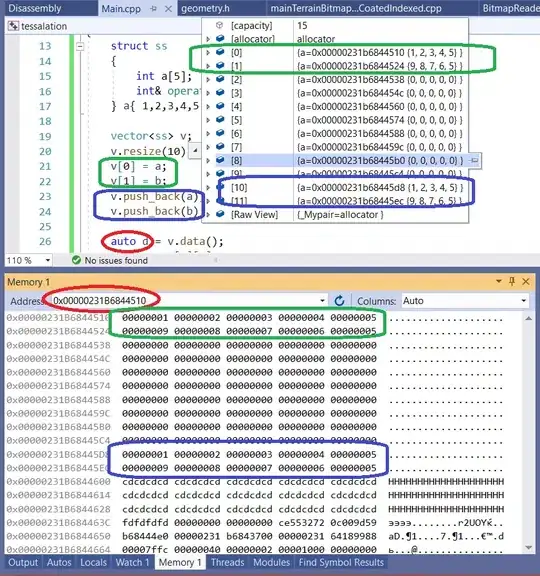

This is the result:

It is just the same as without coord_trans(x = 'log10').

However, if I use it with the y-axis:

How do I get logarithmic scaling on the x-axis? The problem does not seem to be tied to the x-axis itself: if I swap the values of x and y, the scaling works on the x-axis and no longer on the y-axis. So there seems to be some problem with the displayed values. Does anybody have an idea how to fix this?

Edit: Here is the data contained in solverEntries:

    solverEntries <- data.frame(instance = c(1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3, 4, 4, 4, 4, 5, 5, 5, 5, 6, 6, 6, 6, 7, 7, 7, 7, 8, 8, 8, 8, 9, 9, 9, 9, 10, 10, 10, 10, 11, 11, 11, 11, 12, 12, 12, 12, 13, 13, 13, 13, 14, 14, 14, 14, 15, 15, 15, 15, 16, 16, 16, 16, 17, 17, 17, 17, 18, 18, 18, 18, 19, 19, 19, 19, 20, 20, 20, 20),
                                solver = c(4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1, 4, 3, 2, 1),
                                time = c(1, 24, 13, 6, 1, 41, 15, 5, 1, 26, 16, 5, 1, 39, 7, 4, 1, 28, 11, 3, 1, 31, 12, 3, 1, 38, 20, 3, 1, 37, 10, 4, 1, 25, 11, 3, 1, 32, 18, 4, 1, 27, 21, 3, 1, 23, 22, 3, 1, 30, 17, 2, 1, 36, 8, 3, 1, 37, 19, 4, 1, 40, 21, 3, 1, 29, 11, 4, 1, 33, 10, 3, 1, 34, 9, 3, 1, 35, 14, 3),
                                val = c(6553.48, 6565.6, 6565.6, 6577.72, 6568.04, 7117.14, 6578.98, 6609.28, 6559.54, 6561.98, 6561.98, 6592.28, 6547.42, 7537.64, 6549.86, 6555.92, 6546.24, 6557.18, 6557.18, 6589.92, 6586.22, 6588.66, 6588.66, 6631.08, 6547.42, 7172.86, 6569.3, 6582.6, 6547.42, 6583.78, 6547.42, 6575.28, 6555.92, 6565.68, 6565.68, 6575.36, 6551.04, 6551.04, 6551.04, 6563.16, 6549.86, 6549.86, 6549.86, 6555.92, 6544.98, 6549.86, 6549.86, 6561.98, 6558.36, 6563.24, 6563.24, 6578.98, 6566.86, 7080.78, 6570.48, 6572.92, 6565.6, 7073.46, 6580.16, 6612.9, 6557.18, 7351.04, 6562.06, 6593.54, 6547.42, 6552.3, 6552.3, 6558.36, 6553.48, 6576.54, 6576.54, 6612.9, 6555.92, 6560.8, 6560.8, 6570.48, 6566.86, 6617.78, 6572.92, 6578.98))
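In case it helps with diagnosing this, here is a small sketch comparing how many decades (powers of ten) each column spans on a log10 scale. The extremes are copied by hand from the data above, and `decades` is a hypothetical helper name introduced only for this check. I have not confirmed that this explains the behaviour, but val appears to cover only a small fraction of a decade (roughly 0.06), while instance covers more than one, so a log transform of val may be nearly indistinguishable from a linear axis.

```r
# Extremes copied by hand from the val and instance columns above (assumption:
# these are the true minimum and maximum of each column).
val_range      <- c(6544.98, 7537.64)
instance_range <- c(1, 20)

# Hypothetical helper: width of a range in decades on a log10 axis.
decades <- function(r) diff(log10(r))

decades(val_range)       # span of val in decades
decades(instance_range)  # span of instance in decades
```

With the full data frame loaded, the same check could be run as `decades(range(solverEntries$val))`.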