I've read "Prometheus: how to handle counters on server" and I've been digging around on the web, but I still don't see a way to accomplish what I'm trying to do. Prometheus may not be the best tool for the job; I'm not sure.

Every day, we receive N request packets from customers. We've instrumented a counter that counts the number of packets. I can use `rate()` and `increase()`, which show change over time and are somewhat helpful, but we are really interested in the overall count, and we want to disregard restarts.

What I would like to see is a graph that starts at 0 and shows, over time, the number of responses that were seen. It should never go down, and it should account for counter resets.
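To make the behavior I'm after concrete, here is a minimal sketch in plain Python (not PromQL) of the series I'd like to graph. The function name and the sample values are made up for illustration, and it assumes that any drop in the raw value means the process restarted and the counter began again from 0.

```python
from typing import Iterable, List


def cumulative_without_resets(samples: Iterable[float]) -> List[float]:
    """Turn raw counter samples into a non-decreasing series that starts at 0.

    Assumption: a sample lower than the previous one means the counter
    was reset to 0 (e.g. a restart), so its whole value is new traffic.
    """
    totals: List[float] = []
    total = 0.0
    prev = None
    for value in samples:
        if prev is not None:
            if value >= prev:
                # Normal case: add the growth since the last sample.
                total += value - prev
            else:
                # Reset detected: everything counted since the restart is new.
                total += value
        totals.append(total)
        prev = value
    return totals


# Hypothetical raw samples with two restarts (40 -> 5 and 20 -> 3).
raw = [10, 25, 40, 5, 20, 3, 9]
print(cumulative_without_resets(raw))
# [0.0, 15.0, 30.0, 35.0, 50.0, 53.0, 59.0]
```

The raw series drops twice, but the derived series only ever goes up, which is the shape I want on the graph.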

I know the total that disregards resets is available somewhere, since "instant" queries seem able to return it. I have yet to find a query variant that lets me produce this graph, though.

TL;DR: I want to see the absolute count over time.

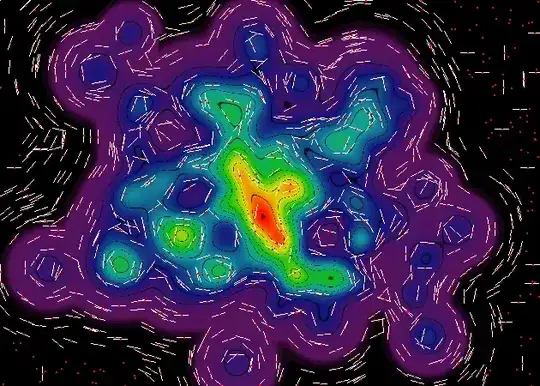

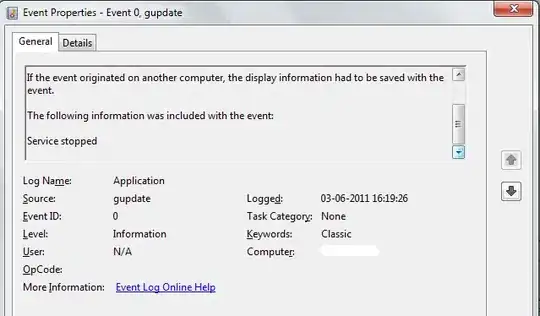

EDIT: Alin - when I try your solution over any time range, I see what I was seeing before:

Even a low resolution would be fine. I don't care much about precision; within ±100 is good enough. I just want to see the overall trend without these spikes and decreases.