I want to evaluate a logistic regression model (binary event) using two measures:

1. `model.score` and the confusion matrix, which give me a classification accuracy of 81%
2. The ROC curve (using AUC), which gives back a value of 50% (0.5)

Do these two results contradict each other? I may be missing something, but I still can't find it.

y_pred = log_model.predict(X_test)

accuracy_score(y_test, y_pred)

cm = confusion_matrix(y_test, y_pred)

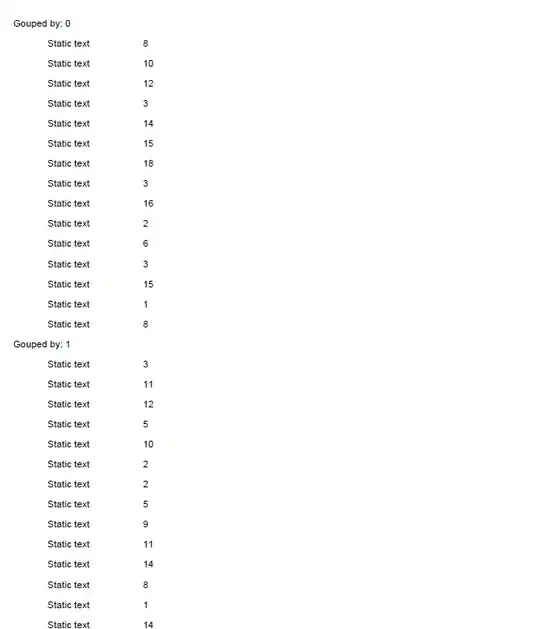

y_test.count()

print(cm)

fpr, tpr, _ = roc_curve(y_test, y_pred, drop_intermediate=False)

roc = roc_auc_score(y_test, y_pred)
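One possible way to see that 81% accuracy and an AUC of 0.5 are not necessarily in contradiction is the minimal sketch below. It assumes a hypothetical imbalanced test set (81 negatives, 19 positives) and made-up probability scores; none of these numbers come from the data above. It compares the AUC computed from hard 0/1 predictions, as in the calls above, with the AUC computed from continuous scores.

```python
import numpy as np
from sklearn.metrics import accuracy_score, roc_auc_score

# Hypothetical imbalanced test set (assumption): 81 negatives, 19 positives
y_true = np.array([0] * 81 + [1] * 19)

# Made-up predicted probabilities of the positive class (assumption):
# positives tend to score higher, but every score stays below 0.5
scores = np.concatenate([
    np.linspace(0.05, 0.35, 81),  # scores for the negatives
    np.linspace(0.20, 0.45, 19),  # scores for the positives
])

# Thresholding at 0.5 mimics predict(): every sample is labelled 0 here
y_hard = (scores >= 0.5).astype(int)

acc = accuracy_score(y_true, y_hard)          # share of the majority class
auc_hard = roc_auc_score(y_true, y_hard)      # AUC from hard labels
auc_scores = roc_auc_score(y_true, scores)    # AUC from continuous scores

print(f"accuracy: {acc:.2f}")
print(f"AUC from hard labels: {auc_hard:.2f}")
print(f"AUC from scores: {auc_scores:.2f}")
```

If something similar is happening here, passing `log_model.predict_proba(X_test)[:, 1]` to `roc_curve` and `roc_auc_score` instead of `y_pred` should give a more informative AUC. Checking whether the confusion matrix has an empty predicted-positive column would help confirm it.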