I'm trying to import the Excel file below, which is stored in Azure Blob Storage, into SQL Server.

EXCEL File

Query

    SELECT *
    FROM OPENROWSET(
        BULK 'container/testfile.xlsx',
        DATA_SOURCE = 'ExternalSrcImport',
        FORMATFILE = 'container/test.fmt',
        FORMATFILE_DATA_SOURCE = 'ExternalSrcImport',
        CODEPAGE = 1252,
        FIRSTROW = 1
    ) AS data

Format file

    10.0
    4
    1   SQLCHAR   0   7     "\t"     1   DepartmentID   ""
    2   SQLCHAR   0   100   "\t"     2   Name           SQL_Latin1_General_CP1_CI_AS
    3   SQLCHAR   0   100   "\t"     3   GroupName      SQL_Latin1_General_CP1_CI_AS
    4   SQLCHAR   0   24    "\r\n"   4   ModifiedDate   ""

Illustration of Format File

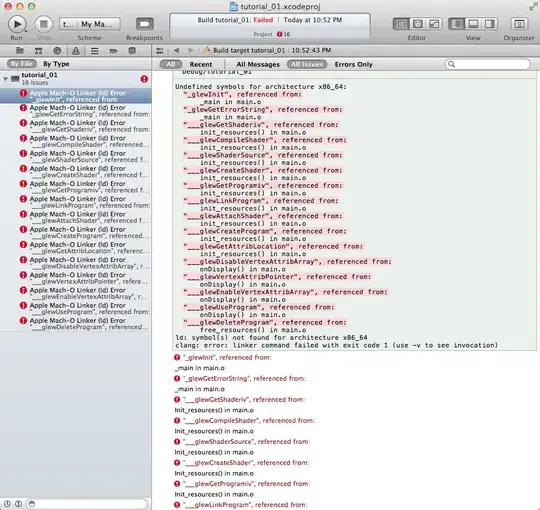

When I execute the query, I get the following error:

    Msg 4863, Level 16, State 1, Line 210
    Bulk load data conversion error (truncation) for row 1, column 1 (DepartmentID).

It looks like the field terminator in the format file is not working. Any ideas on how to import the file?
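
One check that might help narrow this down is to read the first few bytes of the file as raw binary instead of parsing it with the format file. This is only a minimal sketch: it reuses the same path and data source as the query above, and the names `first_bytes` and `raw_file` are placeholders of mine. An .xlsx workbook is a ZIP container, so its first bytes are normally the ZIP signature `0x504B0304` ("PK") rather than text. If that is what comes back, the file is not tab-delimited text, so the "\t" terminator would never be found and column 1 would overflow its 7-byte length.

```sql
-- Read the whole blob as a single VARBINARY(MAX) value and look at its first 4 bytes.
-- Assumes the same external data source as the failing query.
SELECT SUBSTRING(BulkColumn, 1, 4) AS first_bytes  -- 0x504B0304 would indicate a ZIP/.xlsx container
FROM OPENROWSET(
    BULK 'container/testfile.xlsx',
    DATA_SOURCE = 'ExternalSrcImport',
    SINGLE_BLOB
) AS raw_file;
```

If the result shows plain text bytes instead (for example digits followed by `0x09` for a tab), then the file really is delimited text and the terminators or field lengths in the format file would be the next thing to look at.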