I've computed a proximity matrix for my ~1000 data points using Random Forest, but when I visualize this matrix with sklearn's MDS, the results are quite strange and difficult to reason about.

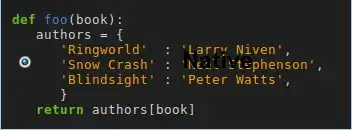

The code I used to process my data is below:

    from io import StringIO

    import pandas as pd
    import requests

    data_url = "https://raw.githubusercontent.com/ychennay/ychennay.github.io/master/KAG_conversion_data.csv"
    TARGET = ["Approved_Conversion"]
    # COLUMNS_TO_DROP (a list of column names) is defined elsewhere and not shown here

    # read data into memory, keep the ad ids, then drop unused columns
    data_string = requests.get(data_url).content
    conversions_df = pd.read_csv(StringIO(data_string.decode("utf-8")))
    ad_ids = conversions_df["ad_id"].tolist()
    conversions_df = conversions_df.drop(columns=COLUMNS_TO_DROP)
    conversions_df["bias"] = 1  # add a bias/intercept column

    # convert columns into categorical data types
    convert_dict = {"gender": "category", "interest": "category", "age": "category"}
    conversions_df = conversions_df.astype(convert_dict)

    # get dummy features for categorical variables
    dummified_data = pd.get_dummies(conversions_df, drop_first=True)

    # define the target and the features
    y = dummified_data[TARGET].values.reshape(-1)
    X = dummified_data.loc[:, ~dummified_data.columns.isin(TARGET)]

After this preprocessing, I fit a RandomForestRegressor with `Approved_Conversion` as the target:

    import numpy as np
    from sklearn.ensemble import RandomForestRegressor

    B = 500  # number of trees
    rf = RandomForestRegressor(n_estimators=B)
    rf.fit(X, y)

    # leaf index of every sample in every tree, shape (n_samples, B)
    final_positions = rf.apply(X)

    proximity_matrix = np.zeros((len(X), len(X)))

    # adapted from the implementation found here:
    # https://stackoverflow.com/questions/18703136/proximity-matrix-in-sklearn-ensemble-randomforestclassifier
    for tree_idx in range(B):
        # add 1 for every pair of samples that land in the same leaf of this tree
        proximity_matrix += np.equal.outer(final_positions[:, tree_idx],
                                           final_positions[:, tree_idx]).astype(float)

    # divide by the number of estimators
    proximity_matrix /= B

    distance_matrix = 1 - proximity_matrix
    distance_matrix = pd.DataFrame(distance_matrix, columns=ad_ids, index=ad_ids)

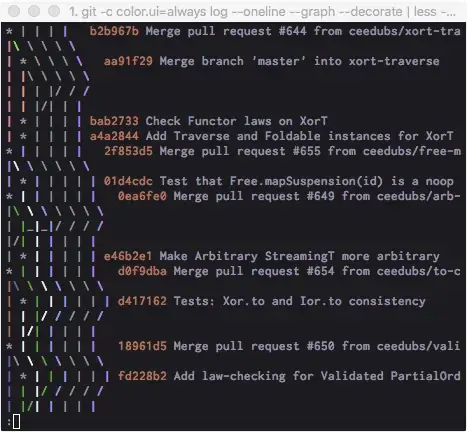
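The proximity computation above can also be checked on a small, self-contained example. The following is only a sketch: the synthetic data from `make_regression`, the smaller forest, and the names `X_toy`, `y_toy`, `rf_toy`, `leaves`, `proximity`, and `distance` are assumptions made for illustration, not part of the actual pipeline. It uses NumPy broadcasting in place of the per-tree loop, which computes the same quantity but holds an `(n_samples, n_samples, n_trees)` boolean array in memory.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# synthetic stand-in data (assumption: this is not the ad dataset)
X_toy, y_toy = make_regression(n_samples=50, n_features=5, random_state=0)

n_trees = 20
rf_toy = RandomForestRegressor(n_estimators=n_trees, random_state=0)
rf_toy.fit(X_toy, y_toy)

# leaf index of each sample in each tree, shape (n_samples, n_trees)
leaves = rf_toy.apply(X_toy)

# proximity[i, j] = fraction of trees in which samples i and j share a leaf
proximity = (leaves[:, None, :] == leaves[None, :, :]).mean(axis=2)
distance = 1.0 - proximity
```

By construction the proximity matrix should be symmetric with ones on the diagonal, which makes for a quick sanity check before passing `distance` to any embedding method.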

However, when I plot the MDS embedding, the result is perfectly round and not very informative. I expected to see some coherent clusters corresponding to groups of data points that are most similar to each other:

    from sklearn.manifold import MDS
    # from sklearn.decomposition import PCA

    mds = MDS(n_components=2, dissimilarity="precomputed")
    reduced_dimensions = mds.fit_transform(distance_matrix)

If I try using MDS with the proximity_matrix instead, it's more or less the same pattern:

I'm not very familiar with MDS, but I can't explain why this algorithm gives me the worst results when most of the articles I've found online recommend it for visualizing distance/similarity matrices.

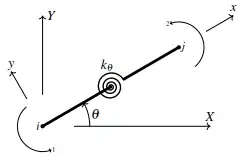

I've also validated that the actual results of the matrix make sense. For instance, when I get the most similar ads to a particular Facebook ad (the dataset is paid Facebook campaigns performance), I get results that do indeed make sense (the ad I inputted is highlighted, and the most similar results show up below):

Can anyone give me some pointers on what I might be doing wrong? If I reduce the dimensions using PCA instead, I get somewhat more "normal" results (at least the variance extends along both principal components):
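The PCA code is not shown above, so here is a minimal sketch of what such a comparison might look like. It assumes PCA is applied directly to the rows of the distance matrix, treating each row as a feature vector. To stay self-contained it uses a synthetic matrix: `points`, `toy_distance`, and `pca_dimensions` are hypothetical names standing in for the real `distance_matrix` and its projection.

```python
import numpy as np
from sklearn.decomposition import PCA

# synthetic stand-in for the real distance matrix (assumption for illustration)
rng = np.random.default_rng(0)
points = rng.normal(size=(30, 3))
toy_distance = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)

# treat each row of the distance matrix as a feature vector and project to 2D
pca = PCA(n_components=2)
pca_dimensions = pca.fit_transform(toy_distance)

# share of variance captured by each of the two components
print(pca.explained_variance_ratio_)
```

Note that this is a different operation from MDS with `dissimilarity="precomputed"`: PCA here sees the matrix as ordinary features rather than as pairwise distances to preserve, so the two plots are not directly comparable.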