I'm having trouble fitting an average curved line through my data in order to find its length. I have a lot of x, y points in a large pandas DataFrame; a sample looks something like this:

```python
import numpy as np

x = np.asarray([731501.13, 731430.24, 731360.29, 731289.36, 731909.72, 731827.89,
                731742.  , 731657.74, 731577.95, 731502.64, 731430.39, 731359.12,
                731287.3 , 731214.21, 732015.59, 731966.88, 731902.67, 731826.31,
                731743.79, 731660.94, 731581.29, 731505.4 , 731431.95, 732048.71,
                732026.66, 731995.46, 731952.18, 731894.29, 731823.58, 731745.16,
                732149.61, 732091.53, 732052.98, 732026.82, 732005.17, 731977.63,
                732691.84, 732596.62, 732499.45, 732401.62, 732306.18, 732218.35,
                732141.82, 732080.91, 732038.21, 732009.08, 733023.08, 732951.99,
                732873.32, 732787.51])

y = np.asarray([7873771.69, 7873705.34, 7873638.03, 7873571.73, 7874082.33,
                7874027.2 , 7873976.22, 7873923.58, 7873866.35, 7873804.53,
                7873739.58, 7873673.62, 7873608.23, 7873544.15, 7874286.21,
                7874197.15, 7874123.96, 7874063.21, 7874008.78, 7873954.69,
                7873897.31, 7873836.09, 7873772.38, 7874564.62, 7874448.23,
                7874341.23, 7874246.59, 7874166.93, 7874100.4 , 7874041.77,
                7874912.56, 7874833.09, 7874733.62, 7874621.43, 7874504.65,
                7874393.89, 7875225.26, 7875183.85, 7875144.42, 7875105.69,
                7875064.49, 7875015.5 , 7874954.94, 7874878.36, 7874783.13,
                7874674.  , 7875476.18, 7875410.05, 7875351.67, 7875300.61])
```
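Because the points aren't stored in order along the line, summing the Euclidean distance between consecutive points in their stored order overshoots the length. Here is a rough sketch of the distance part plus one way to order the points first: a greedy nearest-neighbour walk starting from the point farthest from the centroid. The ordering is a heuristic I'm assuming, not something known about this data. It presumes a single open curve, can zig-zag if the points form several parallel rows, and is O(n²). The helper names `polyline_length` and `order_points` are my own.

```python
import numpy as np

def polyline_length(x, y):
    """Sum of Euclidean distances between consecutive points, in the given order."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.sum(np.hypot(np.diff(x), np.diff(y))))

def order_points(x, y):
    """Greedy nearest-neighbour ordering (heuristic, assumes one open curve)."""
    pts = np.column_stack([x, y]).astype(float)
    n = len(pts)
    # Start at the point farthest from the centroid, assumed to be one end of the line
    start = int(np.argmax(np.linalg.norm(pts - pts.mean(axis=0), axis=1)))
    visited = np.zeros(n, dtype=bool)
    visited[start] = True
    order = [start]
    for _ in range(n - 1):
        # Distance from the current point to every point; skip already-visited ones
        d = np.linalg.norm(pts - pts[order[-1]], axis=1)
        d[visited] = np.inf
        nxt = int(np.argmin(d))
        visited[nxt] = True
        order.append(nxt)
    idx = np.asarray(order)
    return pts[idx, 0], pts[idx, 1]

# Toy check: five points on the segment (0, 0) -> (3, 4), stored out of order
xs = np.array([1.5, 0.0, 3.0, 0.6, 2.4])
ys = np.array([2.0, 0.0, 4.0, 0.8, 3.2])
print(polyline_length(xs, ys))   # stored order: about 14.5
ox, oy = order_points(xs, ys)
print(polyline_length(ox, oy))   # after ordering: about 5.0, the segment length
```

On the real arrays this would be `polyline_length(*order_points(x, y))`. I haven't checked that the nearest-neighbour walk gives a sensible path for this particular point pattern.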

The x and y values are map-view coordinates, and I want to calculate the length of the line they trace. I can code the Euclidean distance, but because the points are scattered and not stored one after another along the line, I'm having trouble fitting a moving line through them. I've tried `polyfit`, but it mostly produces a straight line even with a higher `deg`, e.g.:

```python
import numpy as np
import matplotlib.pyplot as plt

# Fit y as a degree-10 polynomial in x (x and y are the arrays above)
z = np.polyfit(x, y, 10)
p = np.poly1d(z)

# Raw points vs. the fitted values at the same x positions
plt.scatter(x, y, marker='x')
plt.scatter(x, p(x), marker='.')
plt.show()
```

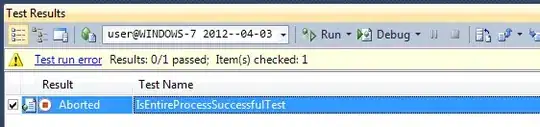

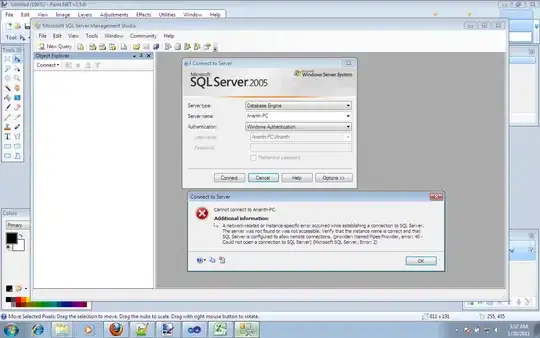

This is to demonstrate what I mean.
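For comparison with the `polyfit` attempt: fitting `y = p(x)` can't follow a line that doubles back in x, and a degree-10 fit on raw coordinates around 7×10⁵ is badly conditioned (NumPy may emit a `RankWarning`). A parametric fit avoids both problems. The sketch below assumes the points are already ordered along the line. It fits x(t) and y(t) separately against the cumulative chord length t with `numpy.polynomial.Polynomial.fit`, which maps t onto a scaled window internally, and then measures the length of the densely resampled curve. The function name `parametric_fit_length`, the degree, and the sample count are my own choices, not tuned for this data.

```python
import numpy as np
from numpy.polynomial import Polynomial

def parametric_fit_length(x, y, deg=3, n_samples=1000):
    """Fit x(t) and y(t) against cumulative chord length t and measure the fitted curve.

    Assumes x and y are already ordered along the line.
    Returns the resampled curve (xs, ys) and its polyline length.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Parameter t: cumulative distance along the ordered points, starting at 0
    t = np.concatenate([[0.0], np.cumsum(np.hypot(np.diff(x), np.diff(y)))])
    # Polynomial.fit maps t from its data range onto the window [-1, 1], which keeps the fit well conditioned
    px = Polynomial.fit(t, x, deg)
    py = Polynomial.fit(t, y, deg)
    # Resample the fitted curve densely and sum the segment lengths
    tt = np.linspace(t[0], t[-1], n_samples)
    xs, ys = px(tt), py(tt)
    length = float(np.sum(np.hypot(np.diff(xs), np.diff(ys))))
    return xs, ys, length

# Toy check: 20 ordered points on a quarter circle of radius 100 (true length 50*pi, about 157.08)
theta = np.linspace(0.0, np.pi / 2, 20)
cx, cy = 100 * np.cos(theta), 100 * np.sin(theta)
fx, fy, length = parametric_fit_length(cx, cy, deg=5)
print(length)
```

On the real data, `deg` would need tuning and the result is only as good as the point ordering. A smoothing spline (`scipy.interpolate.splprep` with its smoothing factor `s`) would be the usual alternative to a fixed-degree polynomial.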

Any help would be greatly appreciated!