Here is my question; I hope someone can help me figure it out.

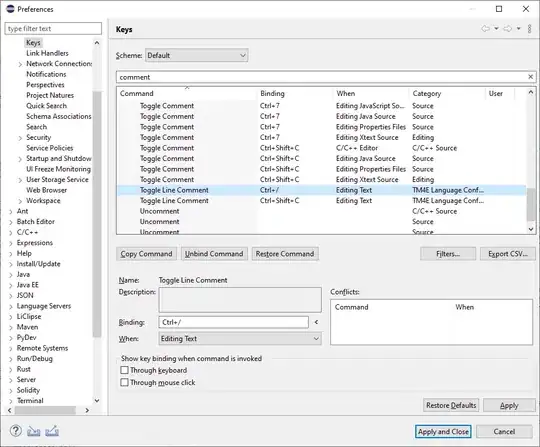

To explain: my data set has more than 10 categorical columns, and each of them has 200-300 categories. I want to convert them into binary (one-hot) columns. To do that, I first used LabelEncoder to convert the string categories into numbers. The LabelEncoder code and its output are shown below.

After LabelEncoder, I applied scikit-learn's OneHotEncoder, and it worked. The problem is that I need column names after one-hot encoding. For example, take column A, which holds categorical values before encoding: A = [1, 2, 3, 4, ...]

After encoding, the columns should be named like this:

A-1, A-2, A-3
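To make the target naming concrete, here is a minimal sketch using pandas `get_dummies` rather than OneHotEncoder. The data frame and its values are made up for illustration; only the column name `A` and the `A-1, A-2, A-3` pattern come from the example above.

```python
import pandas as pd

# Hypothetical toy data: column A already holds label-encoded integers.
df = pd.DataFrame({"A": [1, 2, 3, 2]})

# columns=["A"] forces encoding of the integer column;
# prefix_sep="-" joins the old column name and the category value.
encoded = pd.get_dummies(df, columns=["A"], prefix_sep="-")

print(list(encoded.columns))
```

This produces the names I am after, but it does not remember the category set between calls, which matters for the incremental case described at the end of this post.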

Does anyone know how to name the columns after one-hot encoding, in the form "old column name - category value (or number)"? Here is my one-hot encoding code and its output:

I need named columns because I trained an ANN, and every time new data arrives I cannot re-encode all of the past data again and again. Instead, I want to encode and append only the new rows each time. Thanks anyway.
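The workflow I have in mind could look roughly like the sketch below: fit the encoder once, keep it, and reuse it on each new batch so the columns stay in the same order. This is only an illustration under assumptions, not my actual code: the frames, the column names `A` and `B`, and the helper `encode` are hypothetical, and `handle_unknown="ignore"` is one possible choice that encodes unseen categories as all zeros.

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

# Hypothetical past data; OneHotEncoder accepts string categories directly.
feature_columns = ["A", "B"]
train = pd.DataFrame({"A": ["x", "y", "z"], "B": ["p", "q", "p"]})

# Fit once on the past data. Unknown categories in later batches
# become all-zero rows for that column instead of raising an error.
encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(train[feature_columns])

def encode(frame):
    """Encode a batch with the already-fitted encoder and name the columns."""
    matrix = encoder.transform(frame[feature_columns]).toarray()
    # categories_ holds the sorted categories learned for each input column.
    names = [
        f"{col}-{cat}"
        for col, cats in zip(feature_columns, encoder.categories_)
        for cat in cats
    ]
    return pd.DataFrame(matrix, columns=names, index=frame.index)

# A new batch: "w" was never seen in column A during fitting.
new_rows = pd.DataFrame({"A": ["y", "w"], "B": ["q", "p"]})
encoded_new = encode(new_rows)
print(encoded_new)
```

Saving the fitted encoder (for example with `joblib.dump`) would let each new batch be encoded without touching the past data.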