Your code example is not written with async in mind. You don't need `Parallel.ForEach` at all. `HttpClient.GetAsync` is already asynchronous, so there is no point wrapping it in CPU-bound tasks.

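```csharp
// Fragment; assumes using System, System.Collections.Generic,
// System.Net.Http and System.Threading.Tasks.

// One shared instance for the lifetime of the application.
private static readonly HttpClient _httpClient = new HttpClient();

var tasks = new List<Task>();

foreach (var url in urls)
{
    // Start each request without awaiting it, so they all run concurrently.
    tasks.Add(DoWork(url));
}

try
{
    // If any task faulted, this rethrows only the first exception...
    await Task.WhenAll(tasks);
}
catch
{
    // ...so inspect every task to report all of the failures.
    foreach (var task in tasks)
    {
        if (task.Exception != null)
            Console.WriteLine(task.Exception.Message);
    }
}

public async Task DoWork(string url)
{
    // GetStringAsync returns the body; GetAsync returns an HttpResponseMessage.
    var json = await _httpClient.GetStringAsync(url);

    // do something with json
}
```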

While `Parallel.ForEach()` is a more efficient alternative to looping and calling `Task.Run()`, it should really only be used for CPU-bound work (see *Task.Run Etiquette and Proper Usage*). Calling a URL is not CPU-bound work; it's I/O-bound work (more technically, I/O completion port work).

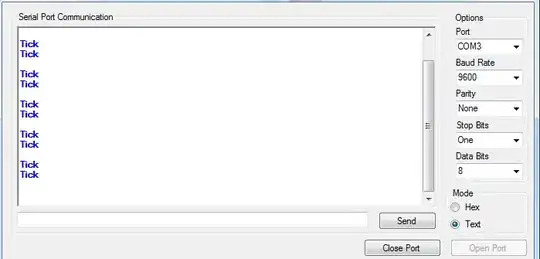

Related reading: *You're Using HttpClient Wrong and It Is Destabilizing Your Software*

Thanks for the HttpClient link. Wow. The fix seems like such an anti-pattern.

While it may seem like an anti-pattern, that's because the recommended solution actually is one. `HttpClient` uses external resources to do its job, so it should be disposed (it implements `IDisposable`), yet at the same time it should be used as a singleton. That is the problem: there is no clean way to dispose of a singleton held in a static property or field. However, since Microsoft wrote it and documents reusing a single instance, we don't need to insist on disposing every object we create when the documentation says otherwise.

Since you point out that neither Parallel.ForEach nor Task.Run is suited for HttpClient work because it's I/O bound, what would you recommend?

Use `async`/`await`.

I've added the "million" part to the question.

Then you need to limit the number of tasks running at once, like so:

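```csharp
// Fragment; also needs using System.Linq for Count/Where.
// Tune the limit; a value of 1 would run the requests one at a time.
var maximumNumberOfParallelOperations = 10;
var tasks = new List<Task>();

foreach (var url in urls)
{
    tasks.Add(DoWork(url));

    // At the limit: wait for a still-running task to finish before starting another.
    // Passing only incomplete tasks avoids a busy loop, since WhenAny returns
    // immediately if the list already contains a completed task.
    while (tasks.Count(t => !t.IsCompleted) >= maximumNumberOfParallelOperations)
    {
        await Task.WhenAny(tasks.Where(t => !t.IsCompleted));
    }
}

// Wait for the remaining tasks (rethrows if any of them failed).
await Task.WhenAll(tasks);
```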
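An alternative worth considering (a different technique from the loop above) is to gate the requests with a `SemaphoreSlim`, which avoids rescanning the whole task list on every iteration. The helper below is a minimal sketch, not a definitive implementation: the class name `Throttle`, the method `ForEachThrottledAsync` and its parameters are hypothetical, and `work` stands in for something like `DoWork`. On .NET 6 and later, `Parallel.ForEachAsync` with `ParallelOptions.MaxDegreeOfParallelism` offers similar built-in throttling for async work.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper for illustration; not part of any library.
public static class Throttle
{
    // Runs `work` for every item, with at most `maxConcurrency` calls in flight.
    public static async Task ForEachThrottledAsync<T>(
        IEnumerable<T> items, int maxConcurrency, Func<T, Task> work)
    {
        // Not disposed: we never use AvailableWaitHandle, and running tasks
        // still call Release() after this method might exit early.
        var gate = new SemaphoreSlim(maxConcurrency, maxConcurrency);

        // Every task is kept so WhenAll can observe failures; for very large
        // inputs you may want to prune completed tasks periodically.
        var running = new List<Task>();

        foreach (var item in items)
        {
            // Wait asynchronously for a free slot before starting the next call.
            await gate.WaitAsync();
            running.Add(RunAndReleaseAsync(item));
        }

        // Wait for the remaining calls; rethrows if any of them failed.
        await Task.WhenAll(running);

        async Task RunAndReleaseAsync(T item)
        {
            try
            {
                await work(item);
            }
            finally
            {
                // Free the slot whether the call succeeded or failed.
                gate.Release();
            }
        }
    }
}
```

Usage, assuming `urls` and `DoWork` from the answer: `await Throttle.ForEachThrottledAsync(urls, 10, DoWork);`. The semaphore keeps only a counter of free slots, so each new call waits in O(1) instead of counting incomplete tasks across the whole list.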