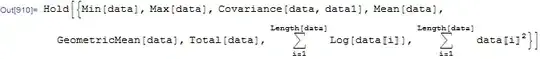

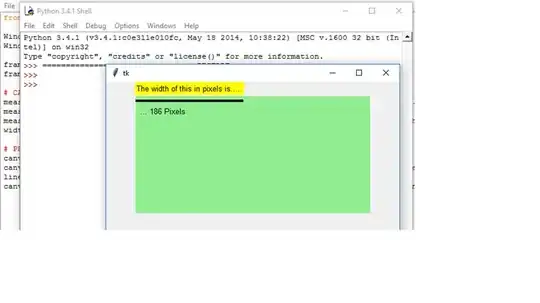

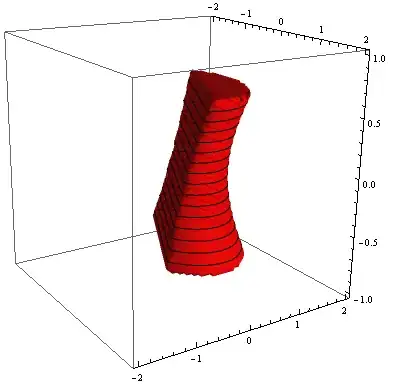

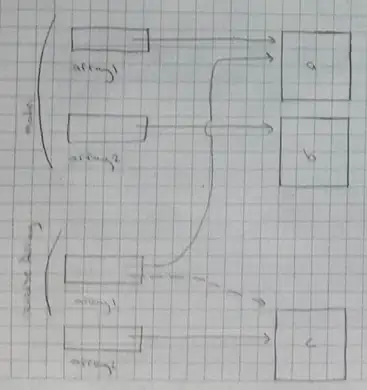

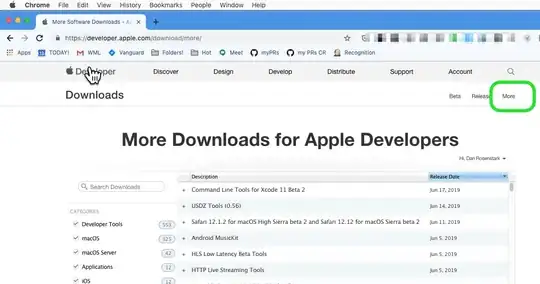

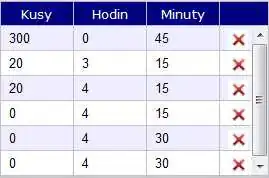

I have a JPEG from which I want to crop the portion containing a graph (the one at the bottom).

So far I have used this code to do that:

```python
from PIL import Image

img = Image.open(r'D:\aakash\graph2.jpg')

# Crop box as (left, upper, right, lower), in pixels
area = (20, 320, 1040, 590)

img2 = img.crop(area)

# img.show()
img2.show()
```

But I only arrived at these values by guessing x1, y1, x2, y2 over and over (trial and error).
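For reference, the `(x1, y1, x2, y2)` tuple that `Image.crop` takes is `(left, upper, right, lower)` in pixels, measured from the top-left corner, and the result is `x2 - x1` pixels wide and `y2 - y1` pixels tall. A quick check on a blank stand-in image (its 1100x600 size is an arbitrary assumption, chosen only so the box fits):

```python
from PIL import Image

# Blank stand-in image; the size is arbitrary, just large enough for the box.
img = Image.new("RGB", (1100, 600), "white")

area = (20, 320, 1040, 590)  # (left, upper, right, lower)
img2 = img.crop(area)

print(img2.size)  # (1020, 270): width = 1040 - 20, height = 590 - 320
```

So reading the four coordinates off an image viewer that shows the cursor position (top-left and bottom-right corners of the graph) gives the box directly, without guessing.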

I'm a complete novice at cropping images programmatically. How can I crop every graph into a separate image, given that the positions are always the same?

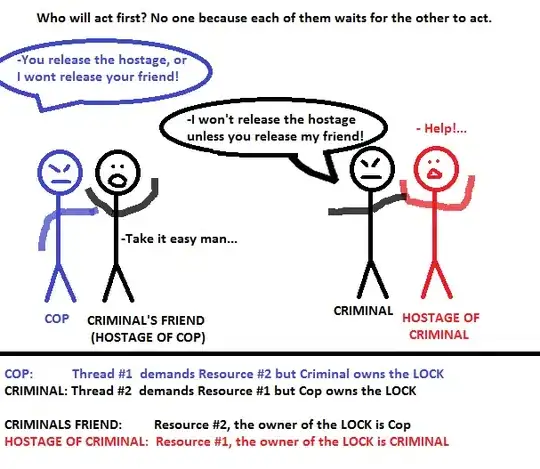
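If the graphs really are at the same pixel positions in every file, one simple option is to measure each box once and reuse the list for every image. A minimal sketch under that assumption: only the first box comes from the code above; the second box and the helper names `crop_all` and `save_crops` are hypothetical placeholders.

```python
from PIL import Image

# Boxes are (left, upper, right, lower) in pixels. Only the first comes from
# the question; the second is a hypothetical placeholder for another graph.
BOXES = [
    (20, 320, 1040, 590),
    (20, 20, 1040, 300),  # hypothetical
]


def crop_all(img, boxes):
    """Return one cropped copy of img per box."""
    return [img.crop(box) for box in boxes]


def save_crops(path, boxes, prefix):
    """Open path, crop every box, and save each crop as <prefix>_<n>.png."""
    with Image.open(path) as img:
        for n, part in enumerate(crop_all(img, boxes)):
            part.save(f"{prefix}_{n}.png")
```

For example, `save_crops(r'D:\aakash\graph2.jpg', BOXES, 'graph2')` would write `graph2_0.png` and `graph2_1.png`; looping over several files with the same layout only changes the `path` and `prefix` arguments.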

Update: I don't believe this is a duplicate of that question. Although the problem is logically the same, the clustering logic would work differently: that question has only two vertical white lines to split on, whereas here there are two horizontal and two vertical lines, and I have little idea how to use KMeans for this kind of image clustering.
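Since the separators are white bands, one possible alternative to KMeans is to look for rows and columns that are entirely white and treat each run of non-white rows (or columns) as one band of graphs. A minimal NumPy sketch, assuming a light background, dark plot content laid out on a regular grid, and a hypothetical whiteness threshold of 250; `content_spans` and `graph_boxes` are hypothetical helper names. Every (row span, column span) pair becomes one crop box.

```python
import numpy as np


def content_spans(is_blank):
    """Return (start, stop) pairs for each run of False values in a 1-D bool array."""
    spans = []
    start = None
    for i, blank in enumerate(is_blank):
        if not blank and start is None:
            start = i  # a content run begins
        elif blank and start is not None:
            spans.append((start, i))  # run ends; stop index is exclusive
            start = None
    if start is not None:
        spans.append((start, len(is_blank)))
    return spans


def graph_boxes(gray, threshold=250):
    """Split a grayscale array into crop boxes separated by all-white rows/columns.

    threshold is a hypothetical cut-off: pixels >= threshold count as white.
    Returns (left, upper, right, lower) tuples usable with PIL's Image.crop.
    """
    white = gray >= threshold
    blank_rows = white.all(axis=1)  # True where a whole row is white
    blank_cols = white.all(axis=0)  # True where a whole column is white
    boxes = []
    for top, bottom in content_spans(blank_rows):
        for left, right in content_spans(blank_cols):
            boxes.append((left, top, right, bottom))
    return boxes
```

With Pillow, `np.asarray(img.convert("L"))` gives the grayscale array, and each returned box can be passed straight to `img.crop`. One caveat: a fully white row inside a graph (for example between a title and the plot) would split that graph, so in practice you may need to ignore blank runs shorter than some minimum gap.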

Help from anyone experienced with sklearn's KMeans on this kind of problem would be much appreciated.
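If you do want to use KMeans, one way to frame it is as 1-D clustering: collect the indices of all-white rows (or columns) and let `sklearn.cluster.KMeans` group them into as many clusters as there are separator lines, so each cluster centre approximates the middle of one white band. A sketch under these assumptions: `separator_positions` is a hypothetical helper, the number of separators per axis (two in your case) is known in advance, the whiteness threshold of 250 is a guess, and white outer margins are excluded by only keeping blank lines between the first and last lines with content.

```python
import numpy as np
from sklearn.cluster import KMeans


def separator_positions(gray, n_separators, axis=0, threshold=250):
    """Estimate the centre of each white separator band along one axis.

    axis=0 looks for horizontal separators (blank rows),
    axis=1 for vertical separators (blank columns).
    """
    white = gray >= threshold
    # A row is blank if all its pixels are white (reduce over columns), and vice versa.
    blank = white.all(axis=1 - axis)
    content = np.flatnonzero(~blank)
    idx = np.flatnonzero(blank)
    # Ignore the outer white margins: keep only blank lines strictly between
    # the first and last lines that contain content.
    idx = idx[(idx > content.min()) & (idx < content.max())]
    km = KMeans(n_clusters=n_separators, n_init=10, random_state=0)
    km.fit(idx.reshape(-1, 1).astype(float))
    return sorted(int(round(c)) for c in km.cluster_centers_.ravel())
```

Cutting the image at the returned row and column positions (plus the image edges) gives the grid cells to pass to `Image.crop`. For well-separated bands this gives the same result as simply grouping consecutive blank indices; KMeans mainly helps if the bands are noisy or broken into fragments.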