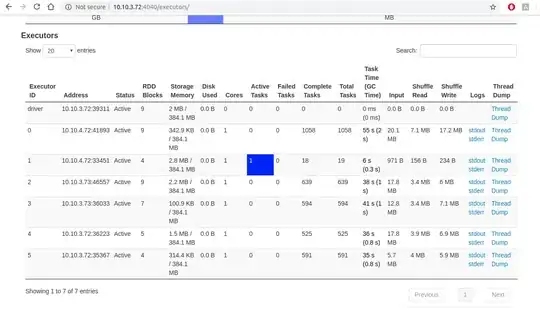

I am using Spark Streaming 2.2.1 in production. The application reads data from RabbitMQ, processes it, and finally saves it to Cassandra. I am facing a strange issue: tasks are not evenly distributed among the executors on one of the nodes. I restarted the streaming application, but the issue persists.

As you can see, 10.10.4.72 has 2 executors. The one running on port 41893 has completed roughly twice as many tasks as the executors on the other nodes (10.10.3.73 and 10.10.3.72). The executor running on port 33451 on 10.10.4.72 has completed only 18 tasks. The issue persists even after I restart my Spark Streaming application.

Edit: after 12 hours, as you can see in the image below, the same executor still has not processed a single task in that time.