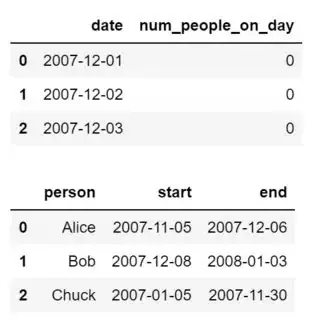

Say we have a dataframe df with a list of dates in chronological order by day.

The goal is to obtain the number of people on a given day that had a date range that included the given date.

df = pd.DataFrame(data={'date': [datetime.date(2007, 12, 1),

datetime.date(2007, 12, 2),

datetime.date(2007, 12, 3)],

'num_people_on_day': [0,0,0]})

dg = pd.DataFrame(data={'person': ['Alice', 'Bob', 'Chuck'],

'start': [datetime.date(2007, 11, 5),

datetime.date(2007, 12, 8),

datetime.date(2007, 1, 5)],

'end': [datetime.date(2007, 12, 6),

datetime.date(2008, 1, 3),

datetime.date(2007, 11, 30)]})

So for each date in df, how can I check all of dg efficiently and then count the number returned and put it into df.

I am not even sure if a merge is necessary here (also trying to save memory), and am really trying to write it to be as fast as possible.

EDIT: Ok so I have come up with a different way to do this, but I hate using apply. Is there a way to do this new way without using .apply?

import pandas as pd

import datetime

df = pd.DataFrame(data={'date': [datetime.date(2007, 12, 1),

datetime.date(2007, 12, 2),

datetime.date(2007, 12, 3)]})

dg = pd.DataFrame(data={'person': ['Alice', 'Bob', 'Chuck', 'Dave'],

'start': [datetime.date(2007, 11, 5),

datetime.date(2007, 12, 8),

datetime.date(2007, 1, 5),

datetime.date(2007, 11, 6)],

'end': [datetime.date(2007, 12, 1),

datetime.date(2008, 1, 3),

datetime.date(2007, 11, 30),

datetime.date(2007, 12, 2)]})

def get_num_persons(date, vec_starts, vec_ends):

"""

Helper function for .apply to get number of persons.

For each given date, if start and end date is

between the given date, then both results are True.

The bitwise AND then only sums these True and True values.

"""

return (((vec_starts <= date) & (vec_ends >= date)).sum())

def num_of_persons(persons, dates_df):

"""

Obtains the number of persons for each day.

"""

dates_df['num_persons'] = dates_df['date'].apply(lambda row:

get_num_persons(row,

persons['start'],

persons['end']))

return dates_df

num_of_persons(dg, df.copy())