I suspect the culprit is Excel, which reads the UTF-8 encoded CSV as ANSI (the system's default Windows code page). This happens when you simply open the CSV in Excel without using the Text Import Wizard. In that case Excel assumes ANSI unless there is a BOM at the beginning of the file. If you open the CSV in a text editor that supports Unicode, everything is displayed correctly.
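To illustrate the kind of garbling this produces, here is a minimal sketch that encodes a string as UTF-8 and then decodes the same bytes as windows-1252. The class name `MojibakeSketch` and the method `misreadAsAnsi` are made up for this illustration, and windows-1252 is only an assumption about what "ANSI" means on a Western European Windows system; other locales use other code pages.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class MojibakeSketch {

    // Encodes the text as UTF-8, then decodes those bytes as windows-1252.
    // This mimics a reader that assumes "ANSI" (assumed here to be windows-1252).
    static String misreadAsAnsi(String text) {
        byte[] utf8 = text.getBytes(StandardCharsets.UTF_8);
        return new String(utf8, Charset.forName("windows-1252"));
    }

    public static void main(String[] args) {
        // "Ê" (U+00CA) is the two bytes C3 8A in UTF-8; windows-1252 maps
        // each of those bytes to a separate character, so the text gets longer.
        System.out.println(misreadAsAnsi("Être un membre clé"));
    }
}
```

Every non-ASCII character takes two or more bytes in UTF-8, so each one shows up as two or more unrelated characters when the bytes are read with a single-byte code page.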

Example:

    import java.io.BufferedWriter;
    import java.nio.file.Path;
    import java.nio.file.Paths;
    import java.nio.file.Files;
    import java.util.Locale;
    import java.util.List;
    import java.util.ArrayList;

    import com.opencsv.CSVWriter;

    class DocxToCSV {

        public static void main(String[] args) throws Exception {

            Locale.setDefault(Locale.FRENCH);

            List<String[]> data = new ArrayList<String[]>();
            data.add(new String[]{"F1", "F2", "F3", "F4"});
            data.add(new String[]{"Être un membre clé", "Être clé", "membre clé"});
            data.add(new String[]{"Être", "un", "membre", "clé"});

            Path path = Paths.get("test.csv");

            // Files.newBufferedWriter(path) encodes as UTF-8 by default
            BufferedWriter bw = Files.newBufferedWriter(path);

            //bw.write(0xFEFF); bw.flush(); // write a BOM to the file

            // separator ';', quote char '"', escape char '"', line end CRLF
            CSVWriter writer = new CSVWriter(bw, ';', '"', '"', "\r\n");

            writer.writeAll(data);
            writer.flush();
            writer.close(); // also closes the underlying BufferedWriter
        }
    }

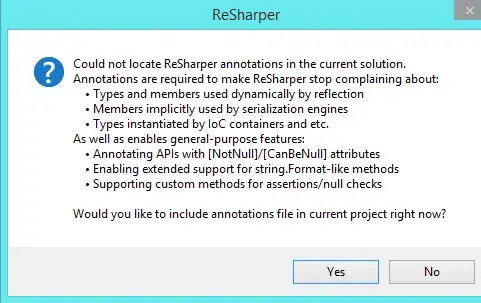

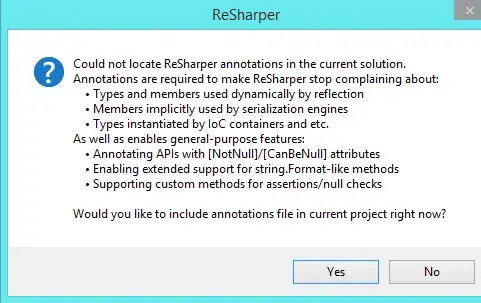

Now if you open the test.csv using a text editor which supports Unicode, all will be correct. But if you open the same file using Excel it looks like:

Now we do the same, but with the line

    bw.write(0xFEFF); bw.flush(); // write a BOM to the file

uncommented (active).

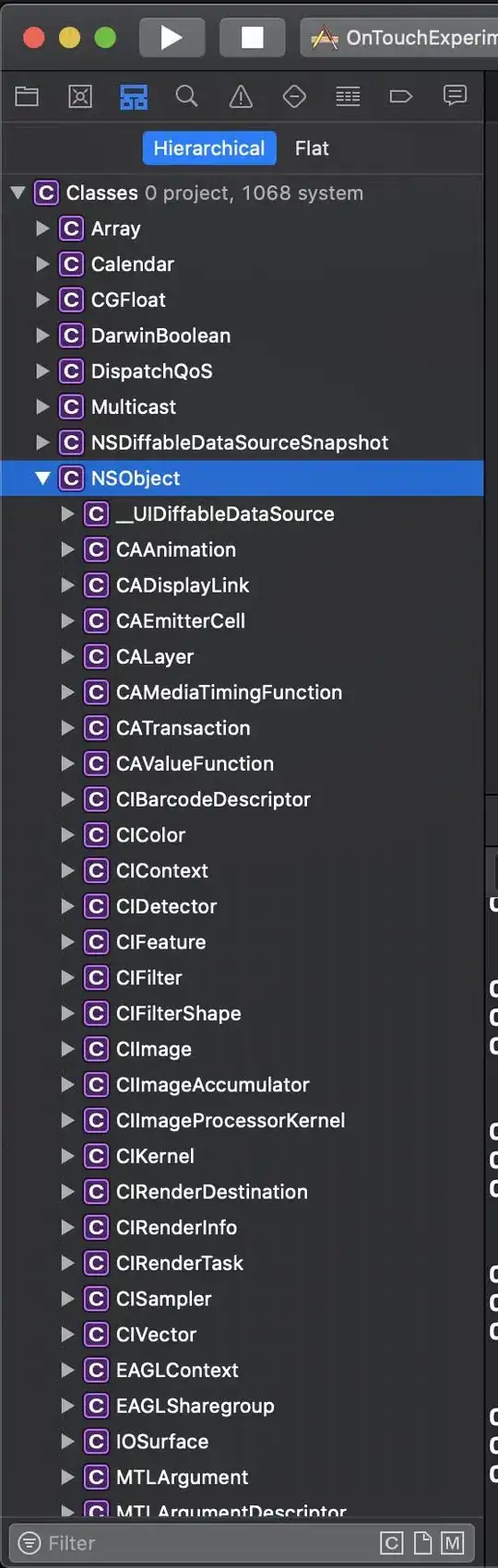

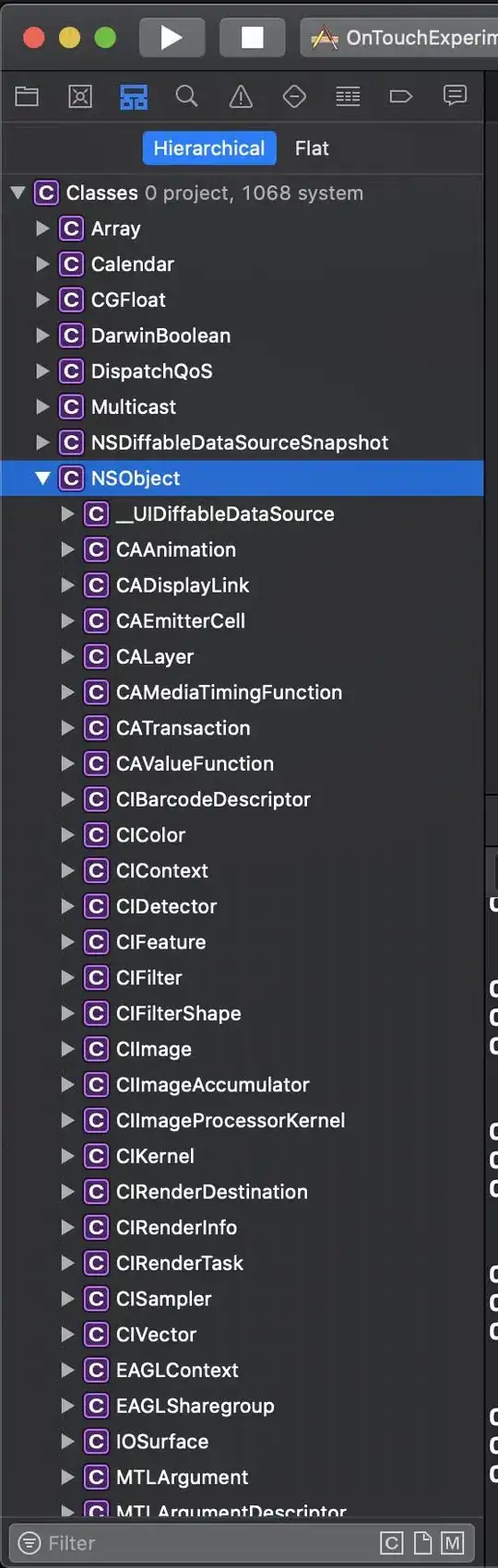

This results in Excel like this when test.csv is simply opened by Excel:
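If you want to check that the BOM really ends up in the file, something like the following sketch might help. It uses only the JDK so that it runs on its own: the manual `String.join` is a simplified stand-in for opencsv's `CSVWriter` and does no quoting or escaping, and the names `BomCsvSketch`, `writeWithBom` and `startsWithUtf8Bom` are hypothetical.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;

class BomCsvSketch {

    // Hypothetical helper: writes a BOM, then each row as a semicolon-separated line.
    static void writeWithBom(Path path, List<String[]> rows) throws IOException {
        try (BufferedWriter bw = Files.newBufferedWriter(path, StandardCharsets.UTF_8)) {
            bw.write('\uFEFF'); // U+FEFF is encoded as the bytes EF BB BF in UTF-8
            for (String[] row : rows) {
                bw.write(String.join(";", row)); // simplified: no quoting or escaping
                bw.write("\r\n");
            }
        }
    }

    // Returns true if the file starts with the UTF-8 BOM bytes EF BB BF.
    static boolean startsWithUtf8Bom(Path path) throws IOException {
        byte[] b = Files.readAllBytes(path);
        return b.length >= 3
                && (b[0] & 0xFF) == 0xEF
                && (b[1] & 0xFF) == 0xBB
                && (b[2] & 0xFF) == 0xBF;
    }

    public static void main(String[] args) throws IOException {
        Path path = Files.createTempFile("bom-test", ".csv");
        writeWithBom(path, Arrays.asList(
                new String[]{"F1", "F2"},
                new String[]{"Être", "clé"}));
        System.out.println(startsWithUtf8Bom(path));
    }
}
```

The try-with-resources block closes the writer even if writing fails, which the shorter example above leaves out for brevity.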

Of course, the better approach is always to use Excel's Text Import Wizard.

See also "Javascript export CSV encoding utf-8 issue" for the same problem.