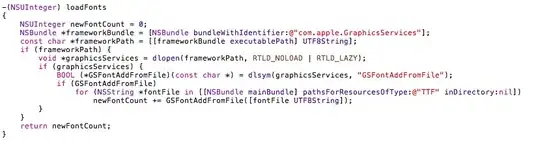

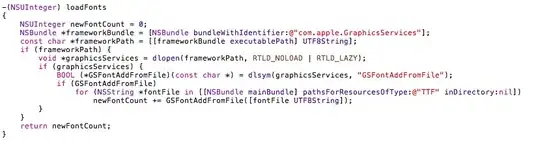

An IEEE 754 single-precision binary floating-point number has the following representation:

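Here the exponent is an 8-bit unsigned integer from 0 to 255, stored with a bias of 127. The actual exponent is the stored value minus 127, which ranges from −127 to 128. The two extreme stored values are reserved: 0 encodes zero and subnormal numbers, and 255 encodes infinities and NaNs. Normal numbers therefore have exponents from −126 to 127 and are decoded as: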

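$$
(-1)^{b_{31}} \times \left(1 + \sum_{i=1}^{23} b_{23-i}\, 2^{-i}\right) \times 2^{e-127}
$$

where $b_k$ is bit $k$ and $e$ is the stored exponent (bits 30 to 23). This form applies to normal numbers, i.e. $1 \le e \le 254$.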
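As a sanity check on the formula, here is a minimal C++ sketch that pulls the three fields out of a `float` and evaluates the sum term by term. It assumes `float` is IEEE 754 binary32 and handles normal numbers only; `Float32Fields`, `float_to_bits`, `split` and `decode_normal` are hypothetical names, not taken from the code in the question. It copies the bytes with `std::memcpy` instead of using a union.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>
#include <limits>

static_assert(std::numeric_limits<float>::is_iec559 && sizeof(float) == sizeof(std::uint32_t),
              "this sketch assumes float is IEEE 754 binary32");

// Hypothetical helper: the three bit fields of a single-precision float.
struct Float32Fields {
    std::uint32_t sign;      // b31
    std::uint32_t exponent;  // b30..b23, stored with a bias of 127 (0..255)
    std::uint32_t mantissa;  // b22..b0, the fraction without the implicit leading 1
};

// Copy the float's bytes into an integer (well-defined in both C and C++).
inline std::uint32_t float_to_bits(float f) {
    std::uint32_t u;
    std::memcpy(&u, &f, sizeof u);
    return u;
}

inline Float32Fields split(std::uint32_t bits) {
    return { bits >> 31, (bits >> 23) & 0xFFu, bits & 0x7FFFFFu };
}

// Evaluate (-1)^{b31} * (1 + sum_{i=1}^{23} b_{23-i} 2^{-i}) * 2^{e-127} term by term.
// Only valid for normal numbers (stored exponent 1..254).
inline double decode_normal(std::uint32_t bits) {
    const Float32Fields fields = split(bits);
    assert(fields.exponent != 0 && fields.exponent != 255 && "normal numbers only");
    double significand = 1.0;
    for (int i = 1; i <= 23; ++i) {
        const std::uint32_t b = (bits >> (23 - i)) & 1u;  // bit b_{23-i}
        significand += b * std::ldexp(1.0, -i);           // b_{23-i} * 2^{-i}
    }
    const double sign = fields.sign ? -1.0 : 1.0;         // (-1)^{b31}
    return sign * std::ldexp(significand, static_cast<int>(fields.exponent) - 127);  // * 2^{e-127}
}
```

For example, `decode_normal(0xC0200000u)` gives −2.5: the sign bit is 1, the stored exponent is 128 (so the factor is $2^1$), and only $b_{21}$ is set in the fraction, giving $1 + 2^{-2} = 1.25$.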

The line you mention uses the type `float32_bits`, which is defined as:

```cpp
union float32_bits {
    unsigned int u;
    float f;
};
```
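To see the shared storage in action, here is a small C++ sketch that writes a `float` into the union and reads back `u`, next to a `std::memcpy` version. The helper names are hypothetical. In C, reading `u` after writing `f` is well-defined; in standard C++ it is formally undefined behavior, although GCC documents it as a supported extension. `std::memcpy` (or C++20 `std::bit_cast`) is the portable route.

```cpp
#include <cassert>
#include <cstring>

// The union from the code above: u and f share the same 4 bytes.
union float32_bits {
    unsigned int u;
    float f;
};

// Write f, read u: the union-punning style used by the original code.
inline unsigned int bits_via_union(float value) {
    float32_bits fb;
    fb.f = value;
    return fb.u;
}

// Portable alternative: copy the object representation into an integer.
inline unsigned int bits_via_memcpy(float value) {
    static_assert(sizeof(unsigned int) == sizeof(float), "expects a 32-bit unsigned int");
    unsigned int u;
    std::memcpy(&u, &value, sizeof u);
    return u;
}

// Both views should report the same bit pattern for any input.
inline void check_views_agree(float value) {
    assert(bits_via_union(value) == bits_via_memcpy(value));
}
```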

Because `float32_bits` is a union, the integer `u` and the float `f` occupy the same memory. So when you see a line such as:

```cpp
const float32_bits f16max = { (127 + 16) << 23 };
```

you should read it as assigning a bit pattern to a float: brace-initializing a union sets its first member, here `u`, and `f` then reinterprets those same bits. From the formula above, 127 is simply the bias compensation, and the shift by 23 moves the value into the exponent field (bits 23 to 30) of the floating-point number.

So `f16max.f` holds the floating-point value $2^{16} = 65536$, while `f16max.u` holds the unsigned integer $143 \cdot 2^{23}$ (`0x47800000`).
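The same trick generalizes: with the sign and mantissa bits left at zero, writing a biased exponent $n + 127$ into bits 23 to 30 gives exactly $2^n$. Below is a minimal C++ sketch that reuses the union and initializer from the question; `pow2_via_bits` is a hypothetical helper, restricted to normal exponents (−126 to 127).

```cpp
#include <cassert>

// Same union as in the code above.
union float32_bits {
    unsigned int u;
    float f;
};

// The initializer from the original code: brace-initialization sets u, the first member.
const float32_bits f16max = { (127 + 16) << 23 };

// Hypothetical generalization: build 2^n for a normal exponent n by placing the
// biased exponent (n + 127) in bits 30..23; sign and mantissa stay zero.
inline float pow2_via_bits(int n) {
    assert(n >= -126 && n <= 127 && "normal exponents only");
    float32_bits fb = { static_cast<unsigned int>(n + 127) << 23 };
    return fb.f;  // reads the float view of the same bits, as the original code does
}
```

`pow2_via_bits(16)` reproduces `f16max.f`, and `pow2_via_bits(-126)` gives the smallest normal float, `FLT_MIN`.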


Image taken from Wikipedia: Single-precision floating-point format