I was reading the book 'Spark: The Definitive Guide'. It has an example like the one below.

    val myRange = spark.range(1000).toDF("number")
    val divisBy2 = myRange.where("number % 2 = 0")
    divisBy2.count()

The book describes these three lines of code as follows:

"we started a Spark job that runs our filter transformation (a narrow transformation), then an aggregation (a wide transformation) that performs the counts on a per partition basis, and then a collect, which brings our result to a native object in the respective language."
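To check my reading of the "per partition" part, here is a hand-rolled sketch of the same idea: count the rows inside each partition, then combine the partial counts on the driver. This is only my illustration, not how Spark implements count internally. The names `perPartition` and `total` are mine, and the `SparkSession` setup is only there so the snippet runs outside a notebook.

```scala
import org.apache.spark.sql.SparkSession

// In a Databricks notebook `spark` already exists; getOrCreate() reuses it.
val spark = SparkSession.builder()
  .appName("per-partition-count-sketch")
  .master("local[*]")
  .getOrCreate()

val myRange = spark.range(1000).toDF("number")
val divisBy2 = myRange.where("number % 2 = 0")

// Hand-rolled illustration (an assumption about what "per partition" means):
// each partition emits a single partial count.
val perPartition: Array[Long] =
  divisBy2.rdd.mapPartitions(rows => Iterator(rows.size.toLong)).collect()

// Combine the partial counts into the final result on the driver.
val total: Long = perPartition.sum

println(s"partial counts: ${perPartition.mkString(", ")}")
println(s"total: $total, count(): ${divisBy2.count()}")
```

If my understanding is right, `total` should match `divisBy2.count()`, and the step where the partial counts are brought together is where I would expect the wide part to come from.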

I know that count is an action, not a transformation, since it returns an actual value and I cannot call 'explain' on the return value of count.

But I was wondering why count causes a wide transformation. How can I see the execution plan for count in this case, given that I cannot invoke 'explain' on its result?
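The closest I could get is the sketch below. It assumes that count on a DataFrame behaves like a global aggregation, so it builds `groupBy().count()`, which is still a DataFrame, and calls `explain` on that. The name `countAsDf` is mine, and I am not certain this plan is identical to what the count action runs.

```scala
import org.apache.spark.sql.SparkSession

// In a Databricks notebook `spark` already exists; getOrCreate() reuses it.
val spark = SparkSession.builder()
  .appName("count-plan-sketch")
  .master("local[*]")
  .getOrCreate()

val myRange = spark.range(1000).toDF("number")
val divisBy2 = myRange.where("number % 2 = 0")

// Assumption: count() is comparable to a global aggregation with no grouping keys.
// Unlike count(), groupBy().count() returns a DataFrame, so explain() is available.
val countAsDf = divisBy2.groupBy().count()

// Prints the physical plan; an Exchange operator in it would indicate a shuffle.
countAsDf.explain()

// Compare the aggregation result with the action's result.
val viaAggregation: Long = countAsDf.collect().head.getLong(0)
val viaAction: Long = divisBy2.count()
println(s"groupBy().count(): $viaAggregation, count(): $viaAction")
```

Both values come out the same, so the aggregation at least computes the same thing as the action, even if I cannot confirm the plans are the same.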

Thanks.

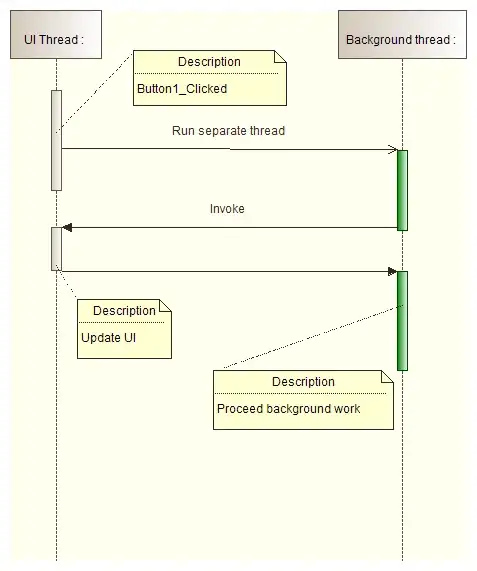

This image is a screenshot of the Spark UI, taken from a Databricks notebook. It shows a shuffle write and a shuffle read operation. Does that mean there is a wide transformation?