There are quite a few posts about this specific issue, but I have been unable to solve the problem. I have been experimenting with LDA on the 20 Newsgroups corpus with both the scikit-learn and Gensim implementations. The literature reports that perplexity usually decreases as the number of topics increases, but I am getting different results.

I have already experimented with different parameters, but in general the perplexity increases for the test set and decreases for the training set as the number of topics increases. This could indicate that the model is overfitting the training set. However, similar patterns occur with other text data sets, and studies using this specific data set have reported a decrease in perplexity (e.g. ng20 perplexity).

I have experimented with scikit-learn, Gensim and the Gensim Mallet wrapper. The packages show different perplexity values, which is to be expected since LDA is randomly initialized and the packages use different inference algorithms. The common pattern, however, is that the test perplexity increases with the number of topics in every package, which contradicts many papers in the literature.

    # imports for code sample
    from sklearn.feature_extraction.text import CountVectorizer
    from sklearn import datasets
    from sklearn.model_selection import train_test_split
    from sklearn.decomposition import LatentDirichletAllocation

    # small sample code
    # retrieve the data
    newsgroups_all = datasets.fetch_20newsgroups(subset='all', remove=('headers', 'footers', 'quotes'), shuffle=True)

    print("Extracting tf features for LDA...")
    tf_vectorizer_train = CountVectorizer(max_df=0.95, min_df=2, stop_words='english')
    X = tf_vectorizer_train.fit_transform(newsgroups_all.data)
    X_train, X_test = train_test_split(X, test_size=0.2, random_state=42)

    k = 10  # number of topics; this is the value I vary (5, 10, 15, ...)
    lda = LatentDirichletAllocation(n_components=k, doc_topic_prior=1/k, topic_word_prior=0.1)
    lda.fit(X_train)

    # perplexity on held-out and on training documents
    perp_test = lda.perplexity(X_test)
    perp_train = lda.perplexity(X_train)

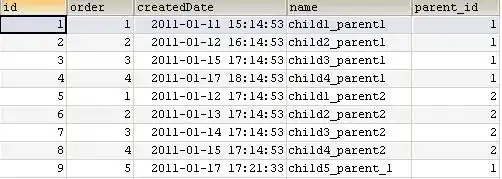

I expect all perplexities to decrease, but I am getting the following output:

k = 5, train perplexity: 5531.15, test perplexity: 7740.95

k = 10, train perplexity: 5202.80, test perplexity: 8805.57

k = 15, train perplexity: 5095.42, test perplexity: 10193.42
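A sweep over several values of k, like the one that produces output of this shape, could be sketched as follows. This is only an illustration under stated assumptions: the helper name `perplexity_sweep` and the tiny `toy_docs` corpus are hypothetical stand-ins so the snippet runs without downloading 20 Newsgroups, and it is not the exact script behind the numbers above. Unlike the sample code, it fits the vocabulary on the training documents only and fixes `random_state`, which may or may not change the trend.

```python
import numpy as np
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import train_test_split


def perplexity_sweep(docs, topic_counts, seed=42):
    """Fit one LDA model per topic count; return {k: (train_perp, test_perp)}."""
    train_docs, test_docs = train_test_split(docs, test_size=0.2, random_state=seed)

    # Build the vocabulary from the training documents only,
    # then reuse it unchanged for the held-out documents.
    vectorizer = CountVectorizer(stop_words="english")
    X_train = vectorizer.fit_transform(train_docs)
    X_test = vectorizer.transform(test_docs)

    results = {}
    for k in topic_counts:
        lda = LatentDirichletAllocation(
            n_components=k,
            doc_topic_prior=1.0 / k,
            topic_word_prior=0.1,
            random_state=seed,  # fixed seed so runs are comparable
        )
        lda.fit(X_train)
        results[k] = (lda.perplexity(X_train), lda.perplexity(X_test))
    return results


# Hypothetical toy corpus (two repeated "themes"); replace with real documents,
# e.g. newsgroups_all.data from the sample code.
toy_docs = [
    "rocket orbit launch satellite nasa",
    "hockey goal team player season",
] * 10

results = perplexity_sweep(toy_docs, topic_counts=[2, 3])
for k, (perp_train, perp_test) in results.items():
    print(f"k = {k}, train perplexity: {perp_train:.2f}, test perplexity: {perp_test:.2f}")
```

On real data the list of topic counts would be something like `[5, 10, 15]`; the toy corpus is far too small for its perplexity values to mean anything.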

Edit: After running 5-fold cross-validation (from 10-150 topics, step size: 10) and averaging the perplexity over the folds, the following plot is created. It seems that the perplexity for the training set only decreases between 1-15 topics, and then slightly increases for higher topic numbers. The perplexity of the test set increases constantly, almost linearly. Could there be a difference between how perplexity is calculated in the sklearn/Gensim implementations and in the research that reports a decrease in perplexity?
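To make the question about differing calculations concrete, here is a minimal sketch of two conventions as I understand them from the documentation. scikit-learn describes `perplexity` as exp(-log-likelihood per word), computed from its variational bound, while Gensim's `log_perplexity` returns a per-word likelihood bound and documents the perplexity as 2^(-bound). The function names below are hypothetical and the numbers are a synthetic sanity check, not results from either library.

```python
import math


def perplexity_natural_log(total_log_likelihood, n_tokens):
    """exp(-L / N), where L is a total log-likelihood (or bound) in nats."""
    return math.exp(-total_log_likelihood / n_tokens)


def perplexity_base2(per_word_bound):
    """2 ** (-bound), where bound is a per-word likelihood bound."""
    return 2.0 ** (-per_word_bound)


# Sanity check: a model that assigns probability 1/V to every token
# should have perplexity V under the natural-log convention.
V = 1000          # vocabulary size (illustrative)
n_tokens = 5000   # number of tokens in the evaluation set (illustrative)
total_ll = n_tokens * math.log(1.0 / V)

perp_nat = perplexity_natural_log(total_ll, n_tokens)
print(perp_nat)  # close to V

# The same per-word value read under the base-2 convention gives a
# different number, so values from different tools are not directly comparable.
per_word = total_ll / n_tokens
perp_b2 = perplexity_base2(per_word)
print(perp_b2)
```

Both libraries evaluate a variational lower bound rather than the exact held-out likelihood, so absolute values may also differ from papers that use other estimators; only the trend over k within one tool is comparable.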