I am currently getting into building neural networks with Keras/TensorFlow and working through some example problems. At the moment I am trying to understand how to properly save and load my current model via model.save() and load_model(). I would expect that, if everything is set up properly, loading a pre-trained model and continuing the training should not spoil my prior accuracies but simply continue exactly where I left off.

However, it doesn't. My accuracies start to fluctuate strongly after I load the model and need a while to return to their prior values:

First Run

Continued Run

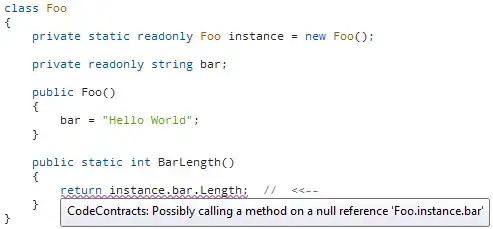

After digging through various threads with possible explanations (none of them were applicable to my findings) I think I figured out the reason:

I use tf.keras.optimizers.Adam for my weight optimization and after checking its initializer

    def __init__(self, [...], **kwargs):
        super(Adam, self).__init__(**kwargs)
        with K.name_scope(self.__class__.__name__):
            self.iterations = K.variable(0, dtype='int64', name='iterations')
            [...]

    def get_config(self):
        config = {
            'lr': float(K.get_value(self.lr)),
            'beta_1': float(K.get_value(self.beta_1)),
            'beta_2': float(K.get_value(self.beta_2)),
            'decay': float(K.get_value(self.decay)),
            'epsilon': self.epsilon,
            'amsgrad': self.amsgrad
        }

it seems as if the "iterations" counter is always reset to 0 and its current value is neither stored nor loaded when the entire model is saved, as it is not part of the config dict. This seems to contradict the statement that model.save saves "the state of the optimizer, allowing to resume training exactly where you left off." (https://keras.io/getting-started/faq/). Since the iterations counter is what drives the time-based decay of the learning rate in the Adam implementation

    1. / (1. + self.decay * math_ops.cast(self.iterations,
                                          K.dtype(self.decay)))

my model will always restart with the initial "large" learning rate, even if I set the initial_epoch parameter in model.fit() to the actual epoch number at which my model was saved (see the plots above).
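To make the size of this effect concrete, here is a minimal sketch that evaluates the decay factor from the expression quoted above in plain Python. The function name effective_lr and the numbers used (base learning rate, decay, step count) are hypothetical and chosen only for illustration. Note that with decay = 0 the factor is always 1, so in that case the counter would not change the learning rate through this term at all.

```python
def effective_lr(base_lr, decay, iterations):
    """Learning rate after time-based decay: base_lr * 1 / (1 + decay * iterations)."""
    return base_lr * (1.0 / (1.0 + decay * iterations))


# Hypothetical values, not taken from the model in the question.
base_lr = 0.001
decay = 1e-4
steps_done = 10_000  # optimizer steps completed before saving

lr_resumed = effective_lr(base_lr, decay, steps_done)  # counter restored
lr_reset = effective_lr(base_lr, decay, 0)             # counter back at 0
lr_no_decay = effective_lr(base_lr, 0.0, steps_done)   # decay disabled

print("restored counter:", lr_resumed)
print("reset counter:   ", lr_reset)
print("decay disabled:  ", lr_no_decay)
```

With these assumed numbers, a reset counter would put the learning rate back at its base value, while a restored counter would leave it at roughly half of that.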

So my questions are:

- Is this intended behavior?

- If so, how does this agree with the cited statement from the Keras FAQ that model.save() allows you to "resume training exactly where you left off"?

- Is there a way to actually save and restore the Adam optimizer, including the iterations counter, without writing my own optimizer? (I already discovered that this is a possible solution, but I was wondering whether there really is no simpler method.)

Edit: I found the reason/solution: I called model.compile after load_model, and this resets the optimizer while keeping the weights (see also "Does model.compile() initialize all the weights and biases in Keras (tensorflow backend)?").
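The effect described in the edit can be illustrated without Keras. The following is a minimal pure-Python sketch, assuming the textbook Adam update rule for a single scalar weight. The class TinyAdam, the gradient values, and the step counts are all hypothetical and are not the Keras implementation. It compares the next update when the optimizer state is kept with the next update when the optimizer is rebuilt from scratch, which is what a second compile call amounts to.

```python
import math


class TinyAdam:
    """Scalar Adam update rule for illustration; not the Keras implementation."""

    def __init__(self, lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-8):
        self.lr = lr
        self.beta_1 = beta_1
        self.beta_2 = beta_2
        self.epsilon = epsilon
        self.iterations = 0  # step counter
        self.m = 0.0         # first-moment estimate
        self.v = 0.0         # second-moment estimate

    def update(self, grad):
        """Consume one gradient and return the signed change applied to the weight."""
        self.iterations += 1
        self.m = self.beta_1 * self.m + (1.0 - self.beta_1) * grad
        self.v = self.beta_2 * self.v + (1.0 - self.beta_2) * grad * grad
        # Bias correction depends on the step counter.
        m_hat = self.m / (1.0 - self.beta_1 ** self.iterations)
        v_hat = self.v / (1.0 - self.beta_2 ** self.iterations)
        return -self.lr * m_hat / (math.sqrt(v_hat) + self.epsilon)


# Hypothetical gradient history: large early in training, small near convergence.
history = [1.0] * 50 + [0.01] * 50
next_grad = 0.01

# Case 1: the optimizer state survives the save/load round trip.
kept = TinyAdam()
for g in history:
    kept.update(g)
step_kept = abs(kept.update(next_grad))

# Case 2: the weights survive, but the optimizer is rebuilt from scratch.
fresh = TinyAdam()
step_reset = abs(fresh.update(next_grad))

print(f"step with kept state:  {step_kept:.2e}")
print(f"step with reset state: {step_reset:.2e}")
```

In this toy setting the first step of the fresh optimizer has a magnitude close to the full learning rate regardless of how small the gradient is, while the optimizer with kept state takes a step well over an order of magnitude smaller. This may be one contributor to the fluctuations after reloading, and it suggests continuing training directly on the object returned by load_model rather than compiling it again.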