I want to delete some data from my table, so I wrote a query to delete it. First I selected the data I wanted to delete using this query, and it shows me the correct data:

    SELECT * FROM PATS.Discipline WHERE Code LIKE '%DHA-DIS%'

Then I wrote the DELETE using the same WHERE condition, but it is not working:

    DELETE FROM PATS.Discipline WHERE Code LIKE '%DHA-DIS%'

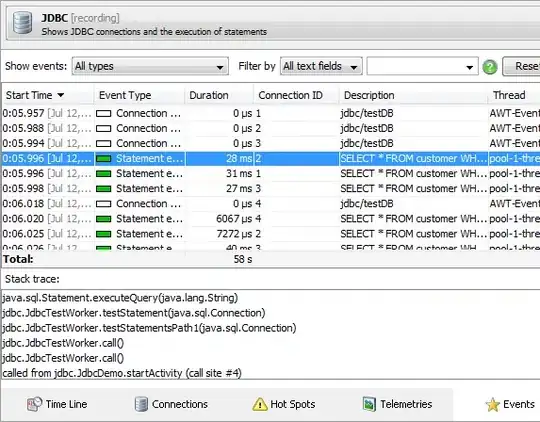

Here are some screenshots I took while executing the query.

This is the result of the last execution: I waited for 1 minute and then stopped the execution.

Updated on 15-OCT-2019

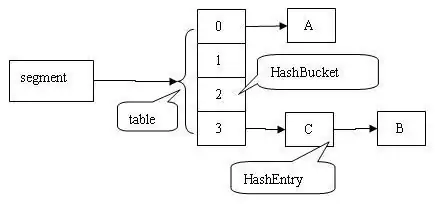

I tried the same scenario today: I imported 5000 records into the table and tried to delete those 5000 records using the same query, and surprisingly it works. I tried both cases:

1. The data has no foreign key references: it works fine. Here is the screenshot.

Both cases are working now. I don't know what happened to SQL Server that day.